PPO¶

Overview¶

PPO(Proximal Policy Optimization) was proposed in Proximal Policy Optimization Algorithms. PPO follows the idea of TRPO, which restricts the step of policy update by KL-divergence, and uses clipped probability ratios of the new and old policies to replace the direct KL-divergence restriction. This adaptation is simpler to implement and avoid the calculation of the Hessian matrix in TRPO.

Quick Facts¶

PPO is a model-free and policy-based RL algorithm.

PPO supports both discrete and continuous action spaces.

PPO supports off-policy mode and on-policy mode.

PPO can be equipped with RNN.

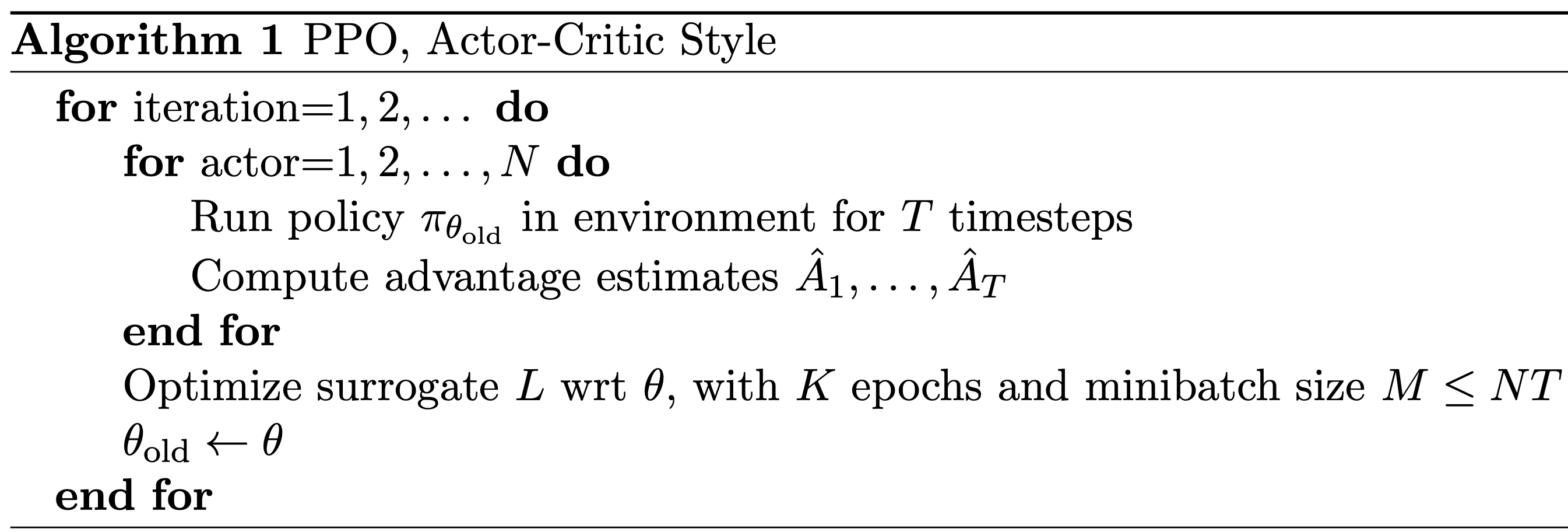

PPO on-policy implementation use double loop(epoch loop and minibatch loop)

Key Equations or Key Graphs¶

PPO use clipped probability ratios in the policy gradient to prevent the policy from too rapid changes:

with the probability ratio \(r_t(\theta)\) defined as:

When \(\hat{A}_t > 0\), \(r_t(\theta) > 1 + \epsilon\) will be clipped. While when \(\hat{A}_t < 0\), \(r_t(\theta) < 1 - \epsilon\) will be clipped. However, in the paper Mastering Complex Control in MOBA Games with Deep Reinforcement Learning, the authors claim that when \(\hat{A}_t < 0\), a too large \(r_t(\theta)\) should also be clipped, which introduces dual clip:

Extensions¶

- PPO can be combined with:

Multi-step learning

RNN

GAE

Note

Indeed, the standard implementation of PPO contains the many additional optimizations which are not described in the paper. Further details can be found in IMPLEMENTATION MATTERS IN DEEP POLICY GRADIENTS: A CASE STUDY ON PPO AND TRPO.

Implementation¶

The default config is defined as follows:

- class ding.policy.ppo.PPOPolicy(cfg: dict, model: Optional[Union[type, torch.nn.modules.module.Module]] = None, enable_field: Optional[List[str]] = None)[source]¶

- Overview:

Policy class of on policy version PPO algorithm.

- class ding.model.template.vac.VAC(obs_shape: Union[int, ding.utils.type_helper.SequenceType], action_shape: Union[int, ding.utils.type_helper.SequenceType], share_encoder: bool = True, continuous: bool = False, encoder_hidden_size_list: ding.utils.type_helper.SequenceType = [128, 128, 64], actor_head_hidden_size: int = 64, actor_head_layer_num: int = 1, critic_head_hidden_size: int = 64, critic_head_layer_num: int = 1, activation: Optional[torch.nn.modules.module.Module] = ReLU(), norm_type: Optional[str] = None, sigma_type: Optional[str] = 'independent', bound_type: Optional[str] = None)[source]

- Overview:

The VAC model.

- Interfaces:

__init__,forward,compute_actor,compute_critic

- __init__(obs_shape: Union[int, ding.utils.type_helper.SequenceType], action_shape: Union[int, ding.utils.type_helper.SequenceType], share_encoder: bool = True, continuous: bool = False, encoder_hidden_size_list: ding.utils.type_helper.SequenceType = [128, 128, 64], actor_head_hidden_size: int = 64, actor_head_layer_num: int = 1, critic_head_hidden_size: int = 64, critic_head_layer_num: int = 1, activation: Optional[torch.nn.modules.module.Module] = ReLU(), norm_type: Optional[str] = None, sigma_type: Optional[str] = 'independent', bound_type: Optional[str] = None) None[source]

- Overview:

Init the VAC Model according to arguments.

- Arguments:

obs_shape (

Union[int, SequenceType]): Observation’s space.action_shape (

Union[int, SequenceType]): Action’s space.share_encoder (

bool): Whether share encoder.continuous (

bool): Whether collect continuously.encoder_hidden_size_list (

SequenceType): Collection ofhidden_sizeto pass toEncoderactor_head_hidden_size (

Optional[int]): Thehidden_sizeto pass to actor-nn’sHead.

- actor_head_layer_num (

int):The num of layers used in the network to compute Q value output for actor’s nn.

critic_head_hidden_size (

Optional[int]): Thehidden_sizeto pass to critic-nn’sHead.

- critic_head_layer_num (

int):The num of layers used in the network to compute Q value output for critic’s nn.

- activation (

Optional[nn.Module]):The type of activation function to use in

MLPthe afterlayer_fn, ifNonethen default set tonn.ReLU()

- norm_type (

Optional[str]):The type of normalization to use, see

ding.torch_utils.fc_blockfor more details`

- compute_actor(x: torch.Tensor) Dict[source]

- Overview:

Execute parameter updates with

'compute_actor'mode Use encoded embedding tensor to predict output.- Arguments:

- inputs (

torch.Tensor):The encoded embedding tensor, determined with given

hidden_size, i.e.(B, N=hidden_size).hidden_size = actor_head_hidden_size- Returns:

- outputs (

Dict):Run with encoder and head.

- ReturnsKeys:

logit (

torch.Tensor): Logit encoding tensor, with same size as inputx.- Shapes:

logit (

torch.FloatTensor): \((B, N)\), where B is batch size and N isaction_shape- Examples:

- compute_actor_critic(x: torch.Tensor) Dict[source]

- Overview:

Execute parameter updates with

'compute_actor_critic'mode Use encoded embedding tensor to predict output.- Arguments:

inputs (

torch.Tensor): The encoded embedding tensor.- Returns:

- outputs (

Dict):Run with encoder and head.

- ReturnsKeys:

logit (

torch.Tensor): Logit encoding tensor, with same size as inputx.value (

torch.Tensor): Q value tensor with same size as batch size.- Shapes:

logit (

torch.FloatTensor): \((B, N)\), where B is batch size and N isaction_shapevalue (

torch.FloatTensor): \((B, )\), where B is batch size.- Examples:

Note

compute_actor_criticinterface aims to save computation when shares encoder. Returning the combination dictionry.

- compute_critic(x: torch.Tensor) Dict[source]

- Overview:

Execute parameter updates with

'compute_critic'mode Use encoded embedding tensor to predict output.- Arguments:

- inputs (

torch.Tensor):The encoded embedding tensor, determined with given

hidden_size, i.e.(B, N=hidden_size).hidden_size = critic_head_hidden_size- Returns:

- outputs (

Dict):Run with encoder and head.

- Necessary Keys:

value (

torch.Tensor): Q value tensor with same size as batch size.- Shapes:

value (

torch.FloatTensor): \((B, )\), where B is batch size.- Examples:

- forward(inputs: Union[torch.Tensor, Dict], mode: str) Dict[source]

- Overview:

Use encoded embedding tensor to predict output. Parameter updates with VAC’s MLPs forward setup.

- Arguments:

- Forward with

'compute_actor'or'compute_critic':

- inputs (

torch.Tensor):The encoded embedding tensor, determined with given

hidden_size, i.e.(B, N=hidden_size). Whetheractor_head_hidden_sizeorcritic_head_hidden_sizedepend onmode.- Returns:

- outputs (

Dict):Run with encoder and head.

- Forward with

'compute_actor', Necessary Keys:

logit (

torch.Tensor): Logit encoding tensor, with same size as inputx.- Forward with

'compute_critic', Necessary Keys:

value (

torch.Tensor): Q value tensor with same size as batch size.- Shapes:

inputs (

torch.Tensor): \((B, N)\), where B is batch size and N correspondinghidden_sizelogit (

torch.FloatTensor): \((B, N)\), where B is batch size and N isaction_shapevalue (

torch.FloatTensor): \((B, )\), where B is batch size.- Actor Examples:

- Critic Examples:

- Actor-Critic Examples:

The policy gradient and value update of PPO is implemented as follows:

def ppo_error(

data: namedtuple,

clip_ratio: float = 0.2,

use_value_clip: bool = True,

dual_clip: Optional[float] = None

) -> Tuple[namedtuple, namedtuple]:

assert dual_clip is None or dual_clip > 1.0, "dual_clip value must be greater than 1.0, but get value: {}".format(

dual_clip

)

logit_new, logit_old, action, value_new, value_old, adv, return_, weight = data

policy_data = ppo_policy_data(logit_new, logit_old, action, adv, weight)

policy_output, policy_info = ppo_policy_error(policy_data, clip_ratio, dual_clip)

value_data = ppo_value_data(value_new, value_old, return_, weight)

value_loss = ppo_value_error(value_data, clip_ratio, use_value_clip)

return ppo_loss(policy_output.policy_loss, value_loss, policy_output.entropy_loss), policy_info

The Benchmark result of PPO implemented in DI-engine is shown in Benchmark.

References¶

John Schulman, Filip Wolski, Prafulla Dhariwal, Alec Radford, Oleg Klimov: “Proximal Policy Optimization Algorithms”, 2017; [http://arxiv.org/abs/1707.06347 arXiv:1707.06347].

Logan Engstrom, Andrew Ilyas, Shibani Santurkar, Dimitris Tsipras, Firdaus Janoos, Larry Rudolph, Aleksander Madry: “Implementation Matters in Deep Policy Gradients: A Case Study on PPO and TRPO”, 2020; [http://arxiv.org/abs/2005.12729 arXiv:2005.12729].