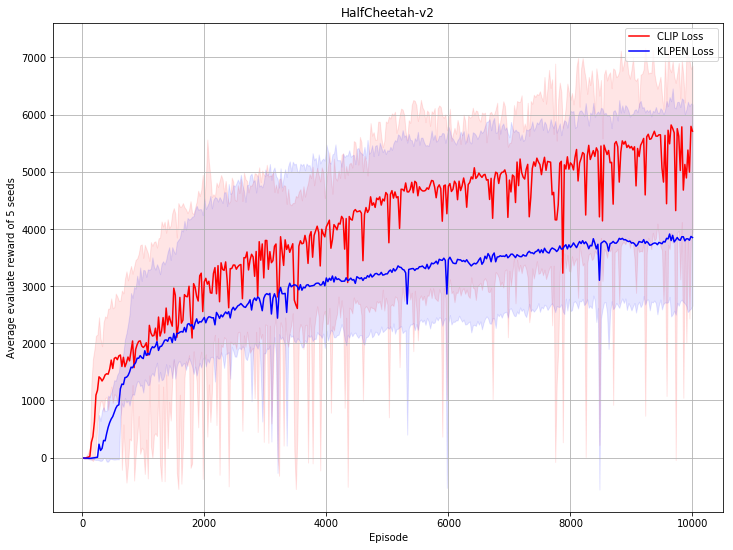

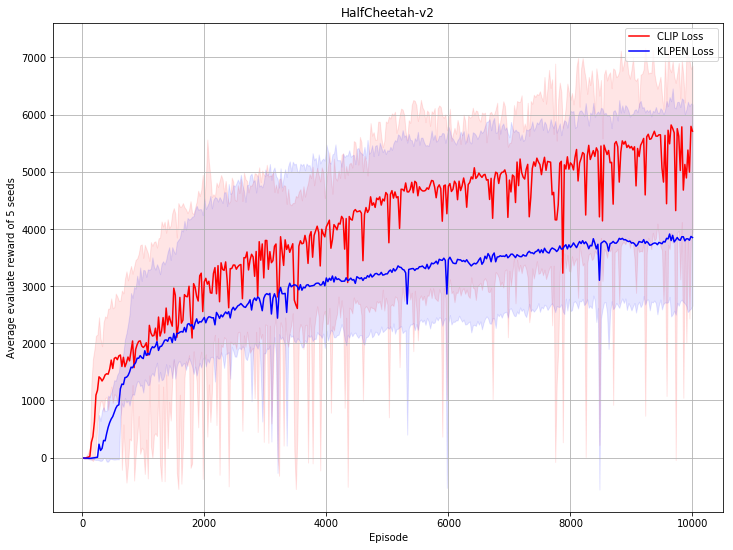

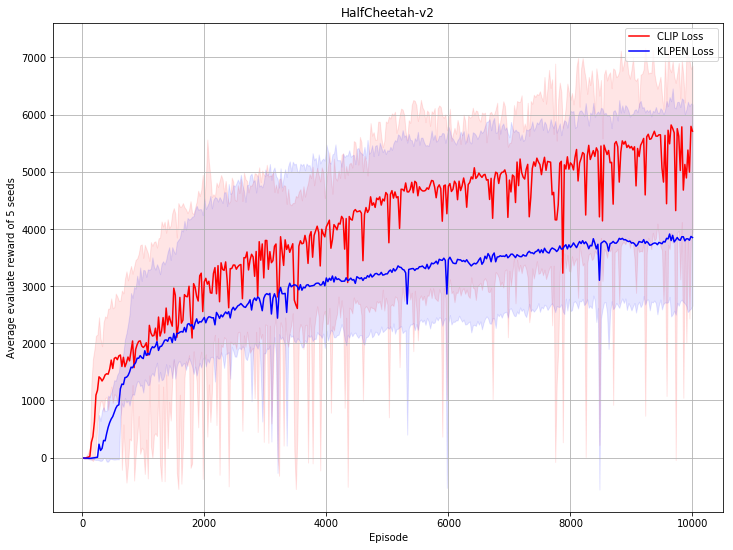

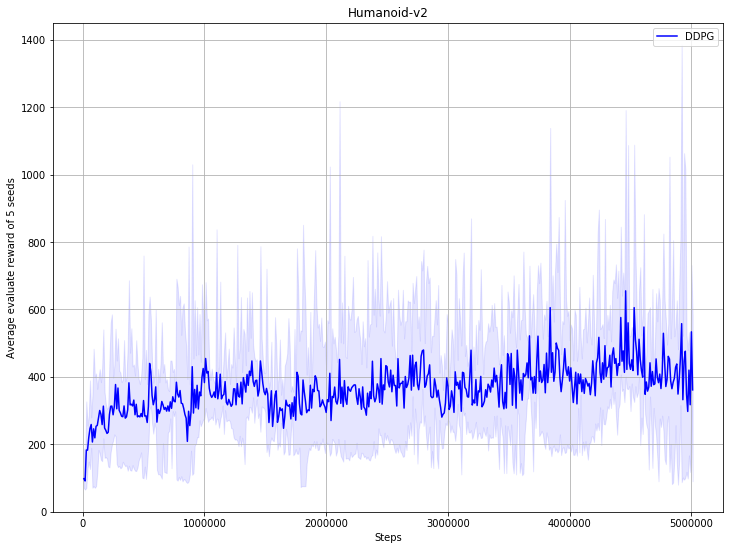

fix PPO bug; add more benchmark result (#47)

* fix PPO bug; add more benchmark result
* refine code
* update benchmark of PPO, after fix bug
* refine code

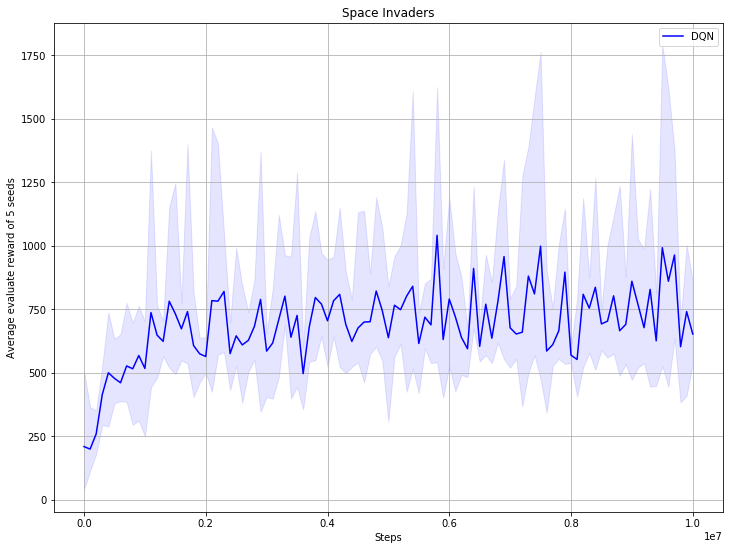

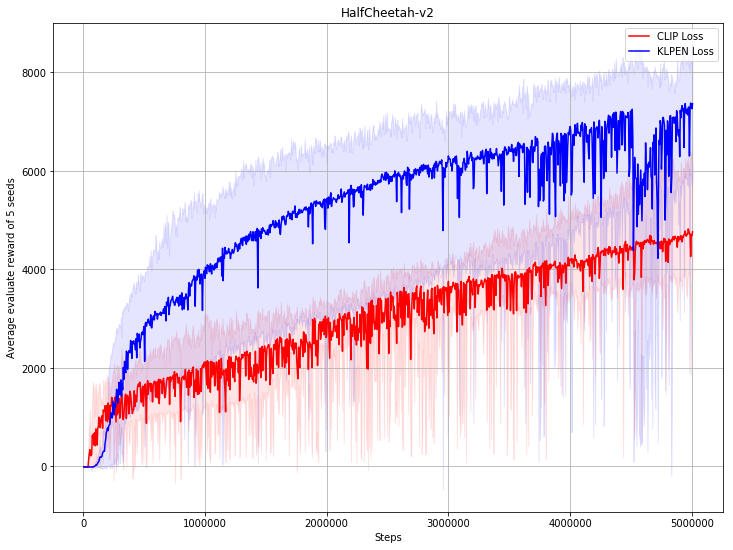

38.7 KB

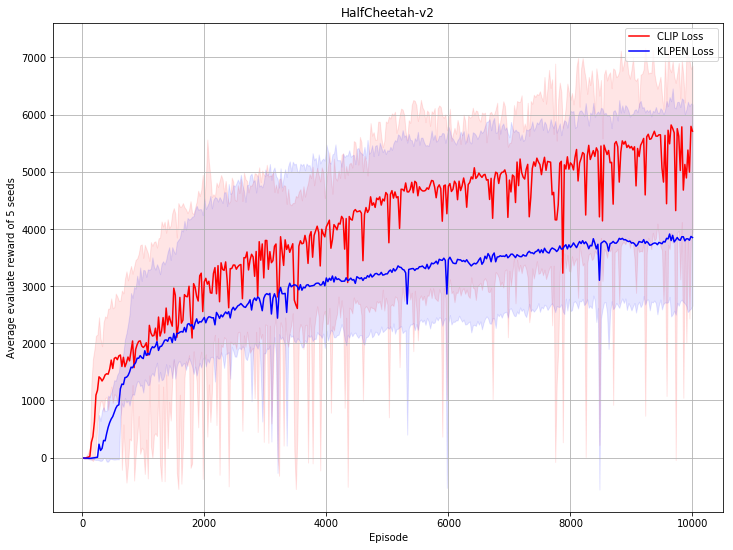

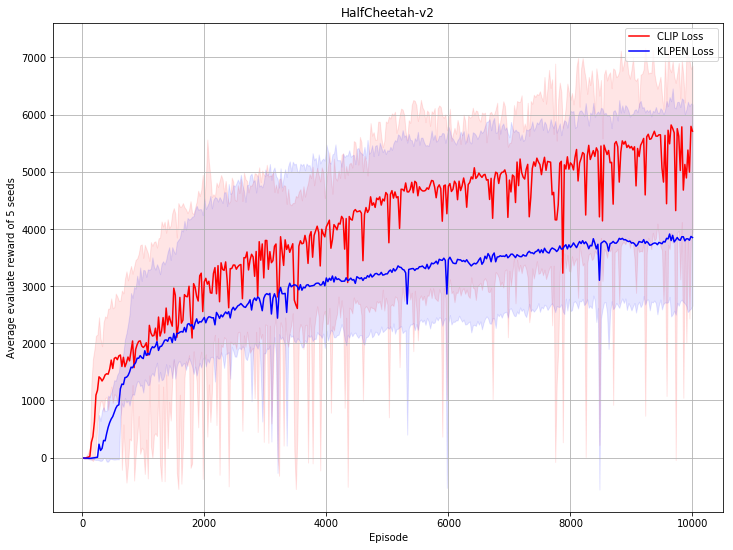

77.3 KB

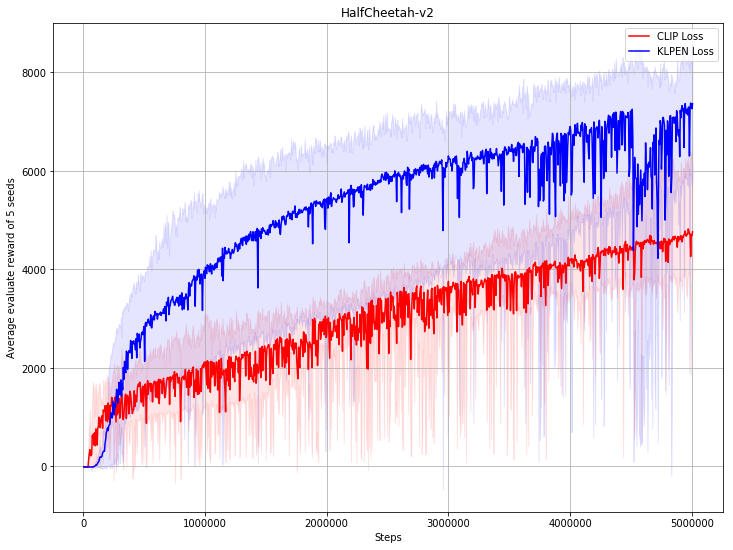

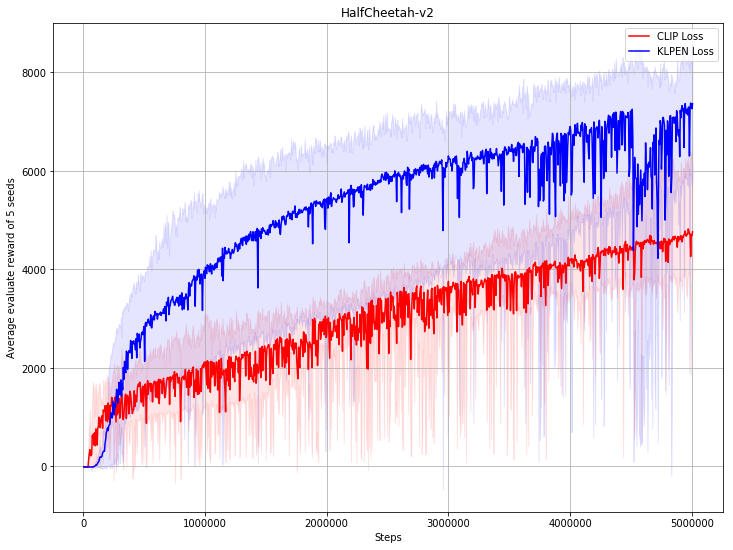

61.1 KB

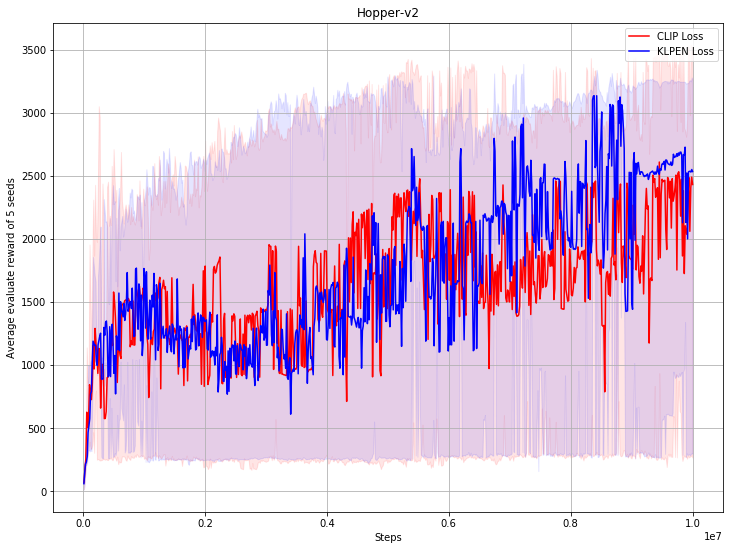

152.0 KB

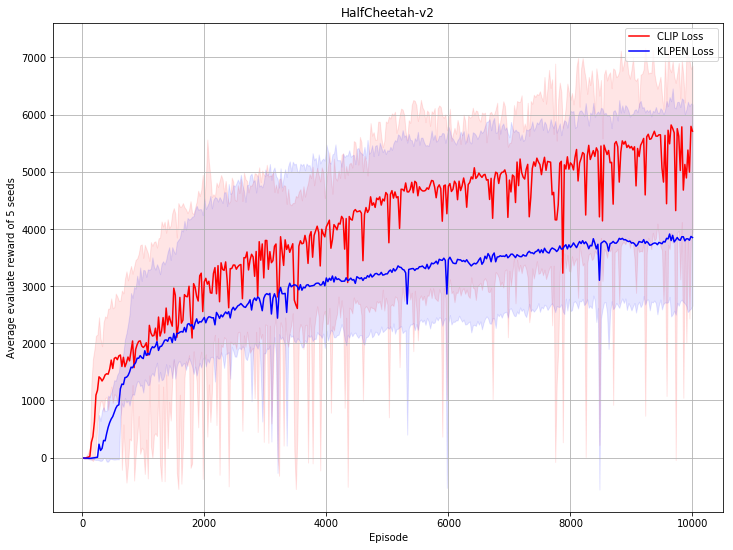

115.0 KB

119.8 KB

152.0 KB