GA3C example (#63)

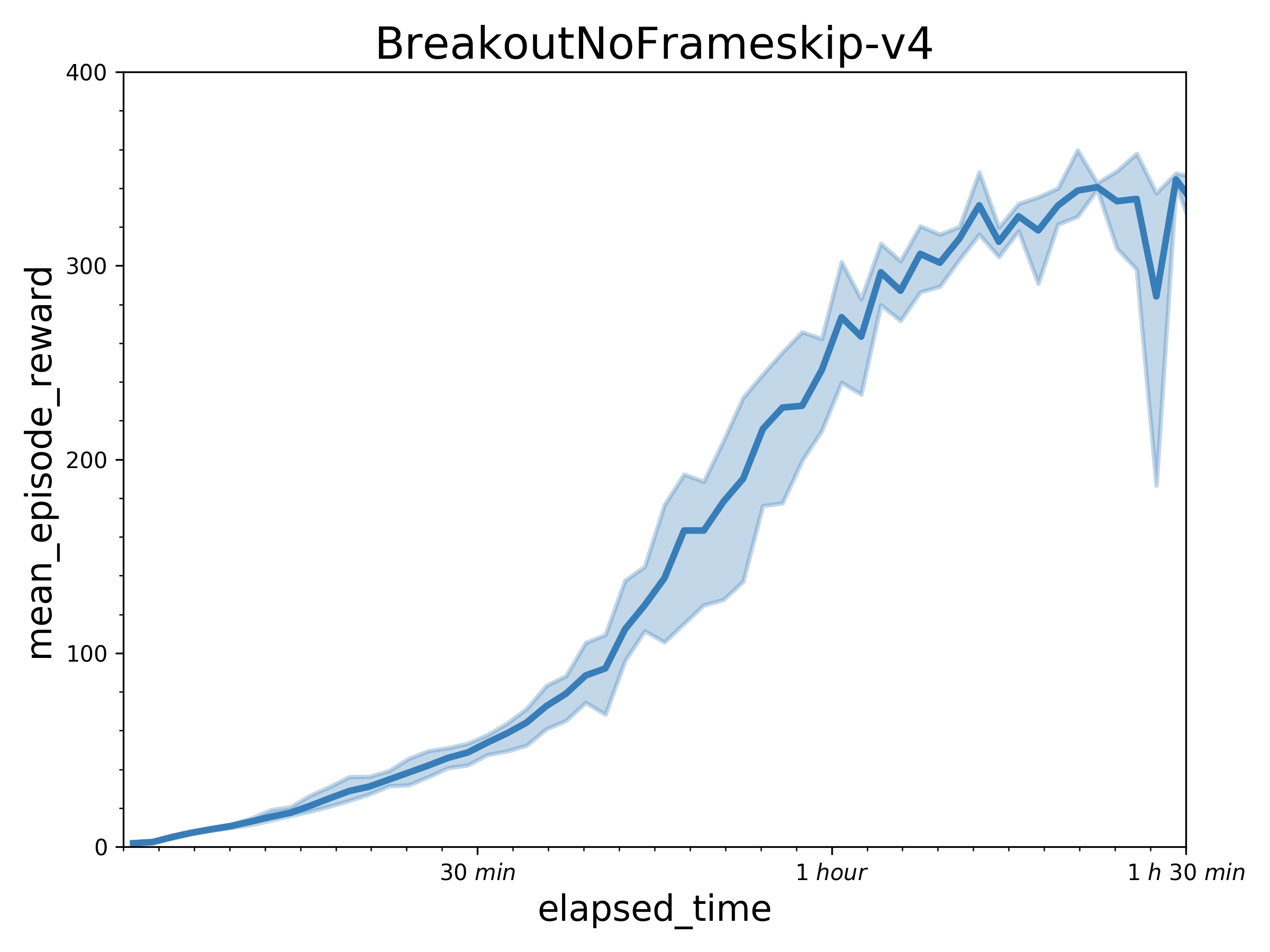

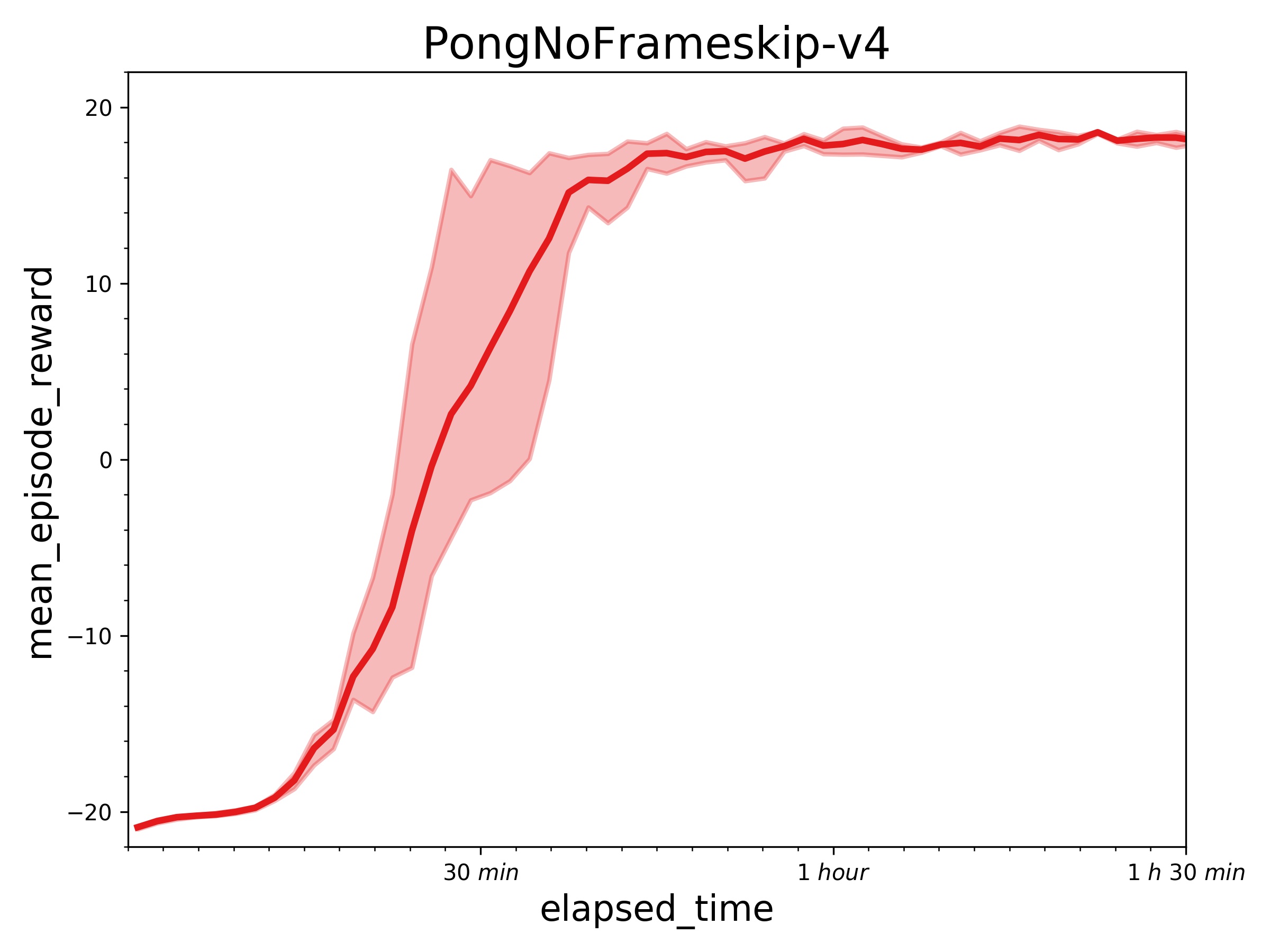

* add IMPALA algorithm and some common utils
* update README.md
* refactor file structure of impala algorithm; separate numpy utils from utils
* add hyperparameter scheduler module; add entropy and lr scheduler in impala
* clip reward in atari wrapper instead of learner side; fix code style
* add benchmark result of impala; refine code of impala example; add obs_format in atari_wrappers
* Update README.md
* add a3c algorithm, A2C example and rl_utils
* require training on a single gpu/cpu
* only check cpu/gpu num in learner
* refine Readme
* update impala benchmark picture; update Readme
* add benchmark result of A2C
* move get_params/set_params in agent_base
* add GA3C example
* Update README.md
* Update README.md
* Update README.md
* Update README.md
* refine Readme
* add benchmark
* add default safe eps in numpy logp calculation
* refine document; make unittest stable

268.2 KB

248.2 KB

examples/GA3C/README.md

0 → 100644

examples/GA3C/atari_agent.py

0 → 100644

examples/GA3C/atari_model.py

0 → 120000

examples/GA3C/ga3c_config.py

0 → 100644

examples/GA3C/learner.py

0 → 100644

examples/GA3C/run_simulators.sh

0 → 100644

examples/GA3C/simulator.py

0 → 100644

examples/GA3C/train.py

0 → 100644