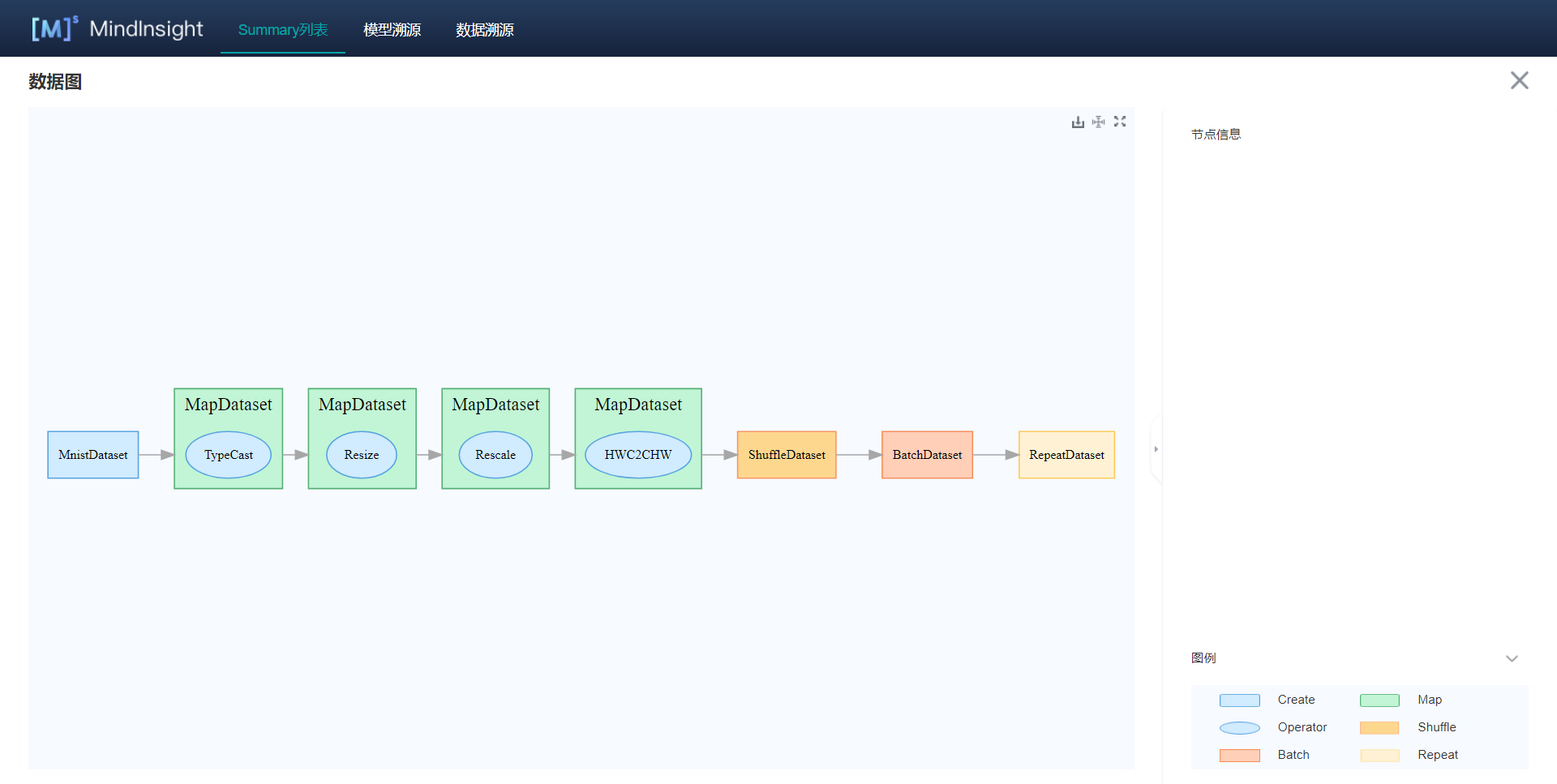

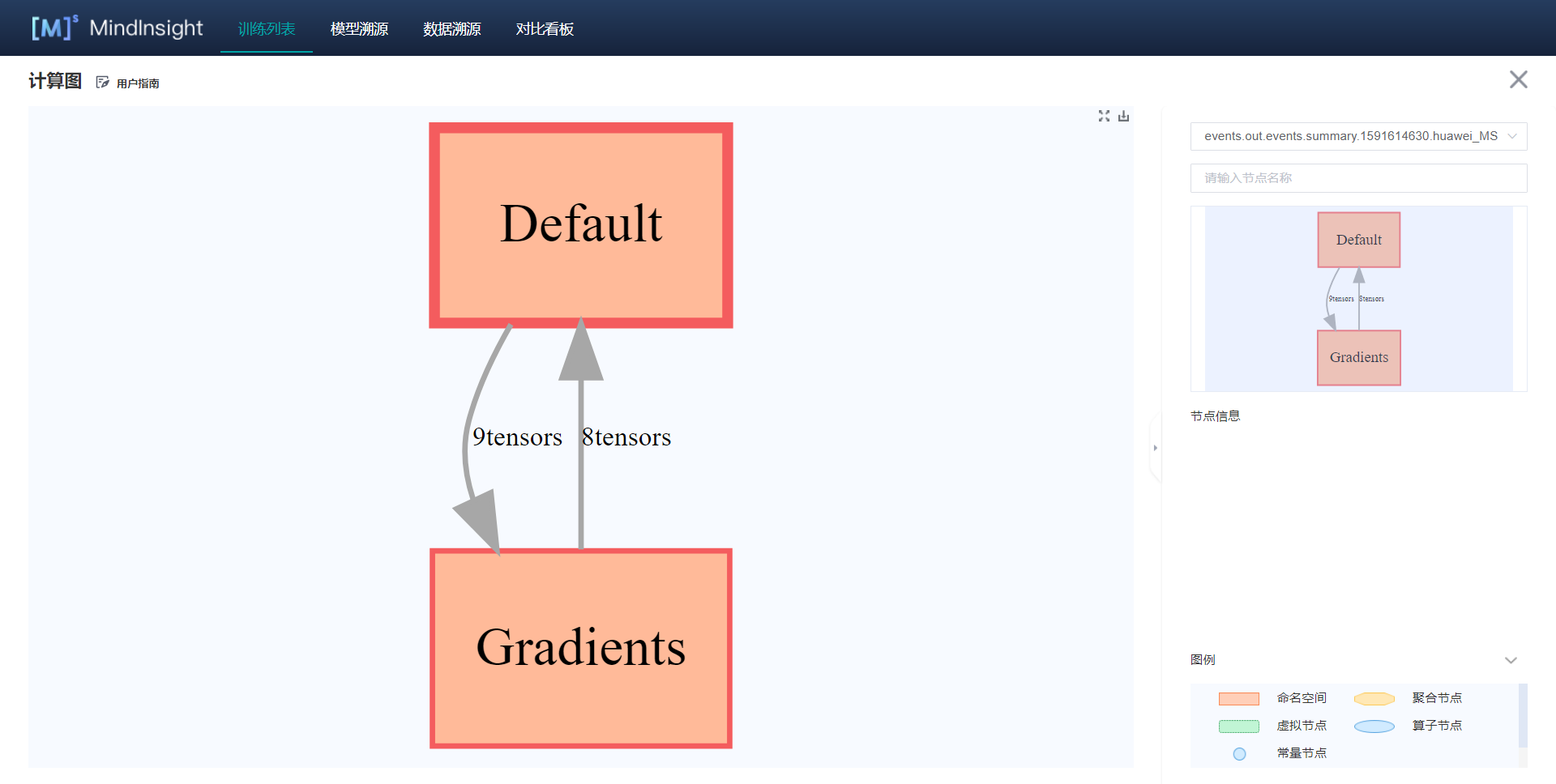

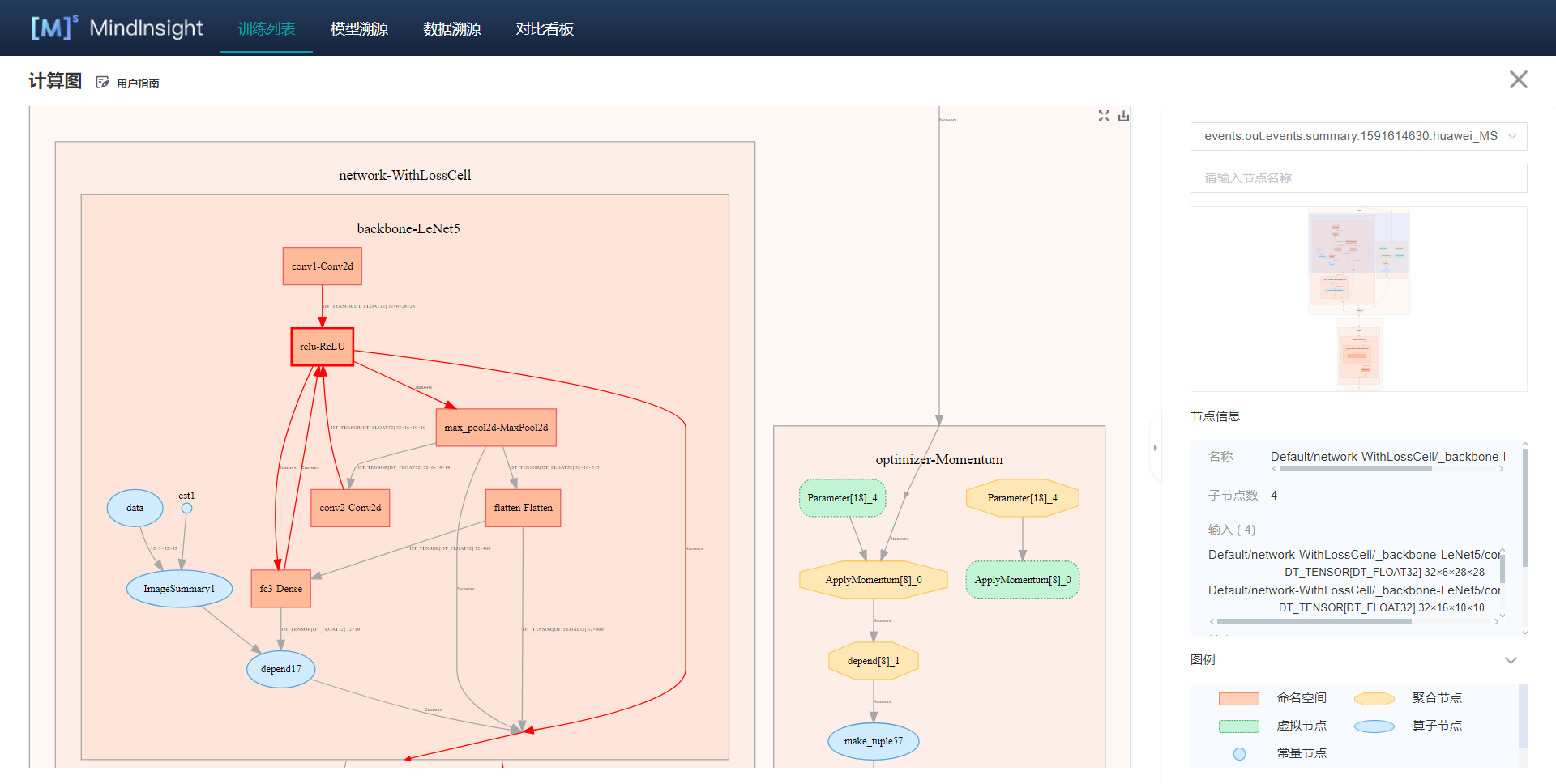

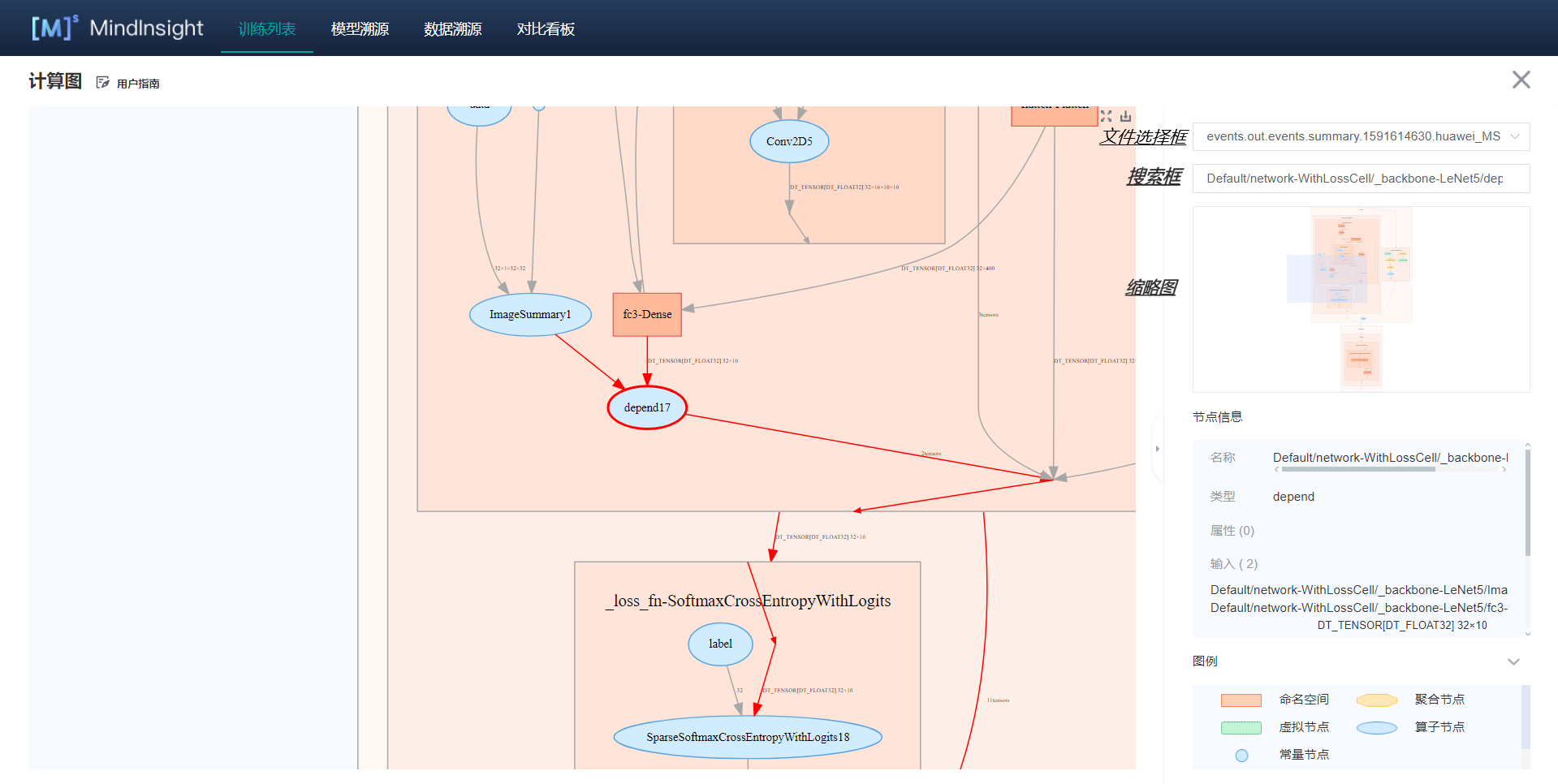

!280 Add new data graph and calculation graph

Merge pull request !280 from chengxiaoli/add_doc


59.4 KB

71.6 KB

140.7 KB

163.0 KB

31.5 KB
