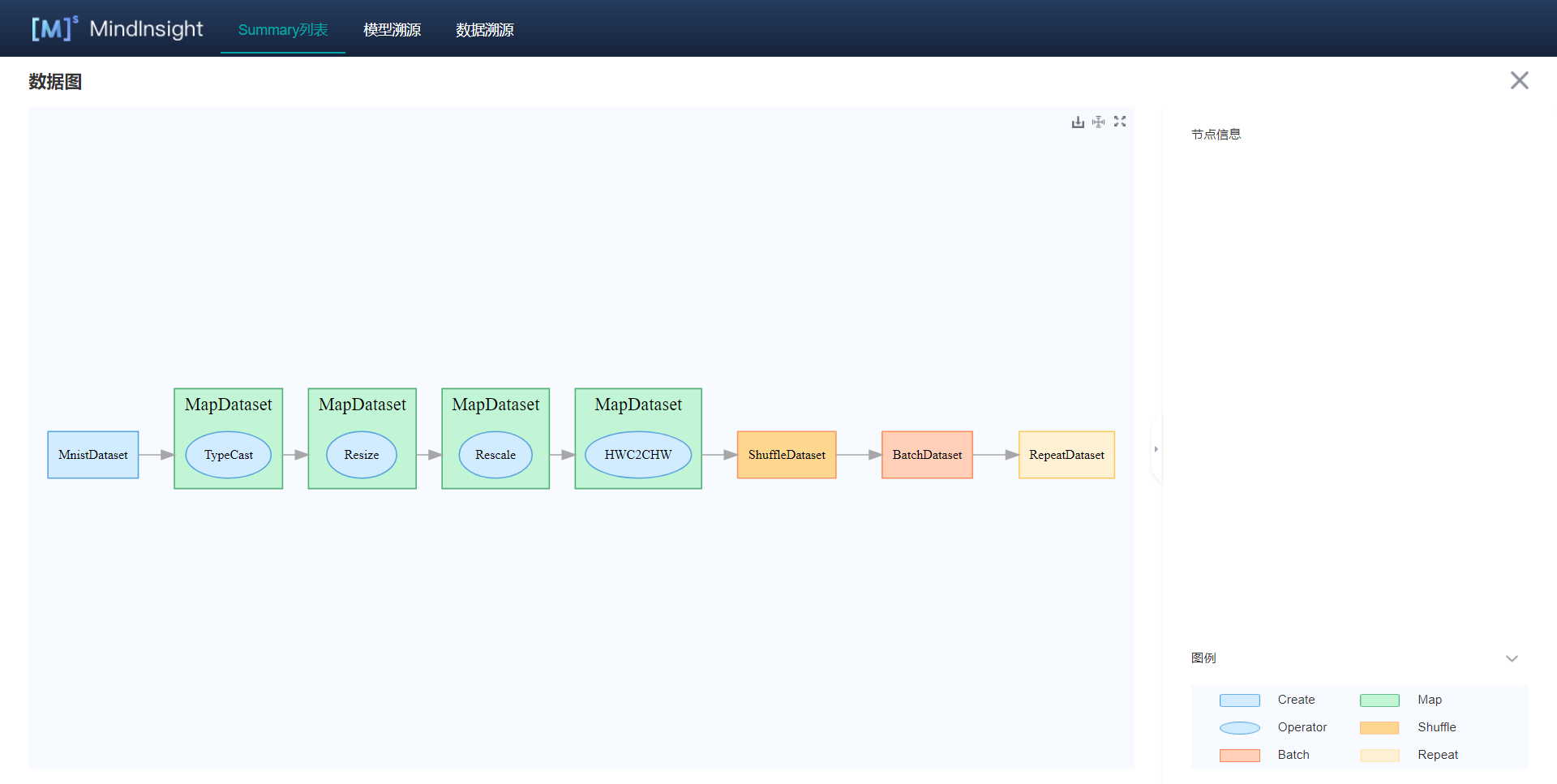

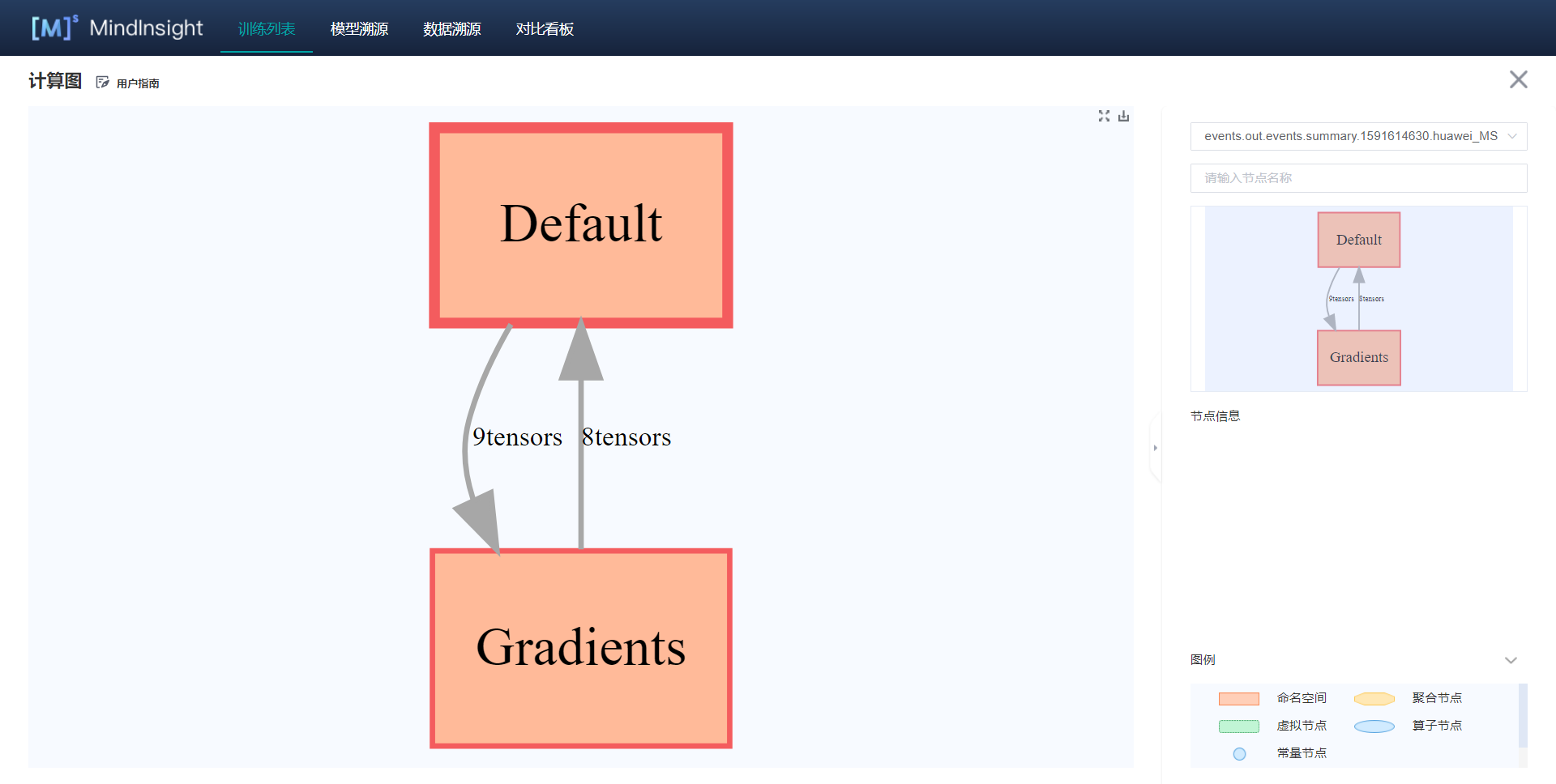

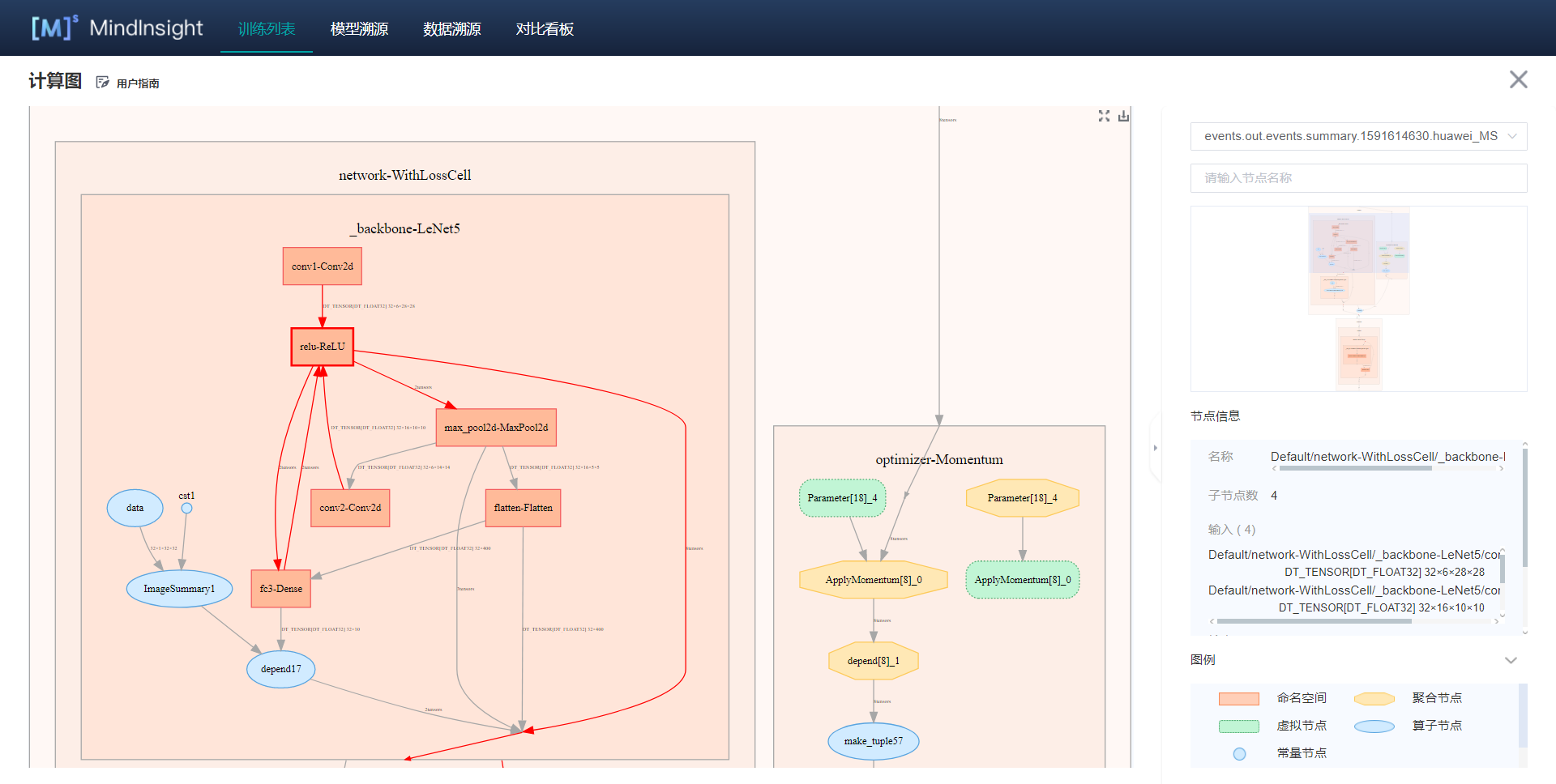

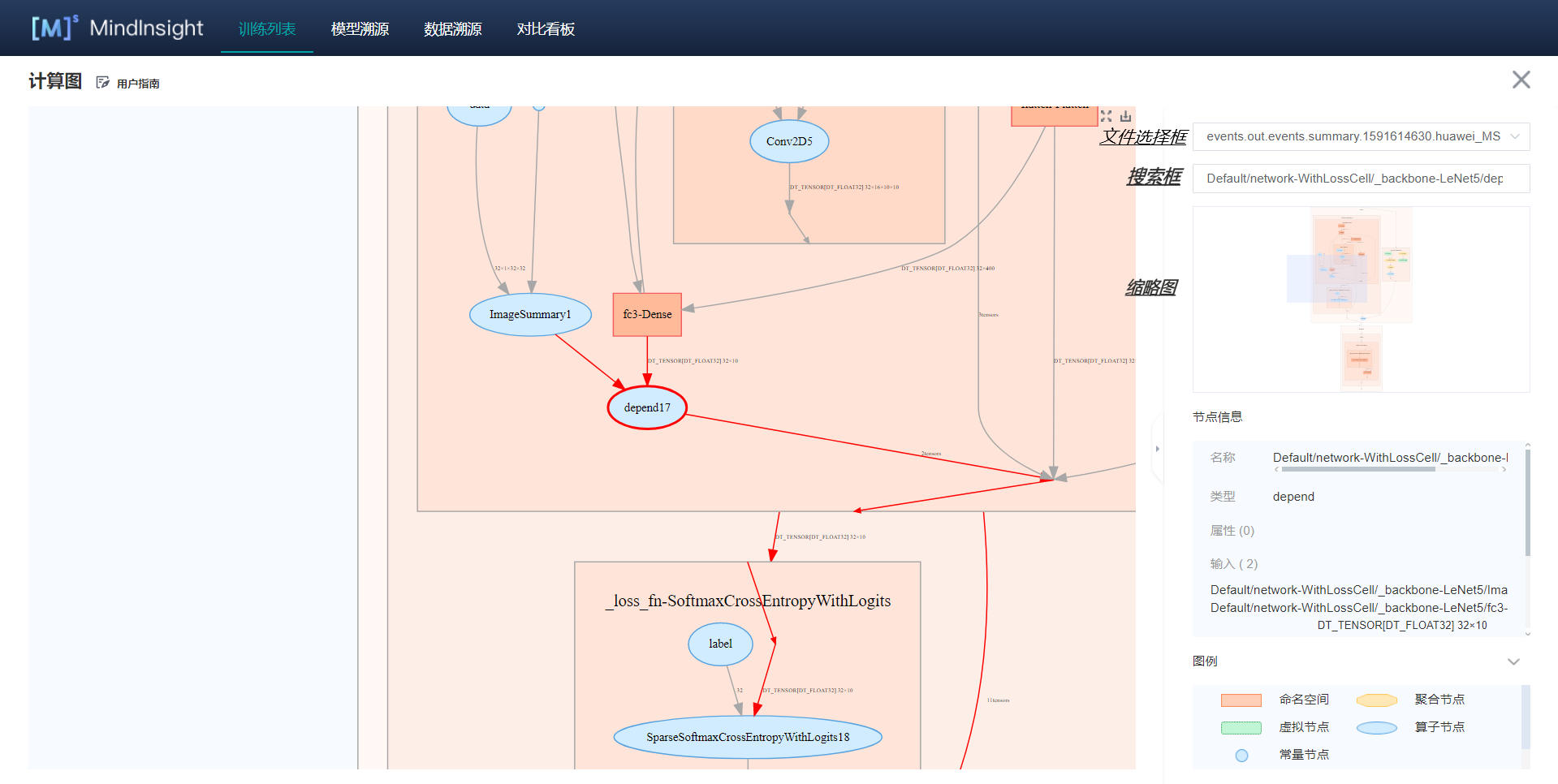

Add new data graph and calculation graph

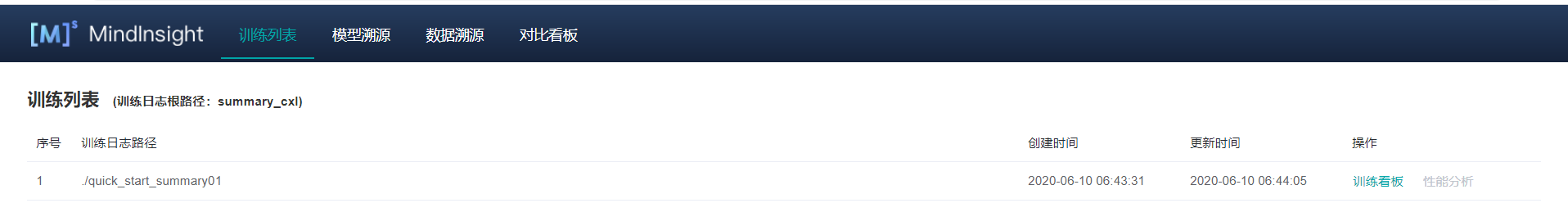

- new file: tutorials/notebook/mindinsight/calculate.ipynb
- new file: tutorials/notebook/mindinsight/datagraphic.ipynb
- new file: tutorials/notebook/mindinsight/images/001.png
- new file: tutorials/notebook/mindinsight/images/002.png
- new file: tutorials/notebook/mindinsight/images/003.png
- new file: tutorials/notebook/mindinsight/images/004.png
- new file: tutorials/notebook/mindinsight/images/005.png
