update ppvehicle modelzoo (#6679)

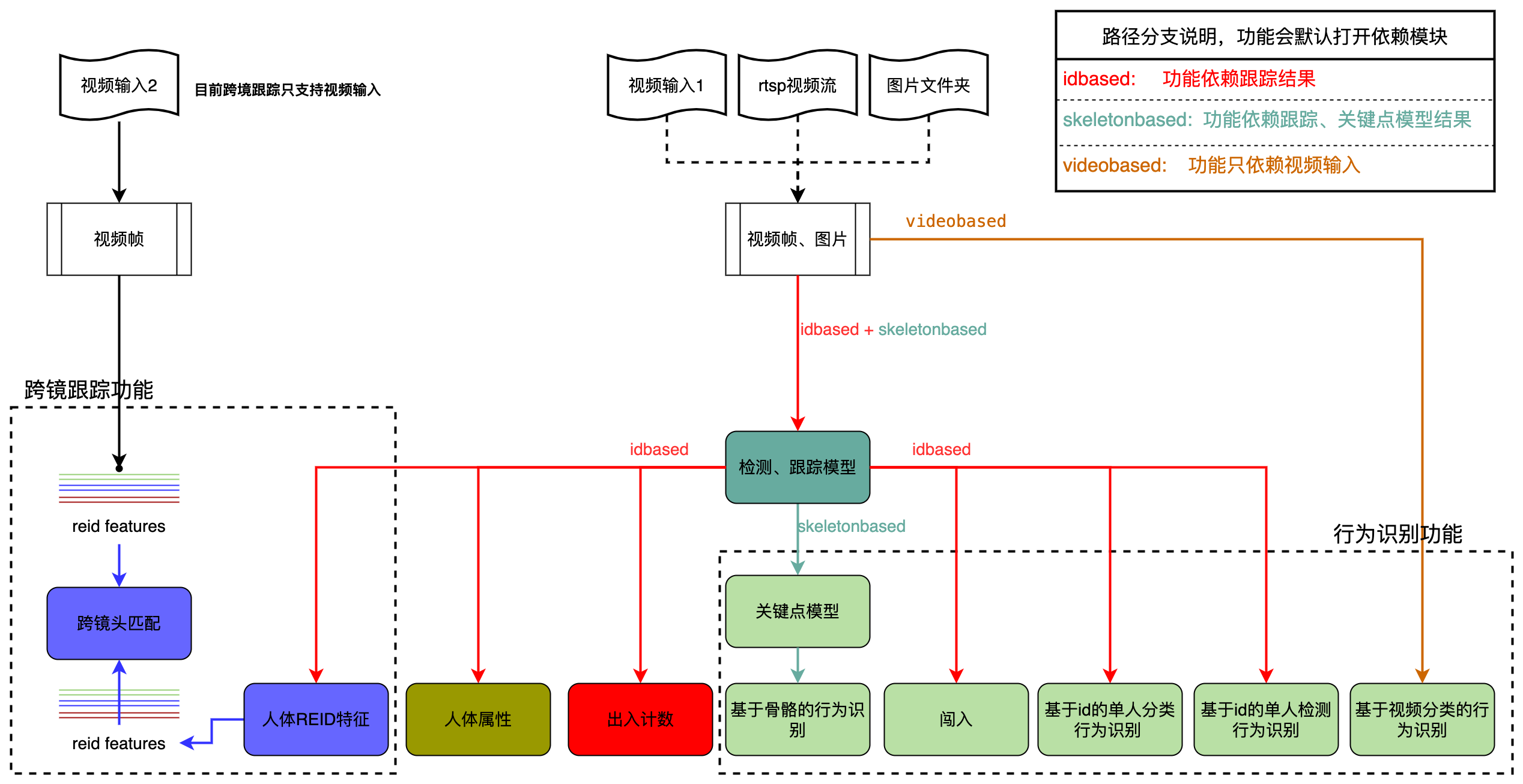

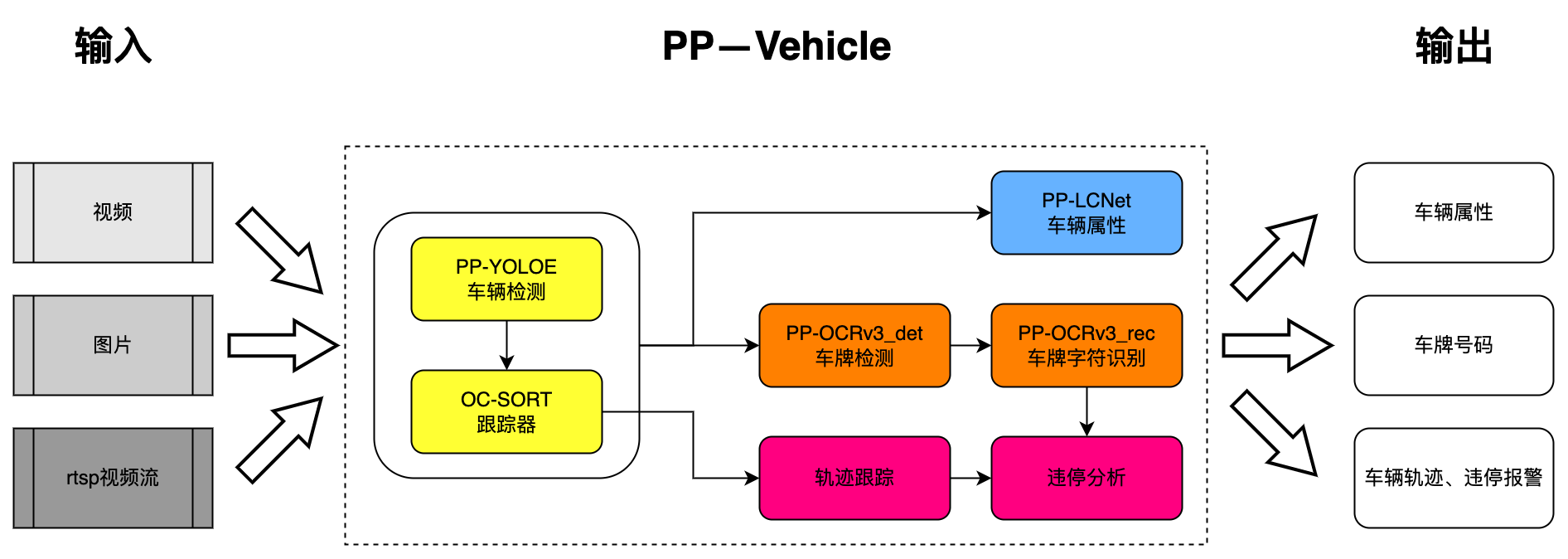

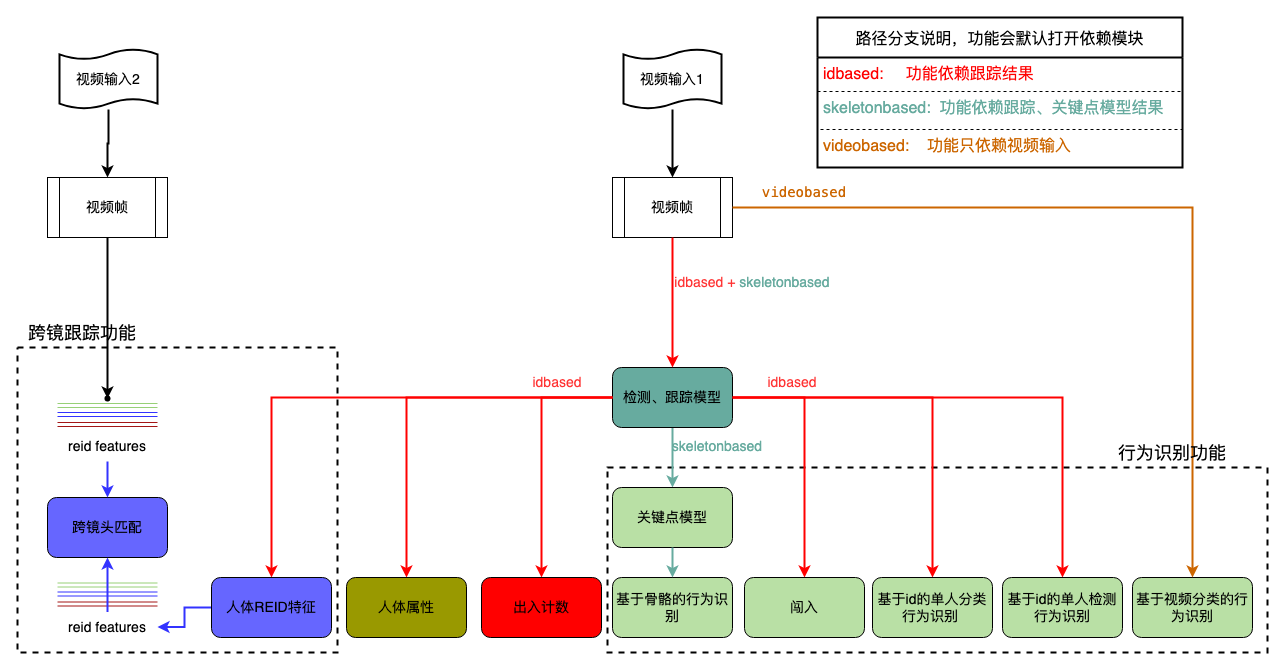

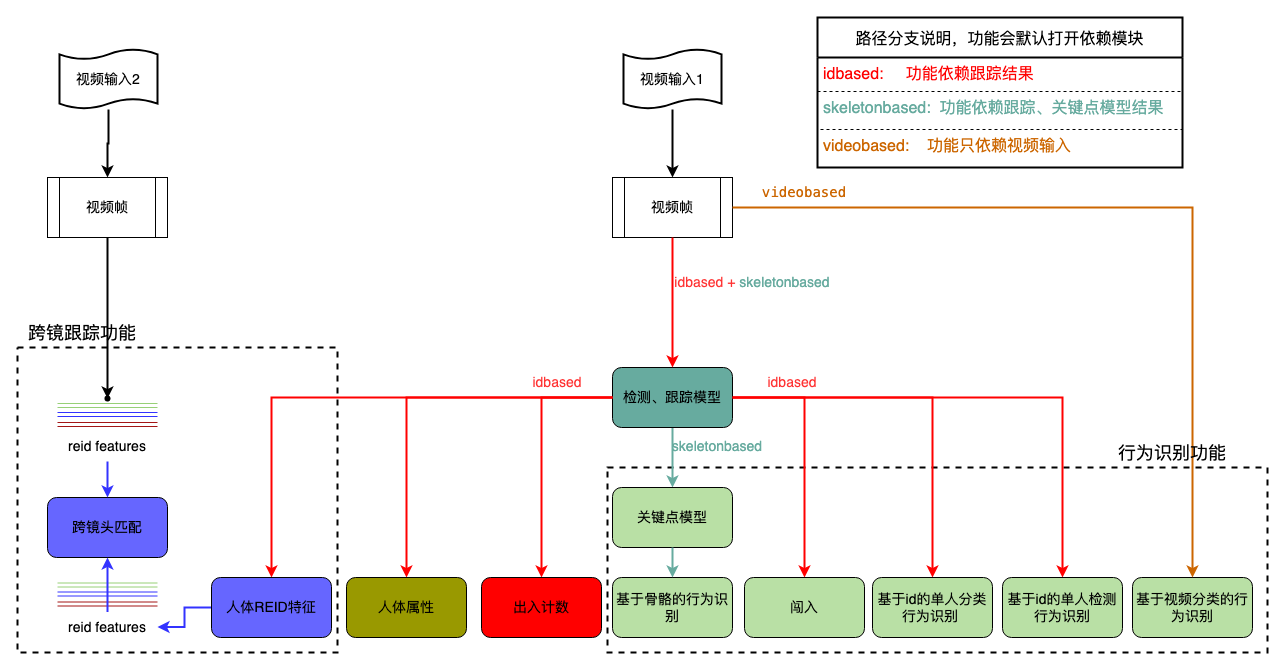

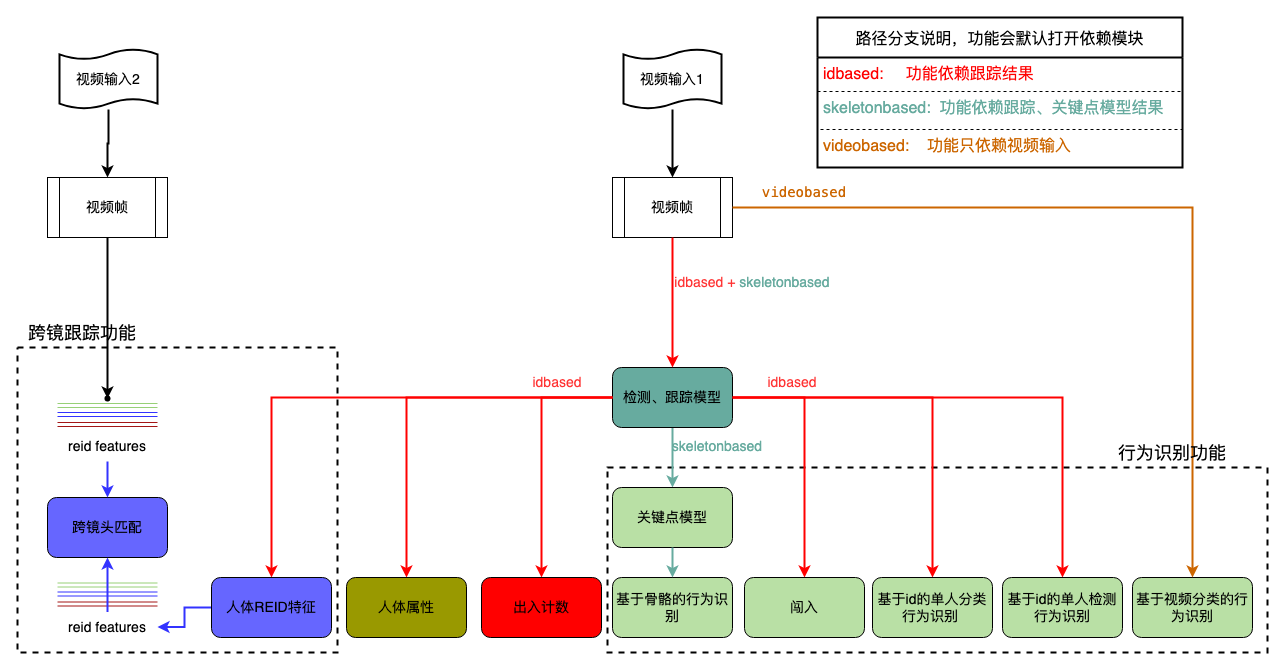

* update ppvehicle modelzoo; test=document_fix
* update pipeline pictures
* update
* fix platerec jetson trtfp16; test=document_fix
* replace all --model_dir in pipeline docs; test=document_fix

docs/images/ppvehicle.png

0 → 100644

127.1 KB