add gesture app

applications/gesture_local_app.py

0 → 100644

lib/gesture_lib/cfg/handpose.cfg

0 → 100644

lib/gesture_lib/cores/hand_pnp.py

0 → 100644

lib/gesture_lib/doc/README.md

0 → 100644

lib/gesture_lib/utils/utils.py

0 → 100644

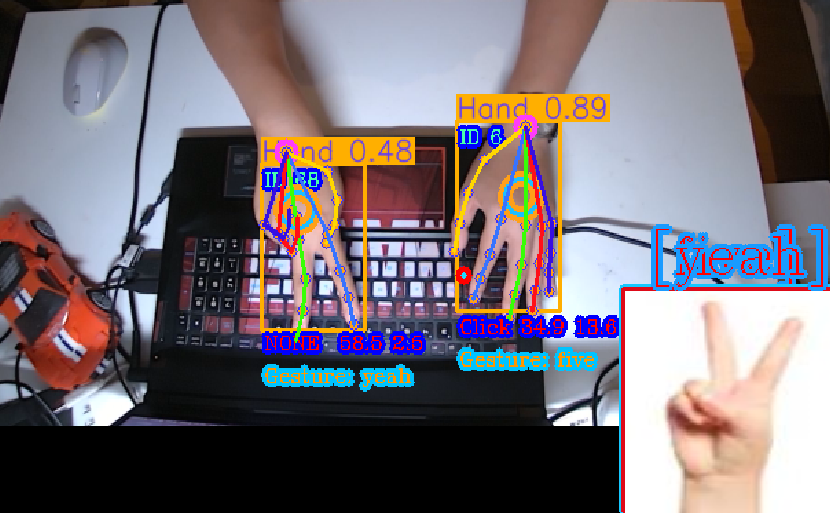

samples/gesture.png

0 → 100644

338.3 KB