fix conflict


docs/anaconda_install.md

0 → 100644

docs/client_use.md

0 → 100644

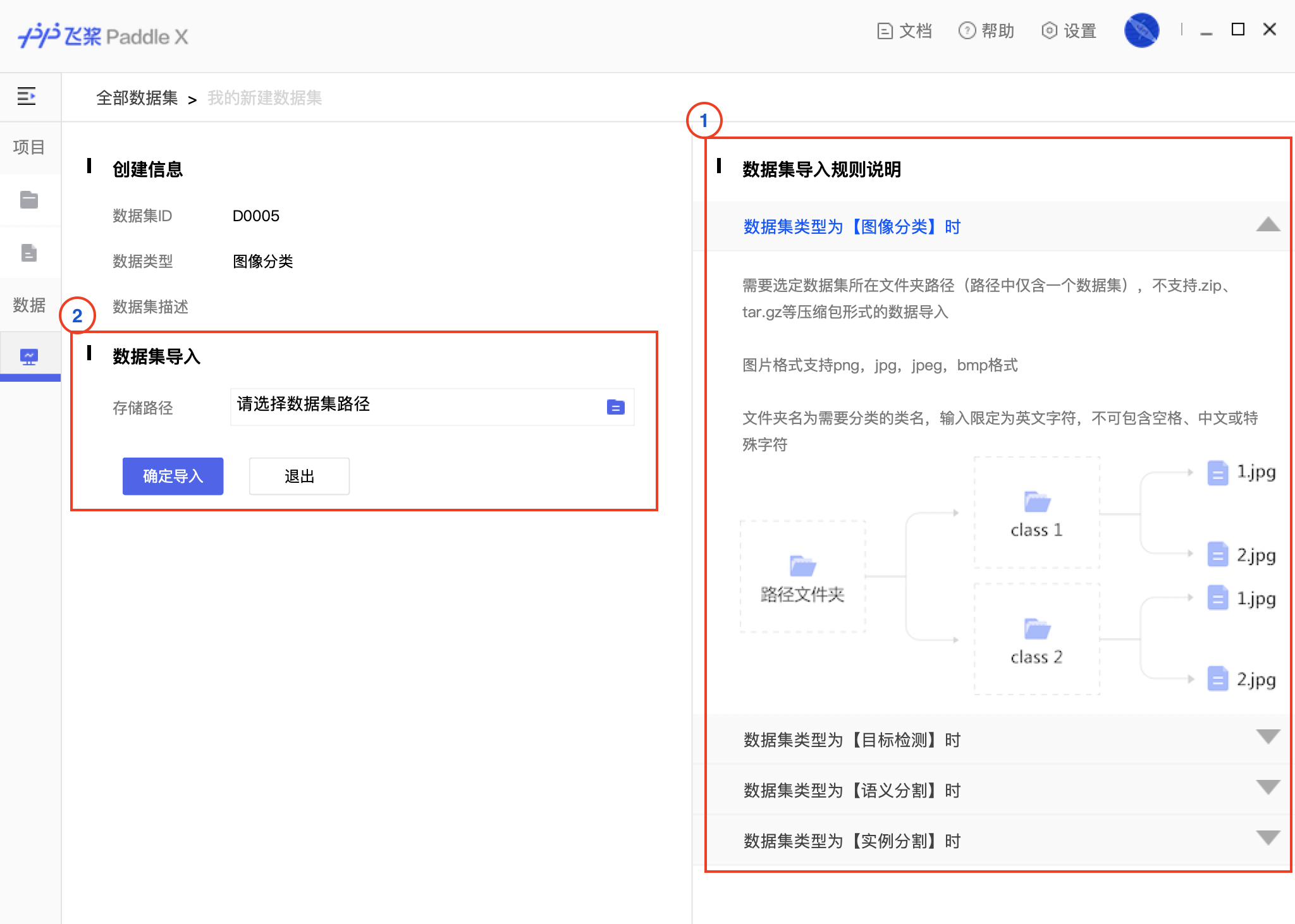

docs/images/00_loaddata.png

0 → 100644

456.5 KB

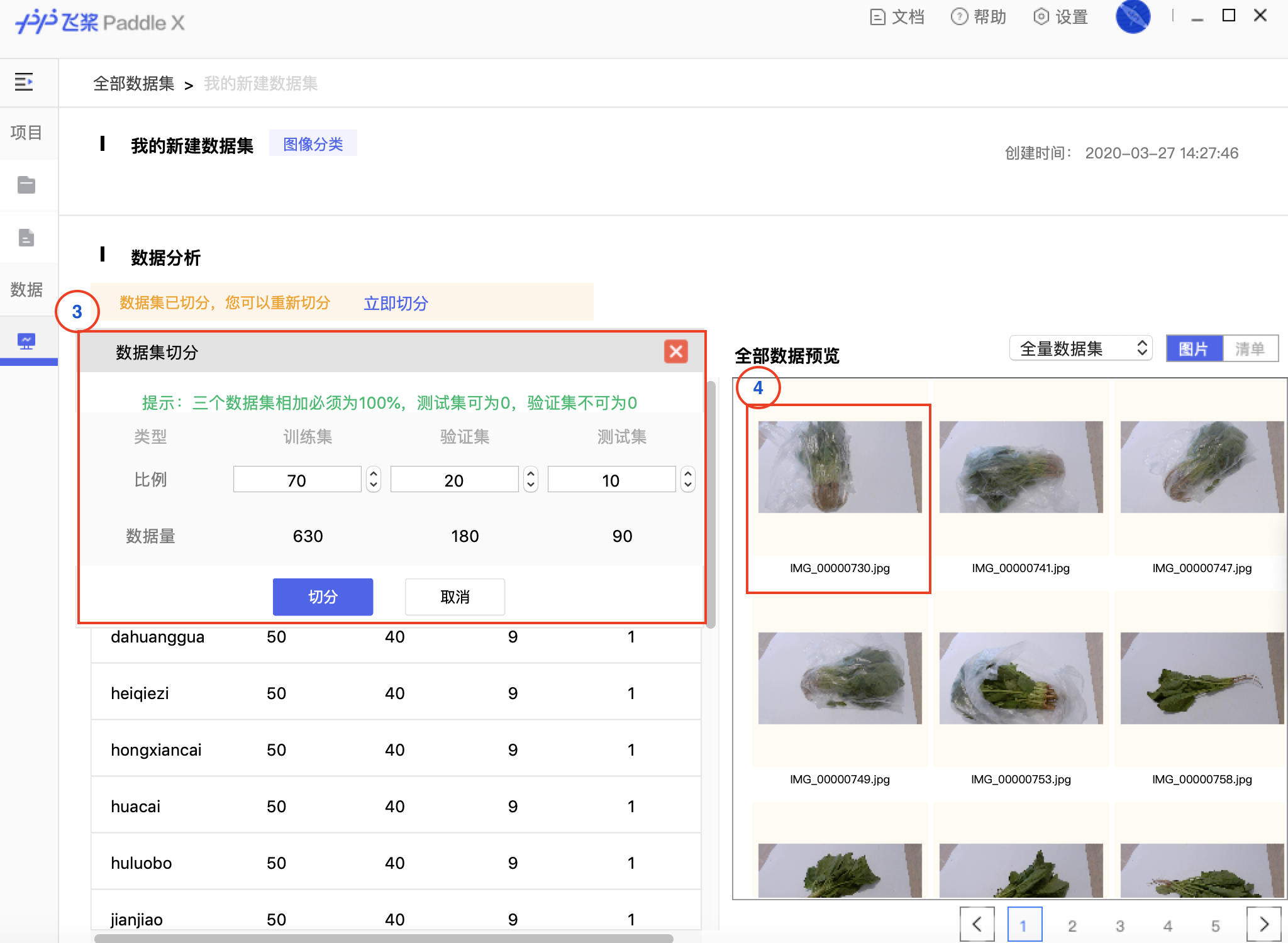

docs/images/01_datasplit.png

0 → 100644

docs/images/02_newproject.png

0 → 100644

423.2 KB

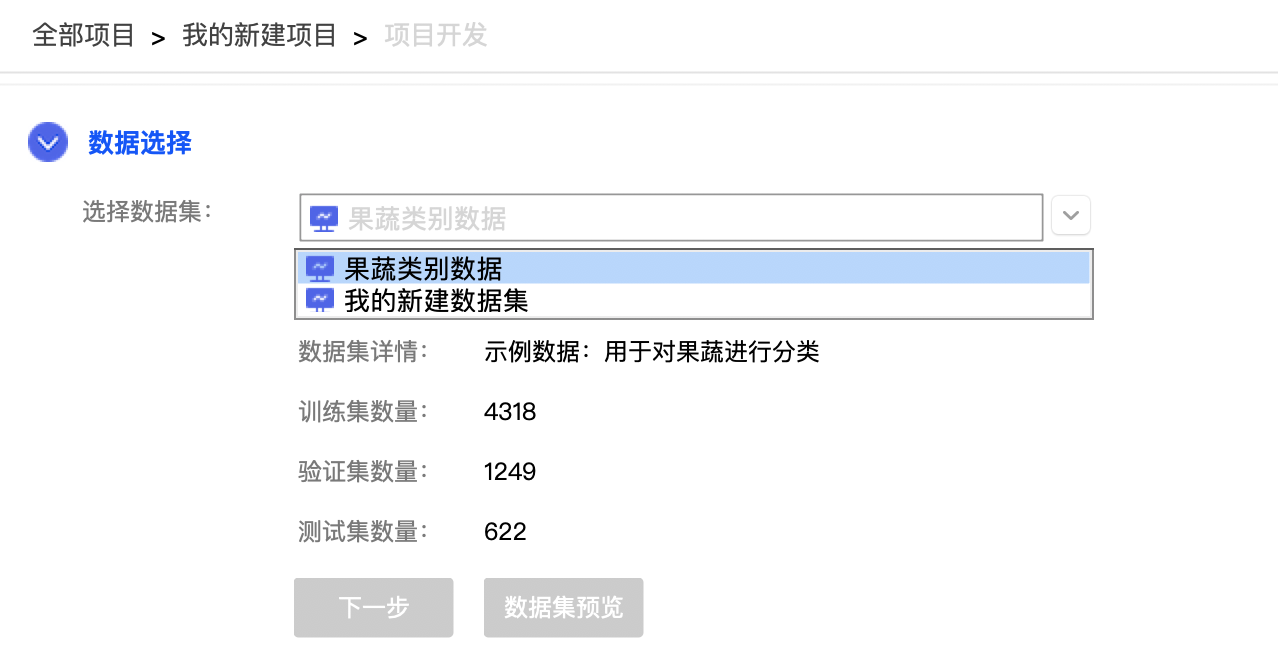

docs/images/03_choosedata.png

0 → 100644

108.7 KB

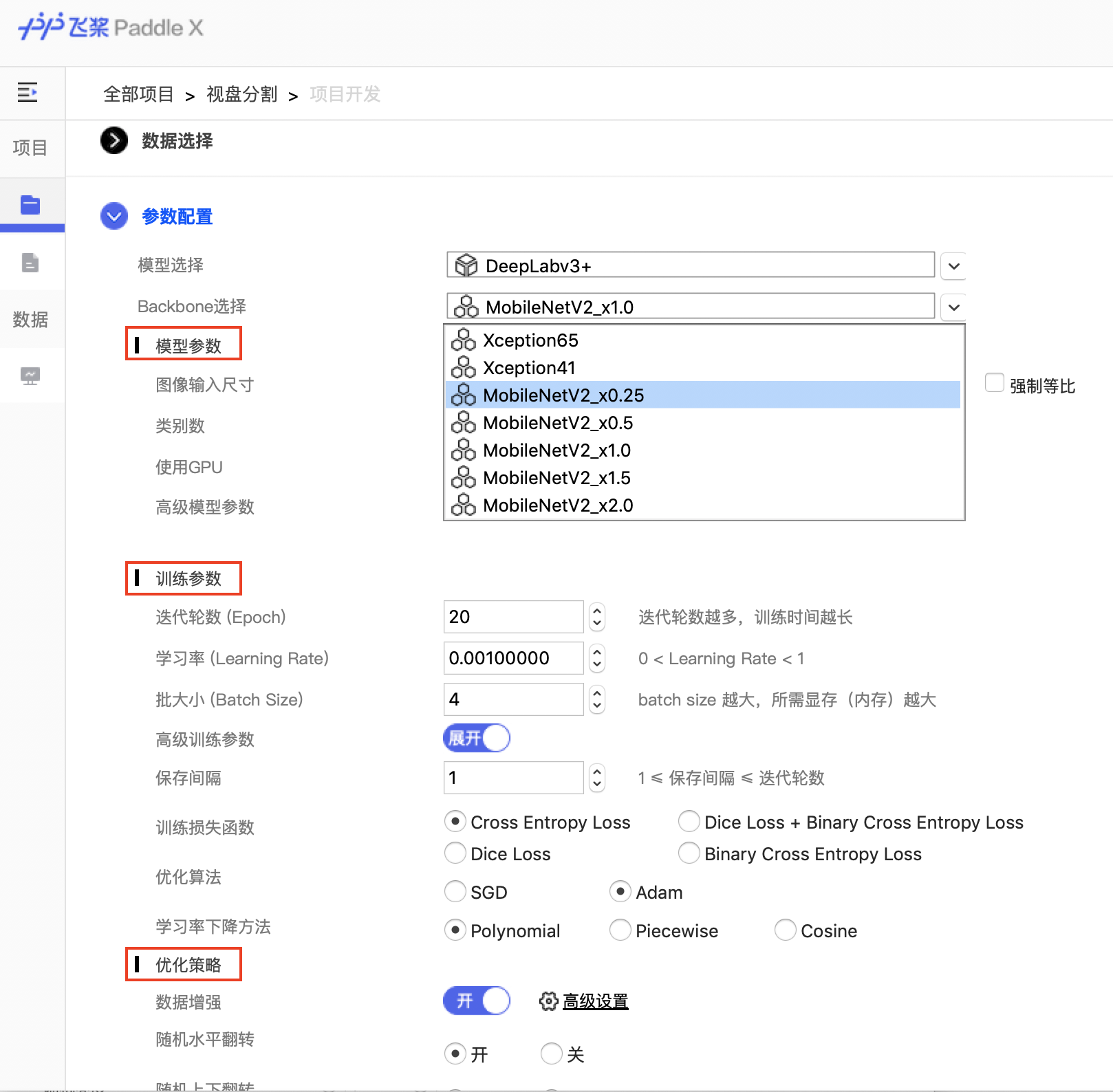

docs/images/04_parameter.png

0 → 100644

docs/images/05_train.png

0 → 100644

174.0 KB

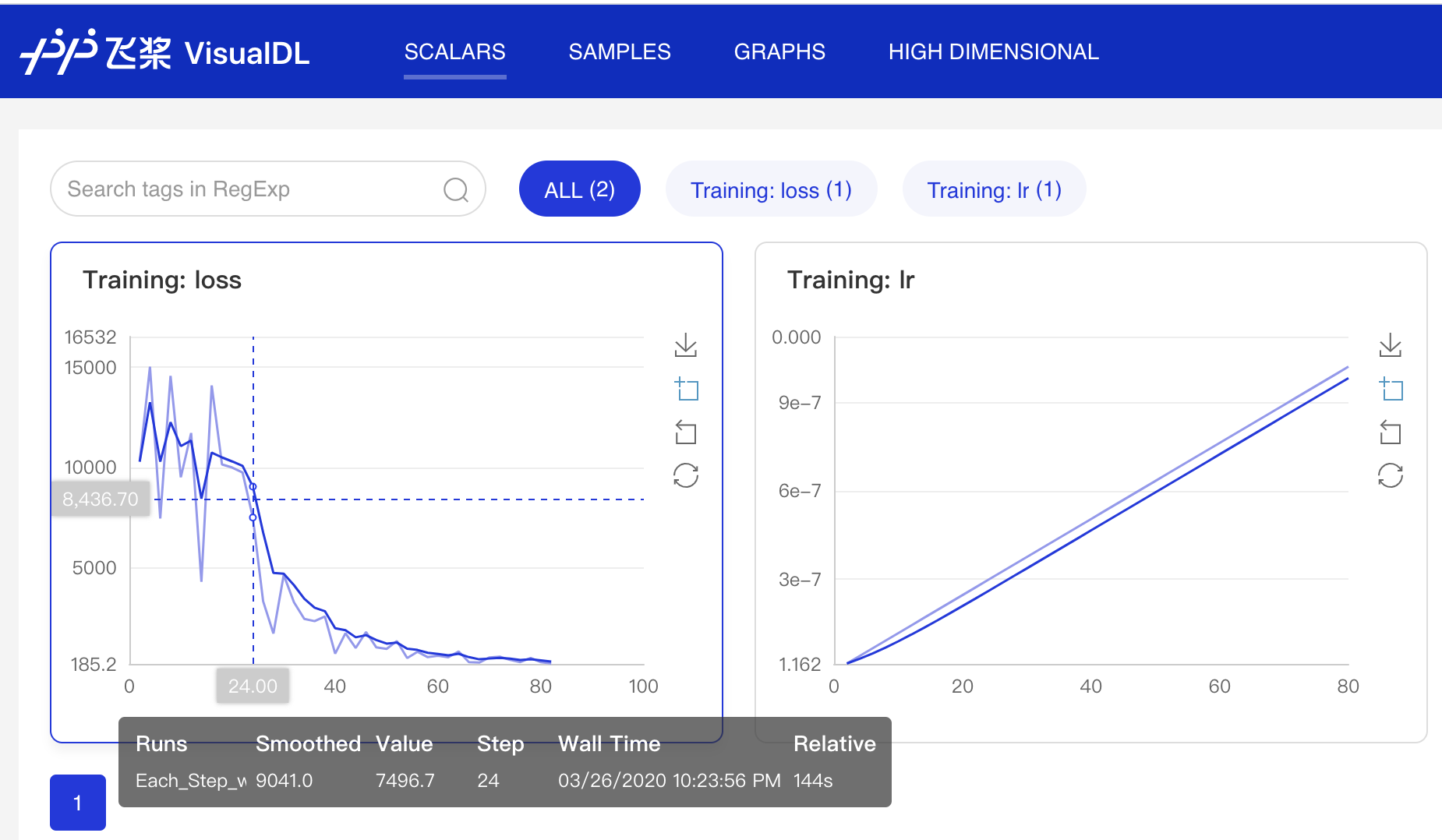

docs/images/06_VisualDL.png

0 → 100644

196.3 KB

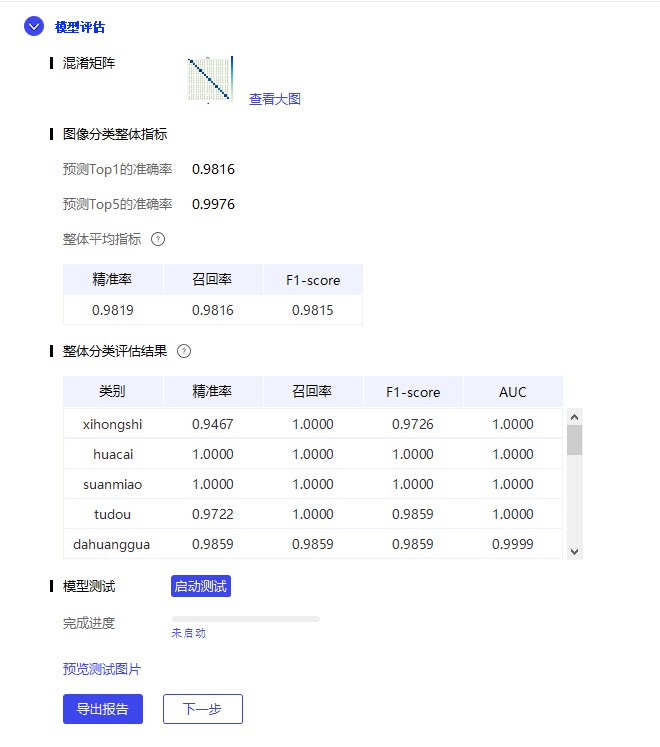

docs/images/07_evaluate.png

0 → 100644

59.7 KB

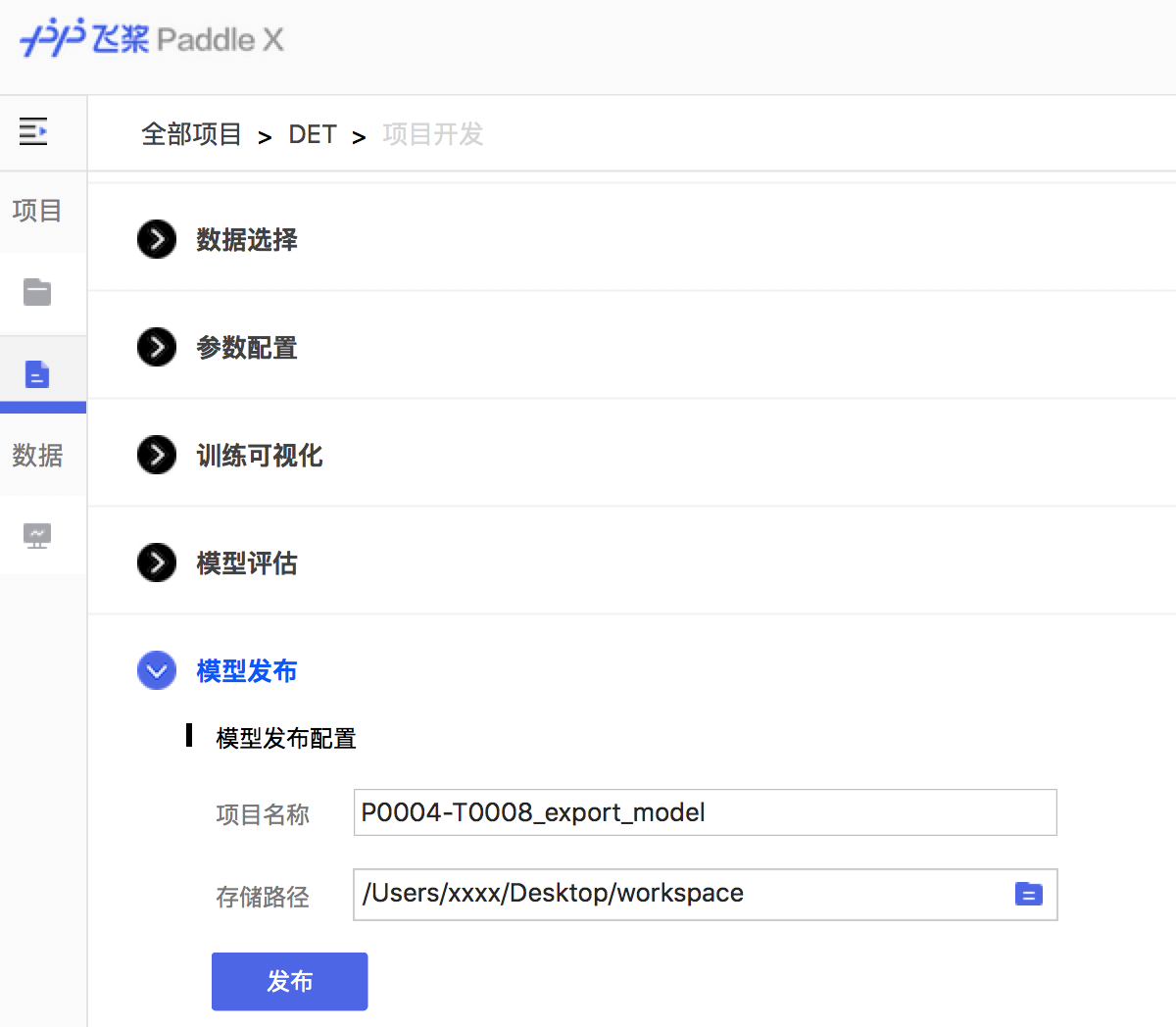

docs/images/08_deploy.png

0 → 100644

194.1 KB

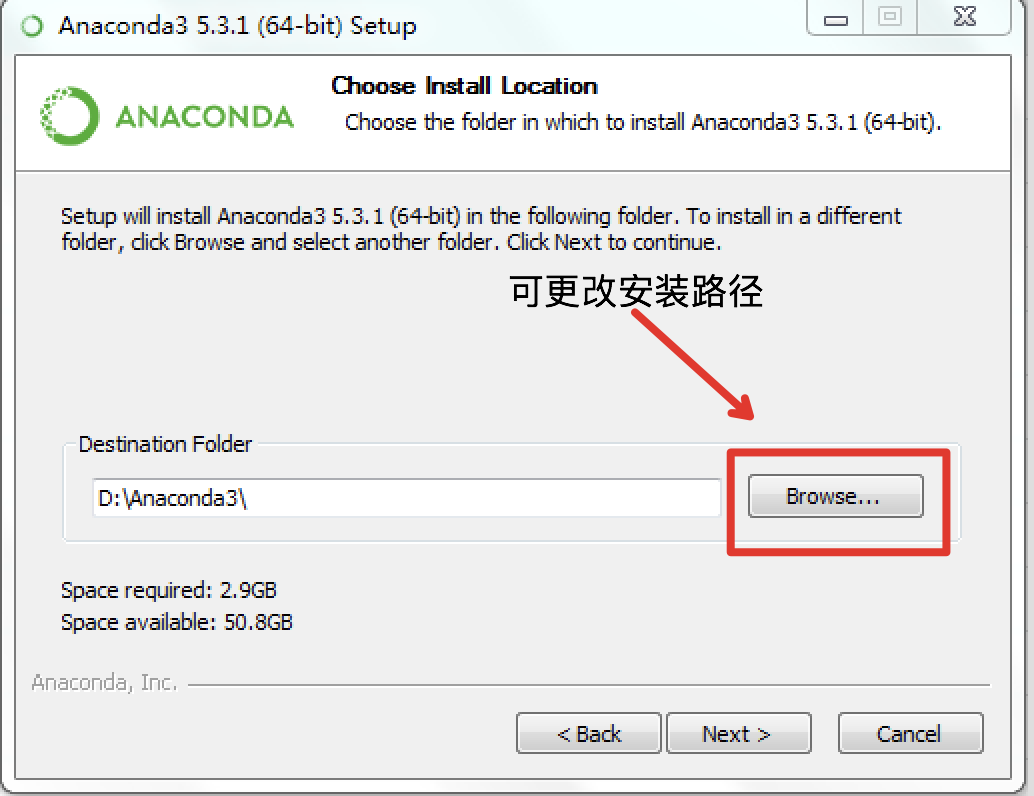

docs/images/anaconda_windows.png

0 → 100644

`@@ -6,3 +6,4 @@ cython`

| Before | After |
| --- | --- |
| pycocotools | pycocotools |
| visualdl=1.3.0 | visualdl=1.3.0 |
| paddleslim=1.0.1 | paddleslim=1.0.1 |
|  | shapely |
