OFA demo for ernie (#493)

* add ofa_ernie * add ofa_bert * add bert and update ernie

Showing

demo/ofa/bert/README.md

0 → 100644

demo/ofa/bert/run_glue_ofa.py

0 → 100644

此差异已折叠。

demo/ofa/ernie/README.md

0 → 100644

demo/ofa/ernie/ofa_ernie.py

0 → 100644

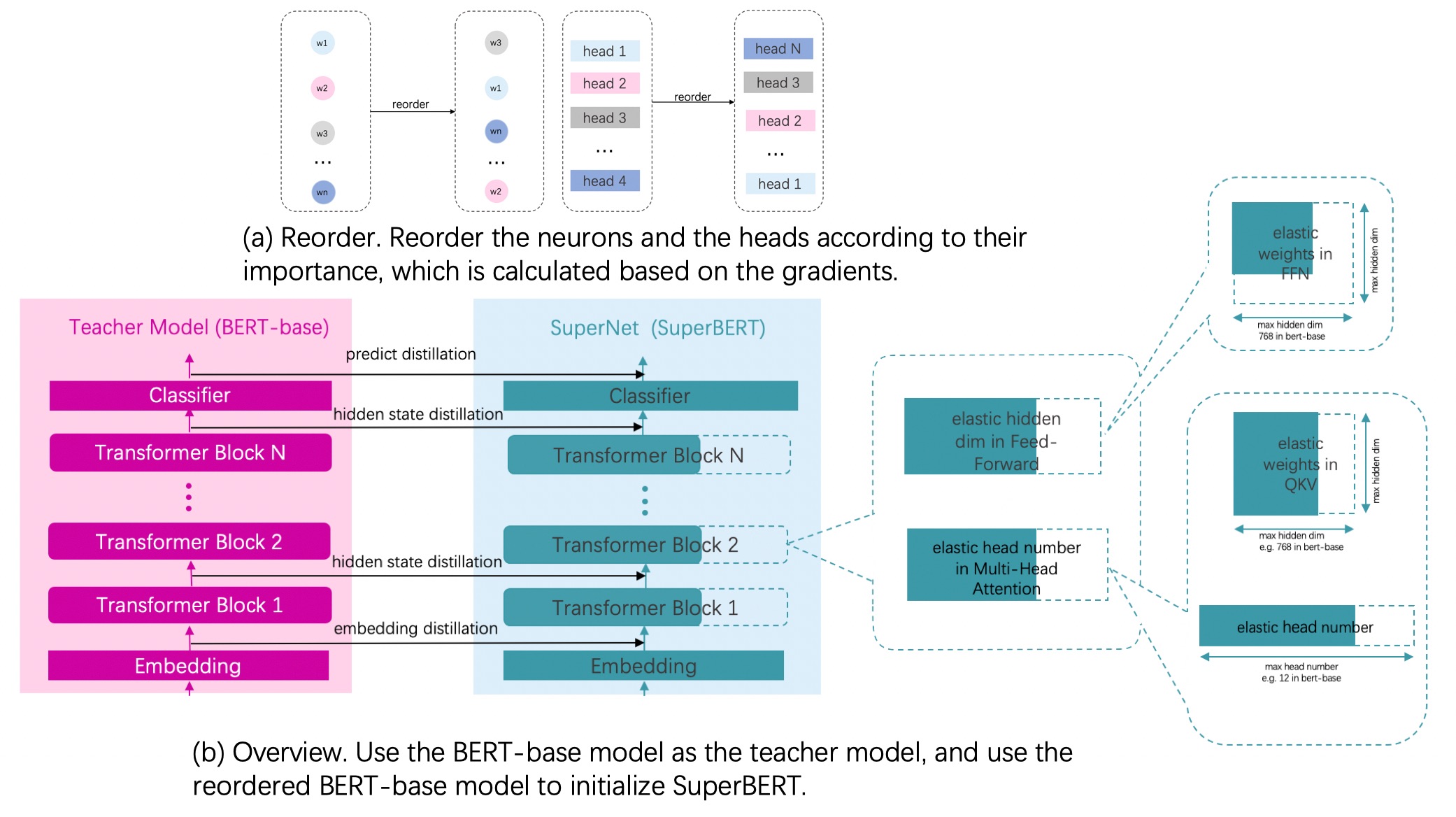

docs/images/algo/ofa_bert.jpg

0 → 100644

364.6 KB