Merge branch 'release/v0.2.0'


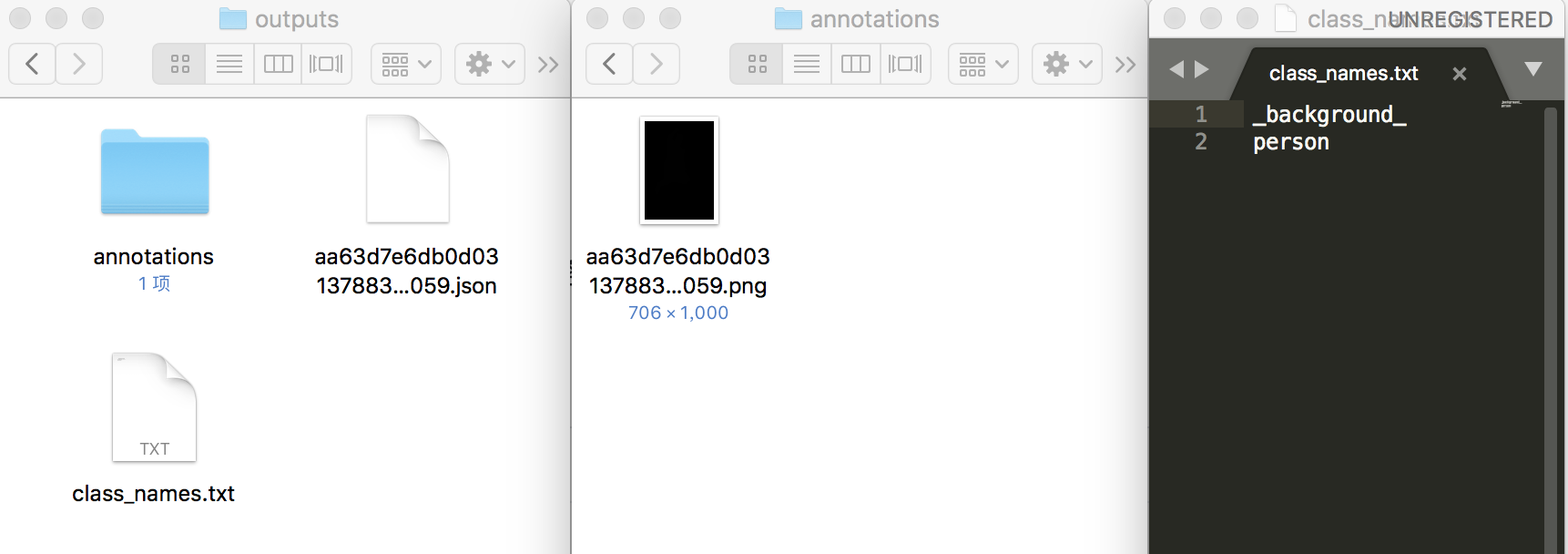


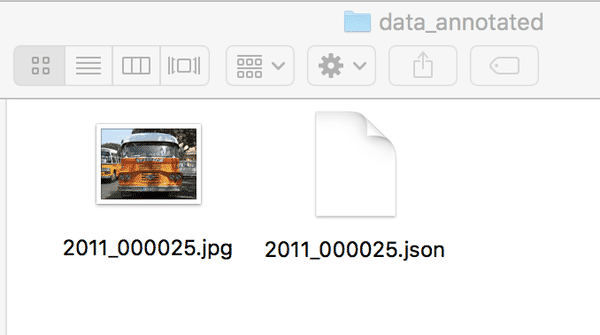


File moved

File moved

File moved

File moved
