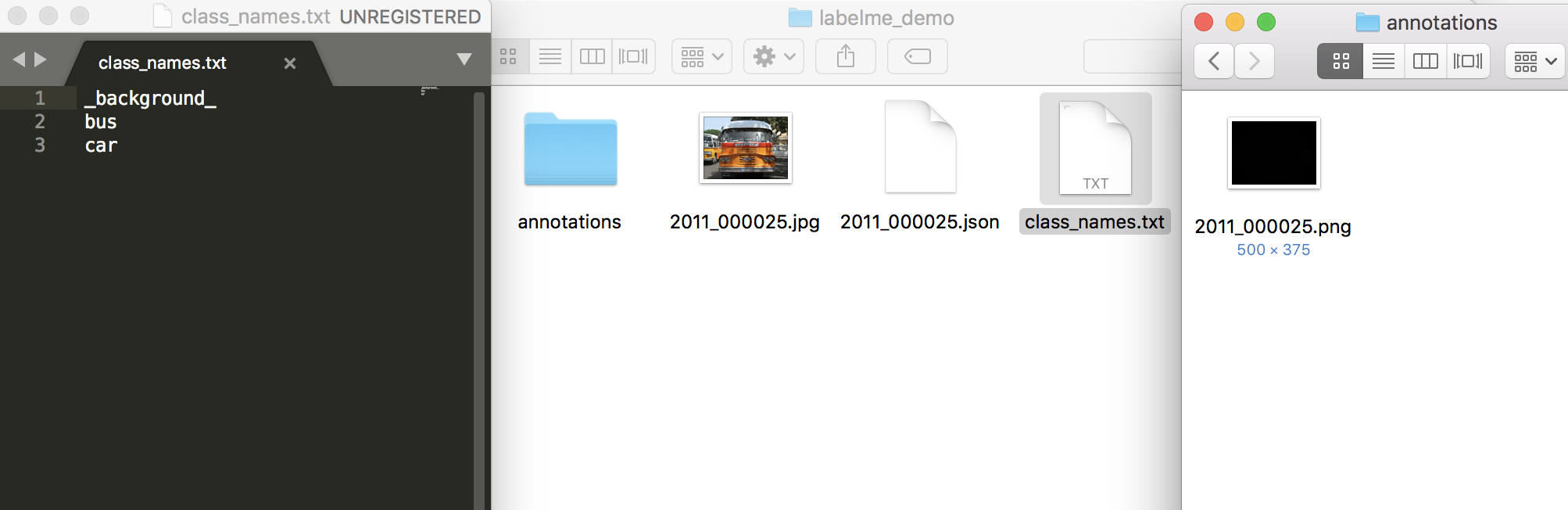

modify labelme jingling + fix label bug (#91)

* modify annotation script path

Showing

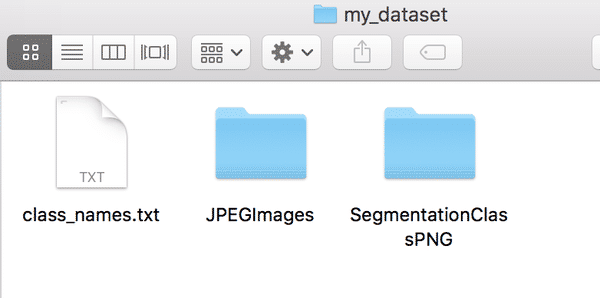

File moved

4.2 KB

File moved

File moved

1.8 KB

File moved

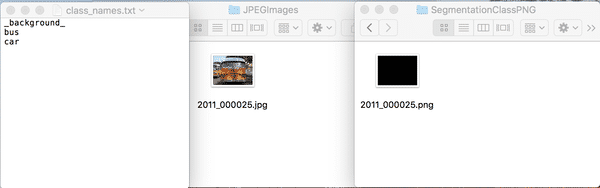

| W: | H:

| W: | H:

70.5 KB

| W: | H:

| W: | H:

| W: | H:

| W: | H:

| W: | H:

| W: | H: