First commit

Showing

configs/cityscape.yaml

0 → 100644

configs/coco.yaml

0 → 100644

configs/humanseg.yaml

0 → 100644

configs/line.yaml

0 → 100644

configs/unet_pet.yaml

0 → 100644

contrib/ACE2P/README.md

0 → 100644

contrib/ACE2P/__init__.py

0 → 100644

contrib/ACE2P/config.py

0 → 100644

contrib/ACE2P/imgs/net.jpg

0 → 100644

101.5 KB

contrib/ACE2P/imgs/result.jpg

0 → 100644

146.5 KB

contrib/ACE2P/reader.py

0 → 100644

contrib/HumanSeg/__init__.py

0 → 100644

contrib/HumanSeg/config.py

0 → 100644

contrib/README.md

0 → 100644

contrib/RoadLine/__init__.py

0 → 100644

contrib/RoadLine/config.py

0 → 100644

contrib/imgs/Human.jpg

0 → 100644

491.7 KB

contrib/imgs/HumanSeg.jpg

0 → 100644

489.3 KB

contrib/imgs/RoadLine.jpg

0 → 100644

450.3 KB

contrib/imgs/RoadLine.png

0 → 100644

3.7 KB

contrib/infer.py

0 → 100644

contrib/utils/__init__.py

0 → 100644

contrib/utils/palette.py

0 → 100644

contrib/utils/util.py

0 → 100644

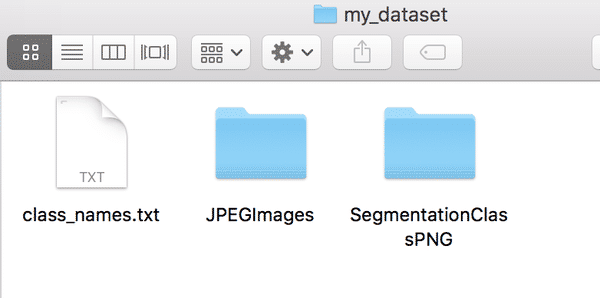

docs/annotation/README.md

0 → 100644

133.8 KB

docs/annotation/labelme2seg.py

0 → 100755

docs/benchmark.md

0 → 100644

docs/config.md

0 → 100644

docs/configs/.gitkeep

0 → 100644

docs/configs/basic_group.md

0 → 100644

docs/configs/dataloader_group.md

0 → 100644

docs/configs/dataset_group.md

0 → 100644

docs/configs/freeze_group.md

0 → 100644

docs/configs/model_group.md

0 → 100644

docs/configs/model_icnet_group.md

0 → 100644

docs/configs/model_unet_group.md

0 → 100644

docs/configs/solver_group.md

0 → 100644

docs/configs/test_group.md

0 → 100644

docs/configs/train_group.md

0 → 100644

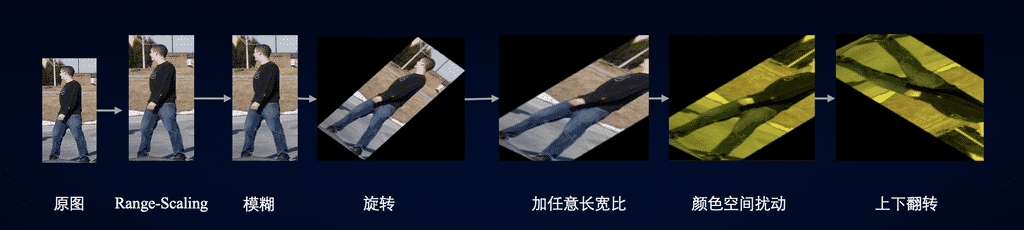

docs/data_aug.md

0 → 100644

docs/data_prepare.md

0 → 100644

docs/deploy.md

0 → 100644

docs/imgs/annotation/image-1.png

0 → 100644

8.3 KB

docs/imgs/annotation/image-2.png

0 → 100644

74.8 KB

docs/imgs/annotation/image-3.png

0 → 100644

81.6 KB

78.0 KB

75.8 KB

docs/imgs/annotation/image-5.png

0 → 100644

15.0 KB

docs/imgs/annotation/image-6.png

0 → 100644

15.2 KB

docs/imgs/annotation/image-7.png

0 → 100644

10.1 KB

docs/imgs/aug_method.png

0 → 100644

91.2 KB

18.4 KB

docs/imgs/data_aug_example.png

0 → 100644

44.8 KB

docs/imgs/data_aug_flow.png

0 → 100644

153.4 KB

docs/imgs/deeplabv3p.png

0 → 100644

269.2 KB

docs/imgs/file_list.png

0 → 100644

88.2 KB

docs/imgs/gn.png

0 → 100644

126.9 KB

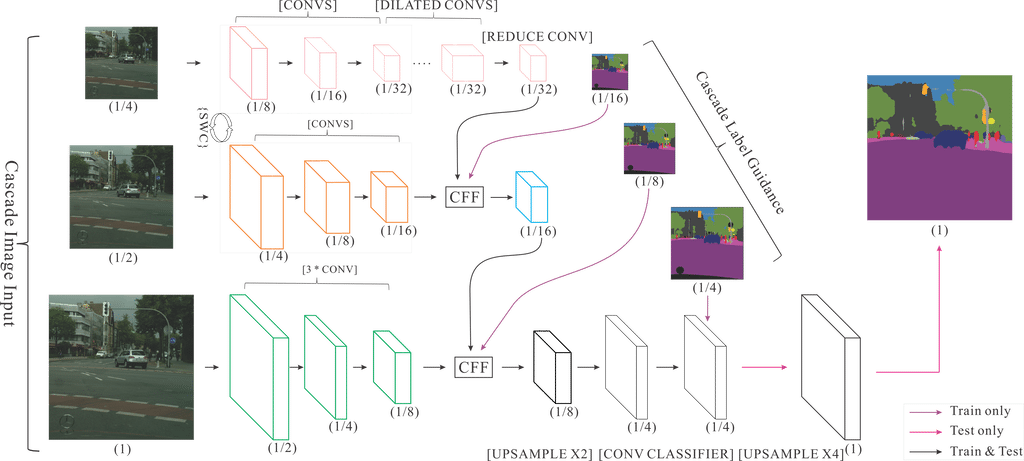

docs/imgs/icnet.png

0 → 100644

68.9 KB

23.0 KB

docs/imgs/poly_decay_example.png

0 → 100644

135.7 KB

docs/imgs/rangescale.png

0 → 100644

13.1 KB

docs/imgs/tensorboard_image.JPG

0 → 100644

120.1 KB

docs/imgs/tensorboard_scalar.JPG

0 → 100644

193.4 KB

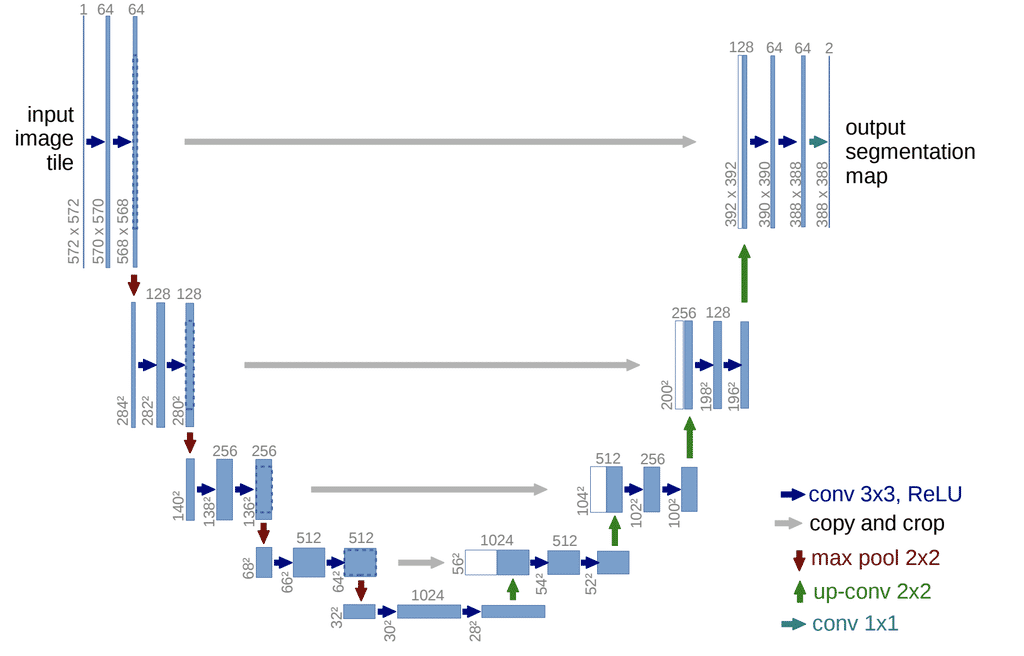

docs/imgs/unet.png

0 → 100644

35.7 KB

docs/installation.md

0 → 100644

docs/model_zoo.md

0 → 100644

docs/models.md

0 → 100644

docs/usage.md

0 → 100644

inference/CMakeLists.txt

0 → 100644

inference/CMakeSettings.json

0 → 100644

inference/INSTALL.md

0 → 100644

inference/LICENSE

0 → 100644

inference/README.md

0 → 100644

inference/conf/humanseg.yaml

0 → 100644

inference/demo.cpp

0 → 100644

inference/images/humanseg/1.jpg

0 → 100644

136.5 KB

inference/images/humanseg/10.jpg

0 → 100644

105.1 KB

inference/images/humanseg/11.jpg

0 → 100644

163.5 KB

inference/images/humanseg/12.jpg

0 → 100644

53.3 KB

inference/images/humanseg/13.jpg

0 → 100644

120.9 KB

inference/images/humanseg/14.jpg

0 → 100644

146.9 KB

inference/images/humanseg/2.jpg

0 → 100644

132.9 KB

inference/images/humanseg/3.jpg

0 → 100644

98.1 KB

inference/images/humanseg/4.jpg

0 → 100644

124.1 KB

inference/images/humanseg/5.jpg

0 → 100644

159.8 KB

inference/images/humanseg/6.jpg

0 → 100644

172.9 KB

inference/images/humanseg/7.jpg

0 → 100644

143.9 KB

inference/images/humanseg/8.jpg

0 → 100644

157.3 KB

inference/images/humanseg/9.jpg

0 → 100644

120.7 KB

491.7 KB

369.3 KB

此差异已折叠。

此差异已折叠。

此差异已折叠。

inference/tools/visualize.py

0 → 100644

此差异已折叠。

inference/utils/seg_conf_parser.h

0 → 100644

此差异已折叠。

inference/utils/utils.h

0 → 100644

此差异已折叠。

pdseg/__init__.py

0 → 100644

此差异已折叠。

pdseg/check.py

0 → 100644

此差异已折叠。

pdseg/data_aug.py

0 → 100644

此差异已折叠。

pdseg/data_utils.py

0 → 100644

此差异已折叠。

pdseg/eval.py

0 → 100644

此差异已折叠。

pdseg/export_model.py

0 → 100644

此差异已折叠。

pdseg/loss.py

0 → 100644

此差异已折叠。

pdseg/metrics.py

0 → 100644

此差异已折叠。

pdseg/models/__init__.py

0 → 100644

此差异已折叠。

pdseg/models/backbone/__init__.py

0 → 100644

此差异已折叠。

pdseg/models/backbone/resnet.py

0 → 100644

此差异已折叠。

pdseg/models/backbone/xception.py

0 → 100644

此差异已折叠。

pdseg/models/libs/__init__.py

0 → 100644

pdseg/models/libs/model_libs.py

0 → 100644

此差异已折叠。

pdseg/models/model_builder.py

0 → 100644

此差异已折叠。

pdseg/models/modeling/__init__.py

0 → 100644

pdseg/models/modeling/deeplab.py

0 → 100644

此差异已折叠。

pdseg/models/modeling/icnet.py

0 → 100644

此差异已折叠。

pdseg/models/modeling/unet.py

0 → 100644

此差异已折叠。

pdseg/reader.py

0 → 100644

此差异已折叠。

pdseg/solver.py

0 → 100644

此差异已折叠。

pdseg/train.py

0 → 100644

此差异已折叠。

pdseg/utils/__init__.py

0 → 100644

pdseg/utils/collect.py

0 → 100644

此差异已折叠。

pdseg/utils/config.py

0 → 100644

此差异已折叠。

pdseg/utils/fp16_utils.py

0 → 100644

此差异已折叠。

pdseg/utils/timer.py

0 → 100644

此差异已折叠。

pdseg/vis.py

0 → 100644

此差异已折叠。

requirements.txt

0 → 100644

此差异已折叠。

serving/COMPILE_GUIDE.md

0 → 100644

此差异已折叠。

serving/README.md

0 → 100644

此差异已折叠。

serving/imgs/GF1_PMS1_sino0_0.png

0 → 100644

此差异已折叠。

serving/imgs/GF1_PMS1_sino0_1.png

0 → 100644

此差异已折叠。

serving/imgs/GF1_PMS1_sino0_2.png

0 → 100644

此差异已折叠。

serving/imgs/GF1_PMS1_sino0_3.png

0 → 100644

此差异已折叠。

serving/imgs/GF1_PMS1_sino0_4.png

0 → 100644

此差异已折叠。

serving/imgs/GF1_PMS1_sino0_5.png

0 → 100644

此差异已折叠。

serving/imgs/GF1_PMS1_sino0_6.png

0 → 100644

此差异已折叠。

serving/imgs/GF1_PMS1_sino0_7.png

0 → 100644

此差异已折叠。

serving/imgs/GF1_PMS1_sino0_8.png

0 → 100644

此差异已折叠。

serving/imgs/GF1_PMS1_sino0_9.png

0 → 100644

此差异已折叠。

serving/requirements.txt

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

serving/seg-serving/op/seg_conf.h

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

serving/tools/image_seg_client.py

0 → 100644

此差异已折叠。

serving/tools/images/1.jpg

0 → 100755

此差异已折叠。

serving/tools/images/2.jpg

0 → 100755

此差异已折叠。

serving/tools/images/3.jpg

0 → 100755

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

test/configs/unet_coco.yaml

0 → 100644

此差异已折叠。

test/configs/unet_pet.yaml

0 → 100644

此差异已折叠。

test/local_test_cityscapes.py

0 → 100644

此差异已折叠。

test/local_test_pet.py

0 → 100644

此差异已折叠。

test/test_utils.py

0 → 100644

此差异已折叠。