Merge branch 'master' of https://github.com/PaddlePaddle/PaddleSeg

track official

dataset/README.md

0 → 100644

dataset/convert_voc2012.py

0 → 100644

docs/annotation/README.md

Deleted

100644 → 0

docs/imgs/deepglobe.png

0 → 100644

659.8 KB

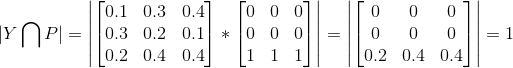

docs/imgs/dice1.png

0 → 100644

7.9 KB

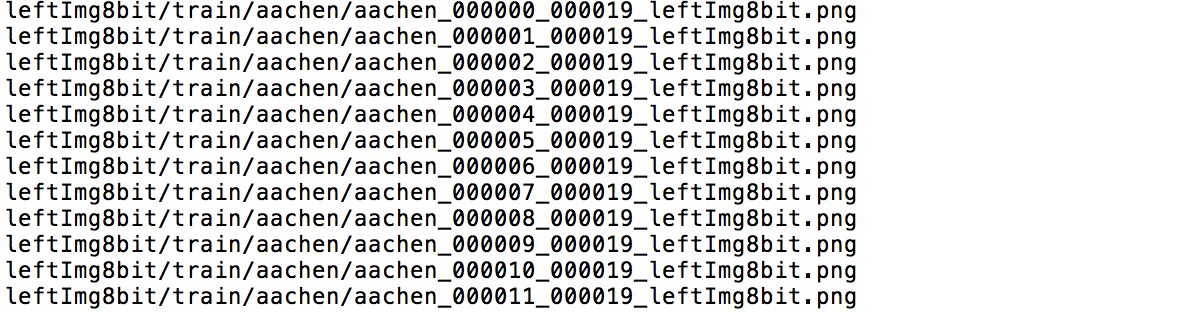

docs/imgs/file_list2.png

0 → 100644

90.6 KB

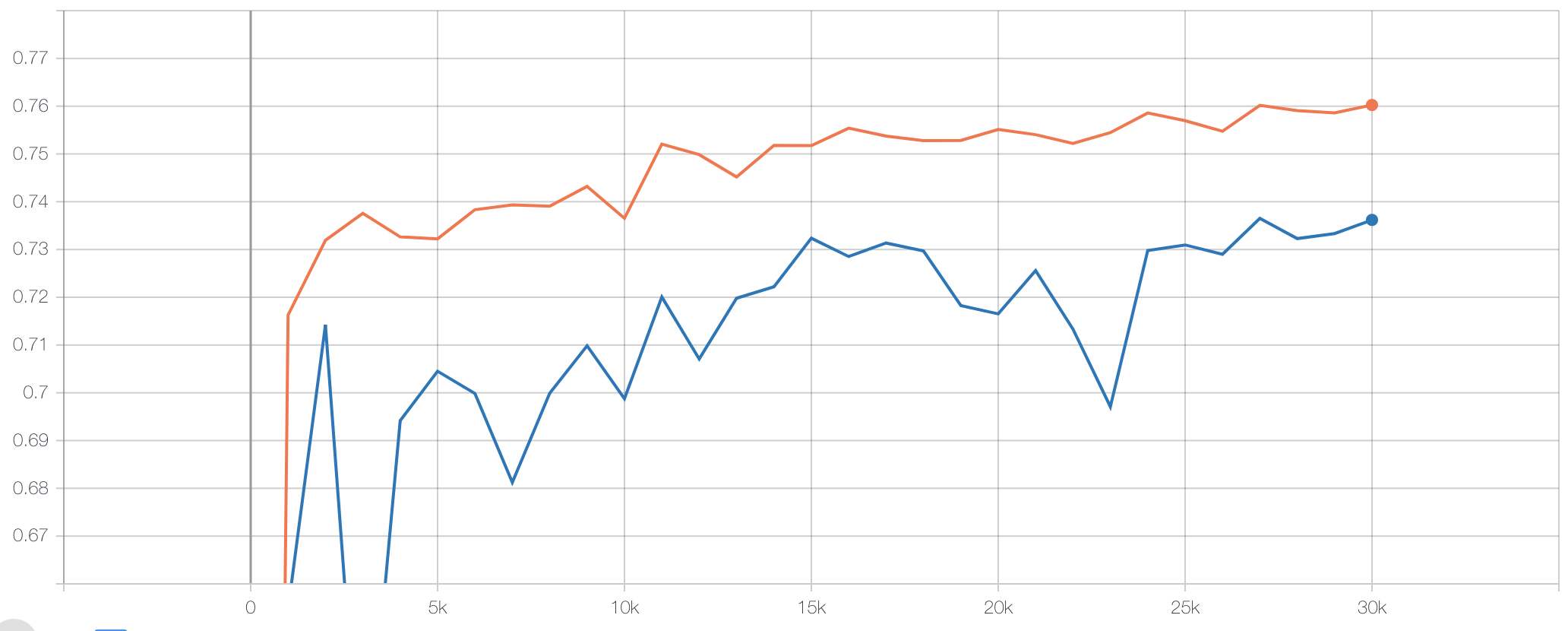

docs/imgs/loss_comparison.png

0 → 100644

123.5 KB

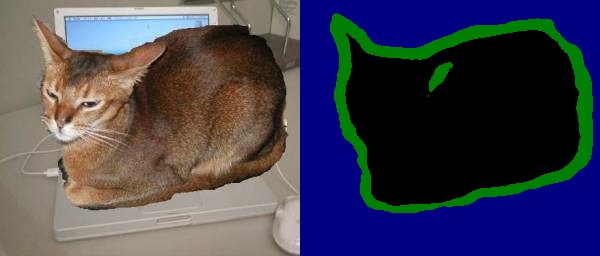

docs/imgs/usage_vis_demo.jpg

0 → 100644

65.0 KB

docs/imgs/usage_vis_demo2.jpg

0 → 100644

33.4 KB

docs/imgs/usage_vis_demo3.jpg

0 → 100644

91.4 KB

docs/loss_select.md

0 → 100644

This diff is collapsed.

pdseg/tools/__init__.py

0 → 100644

pdseg/utils/dist_utils.py

0 → 100755