pull develop

Showing

configs/hrnet_w18_pet.yaml

0 → 100644

deploy/README.md

0 → 100644

File moved

434.3 KB

File moved

File moved

File moved

File moved

deploy/lite/README.md

0 → 100644

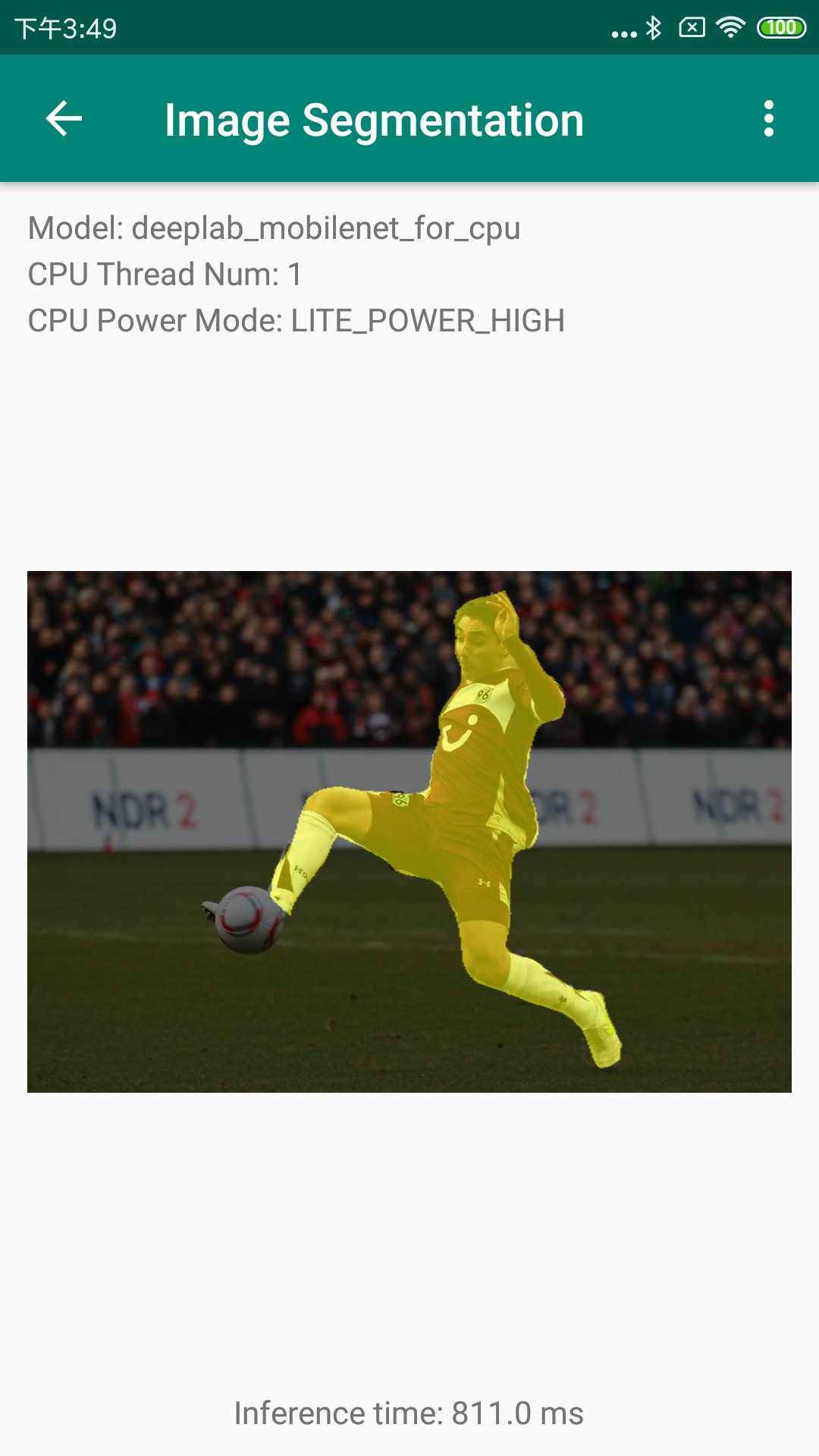

deploy/lite/example/human_1.png

0 → 100644

606.3 KB

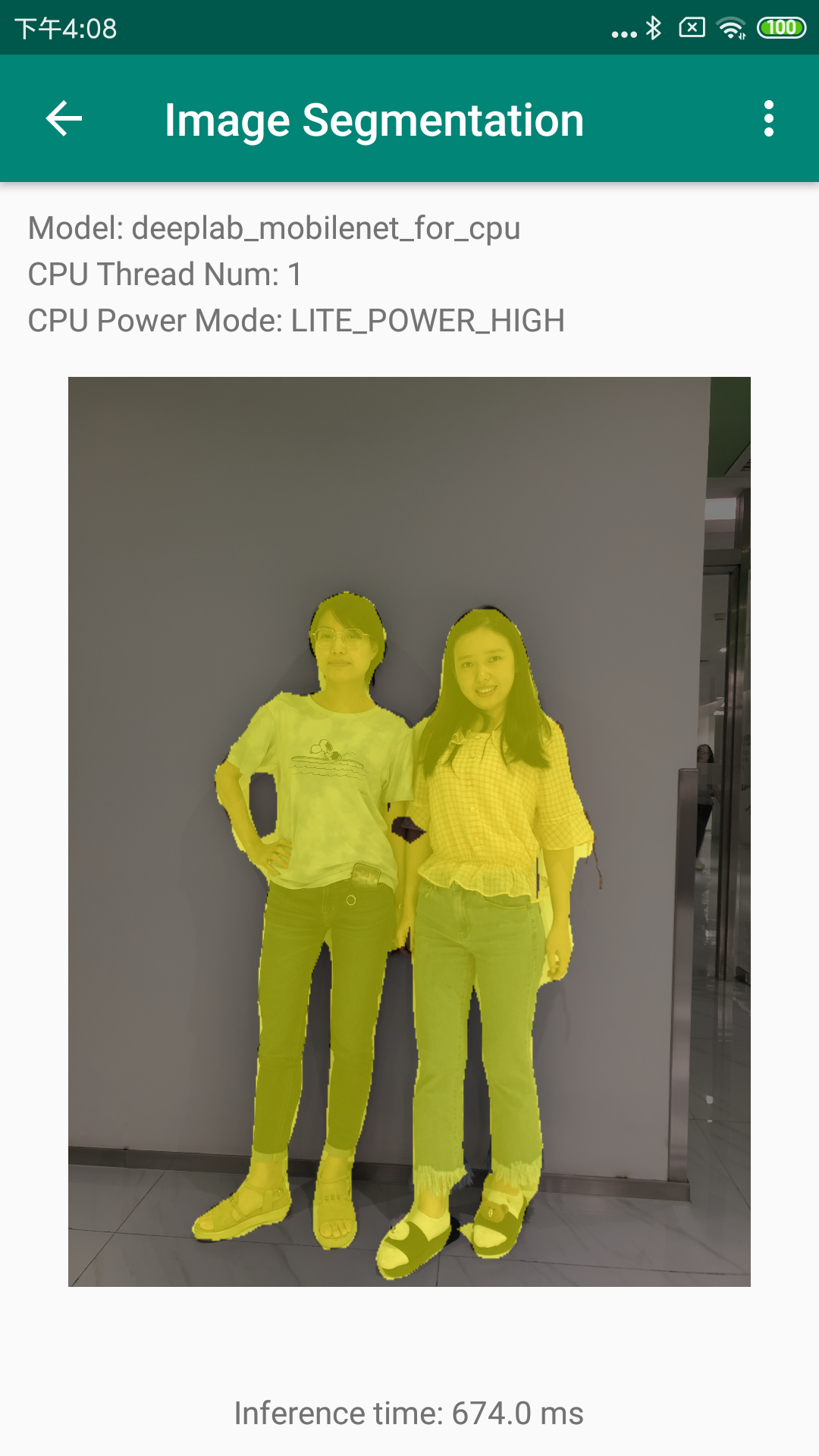

deploy/lite/example/human_2.png

0 → 100644

1002.6 KB

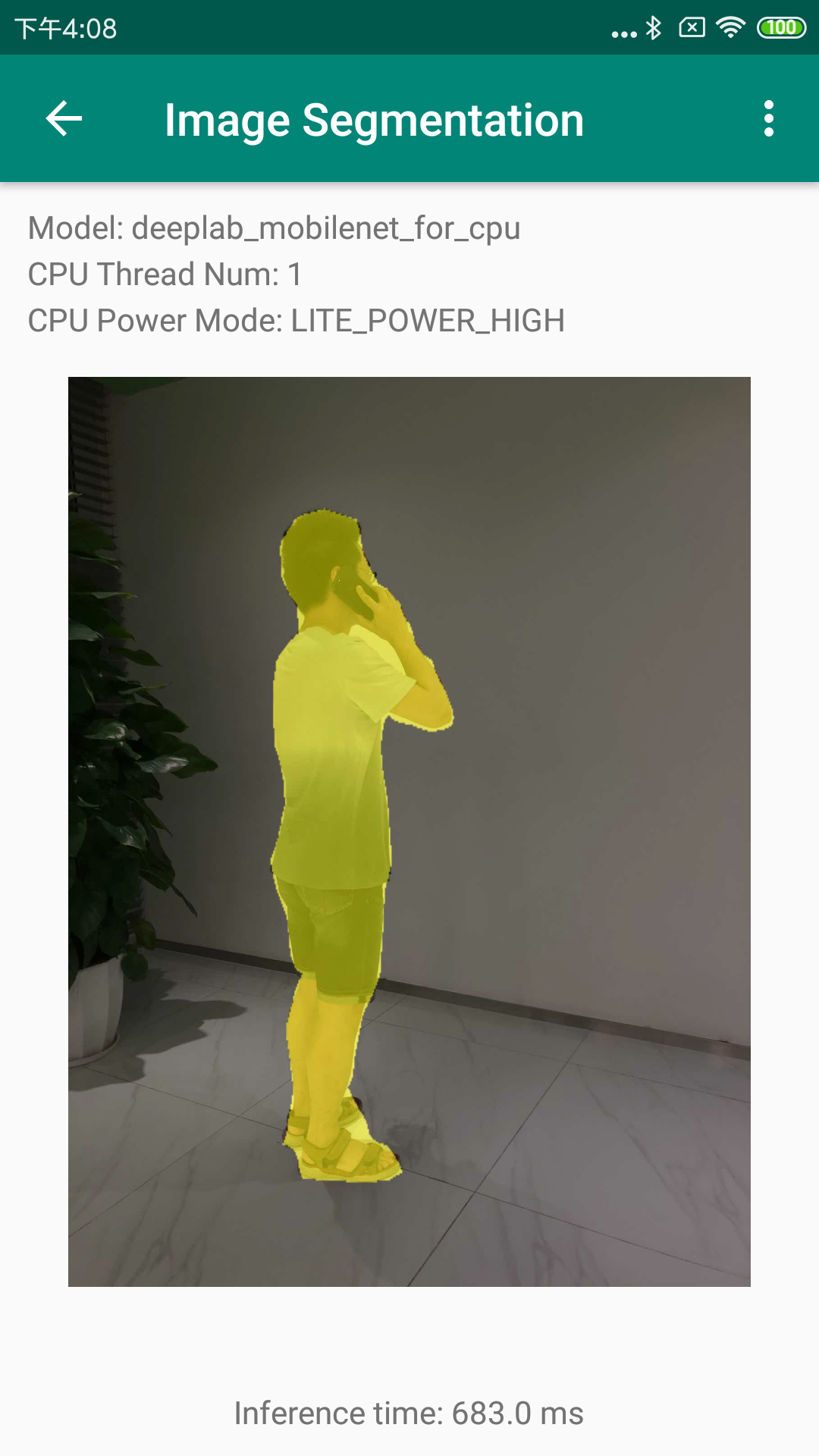

deploy/lite/example/human_3.png

0 → 100644

316.3 KB

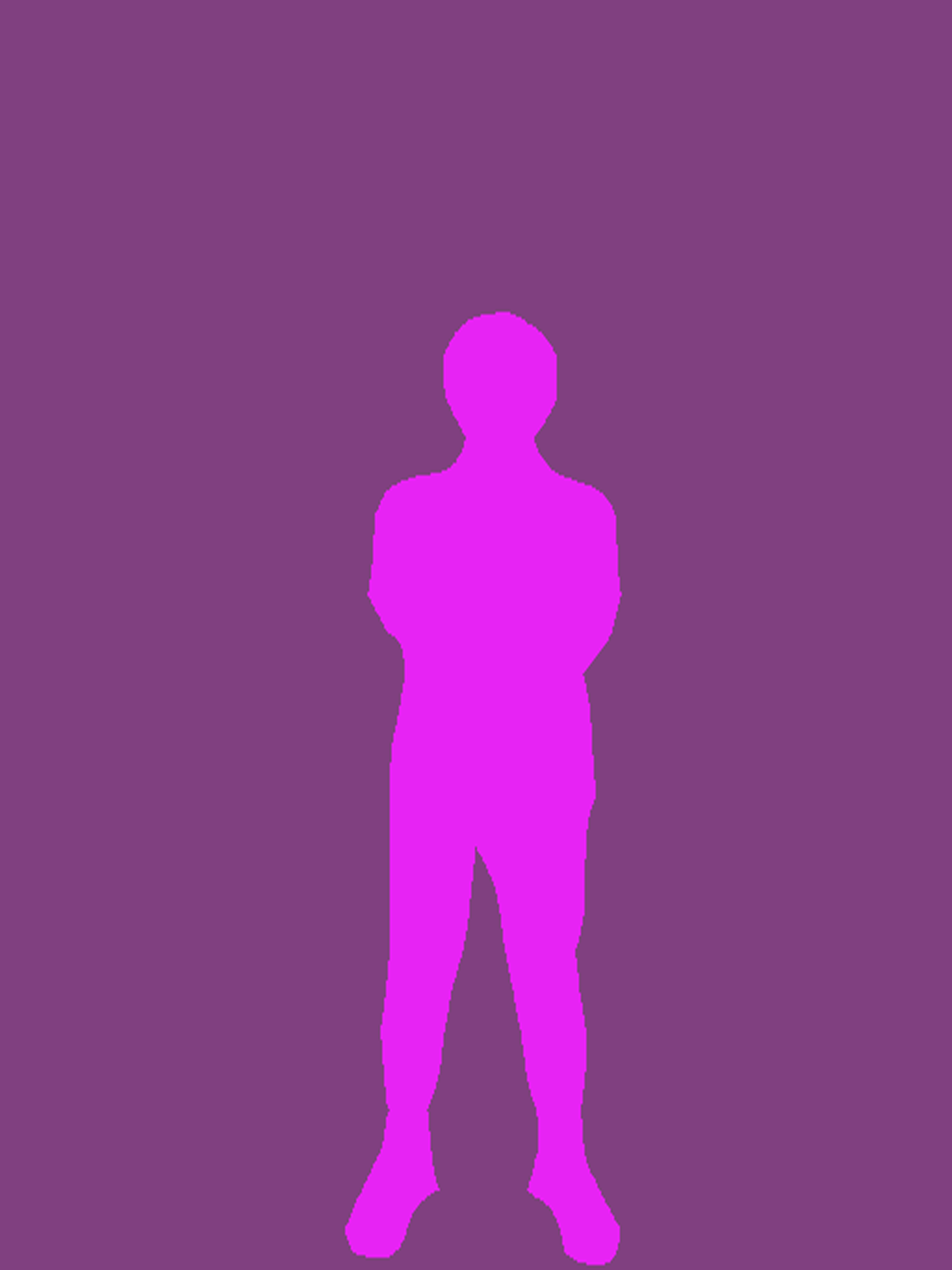

File added

106.8 KB

File added

File added

File added

File added

File added

File added

106.8 KB

2.9 KB

4.8 KB

2.0 KB

2.7 KB

4.4 KB

6.7 KB

6.2 KB

10.2 KB

8.9 KB

14.8 KB

File added

deploy/python/README.md

0 → 100644

deploy/python/infer.py

0 → 100644

deploy/python/requirements.txt

0 → 100644

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

文件已移动

docs/configs/model_hrnet_group.md

0 → 100644

pdseg/tools/gray2pseudo_color.py

0 → 100644

turtorial/finetune_hrnet.md

0 → 100644