Merge pull request #12 from frankwhzhang/200520_listwise

add listwise

Showing

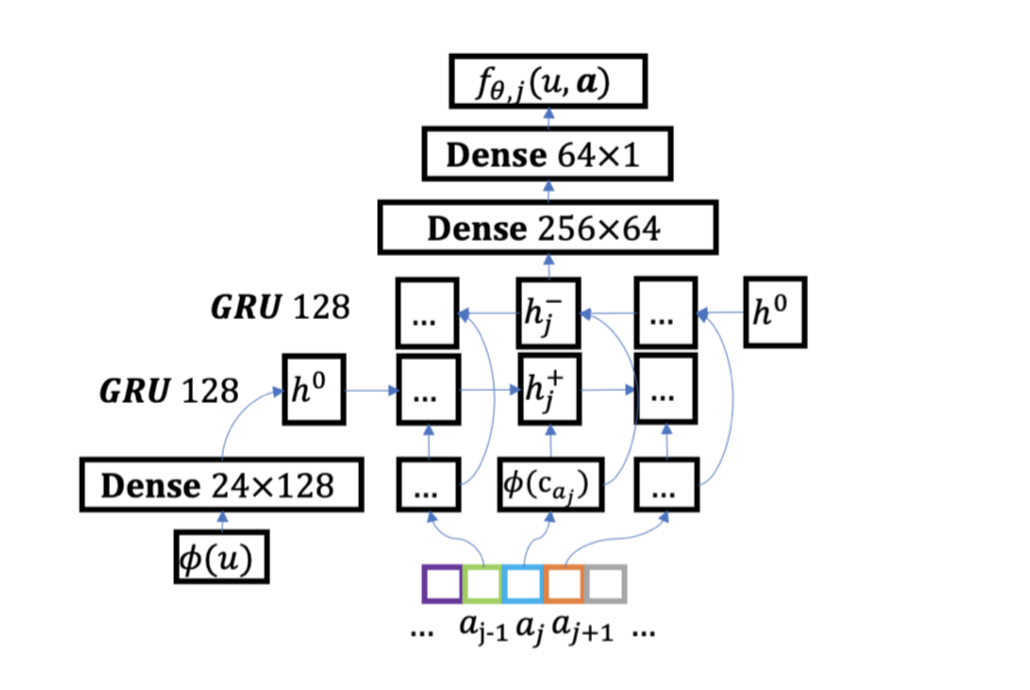

doc/imgs/listwise.png

0 → 100644

133.7 KB

models/rerank/__init__.py

0 → 100755

models/rerank/listwise/model.py

0 → 100644

models/rerank/readme.md

0 → 100755