Merge branch 'dygraph' into v3_rec_introduc

Showing

doc/doc_en/logging_en.md

0 → 100644

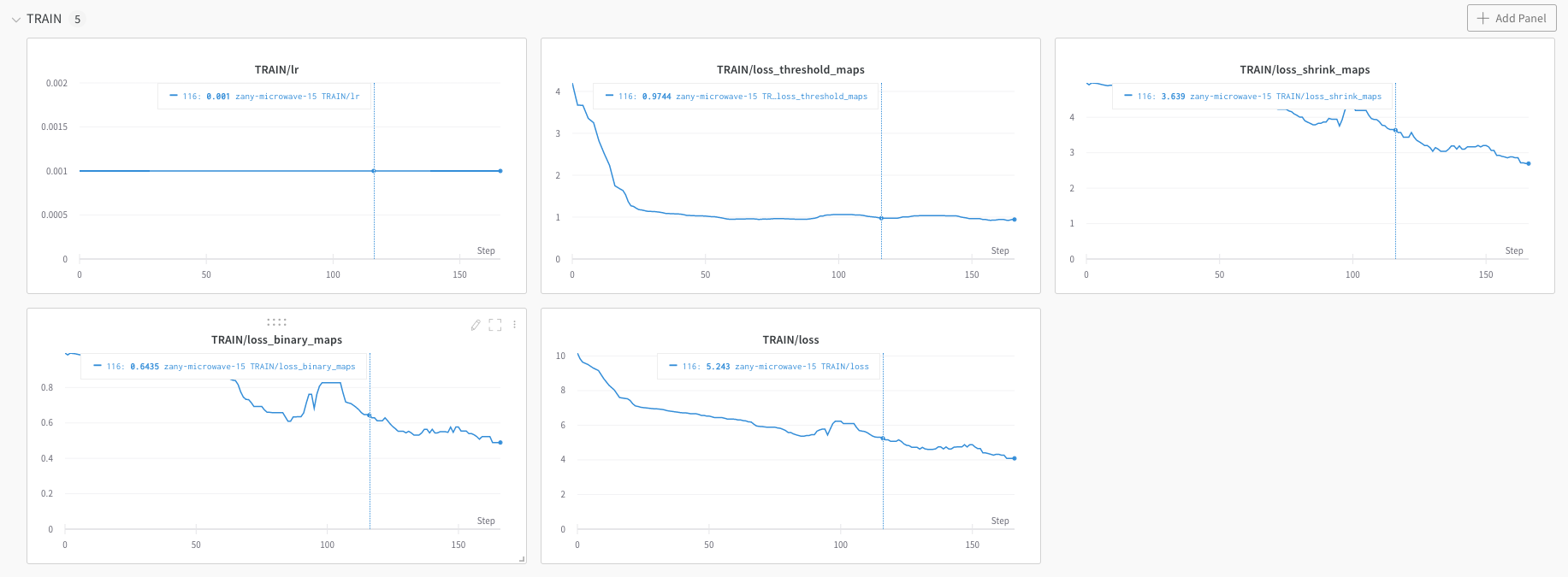

doc/imgs_en/wandb_metrics.png

0 → 100644

89.6 KB

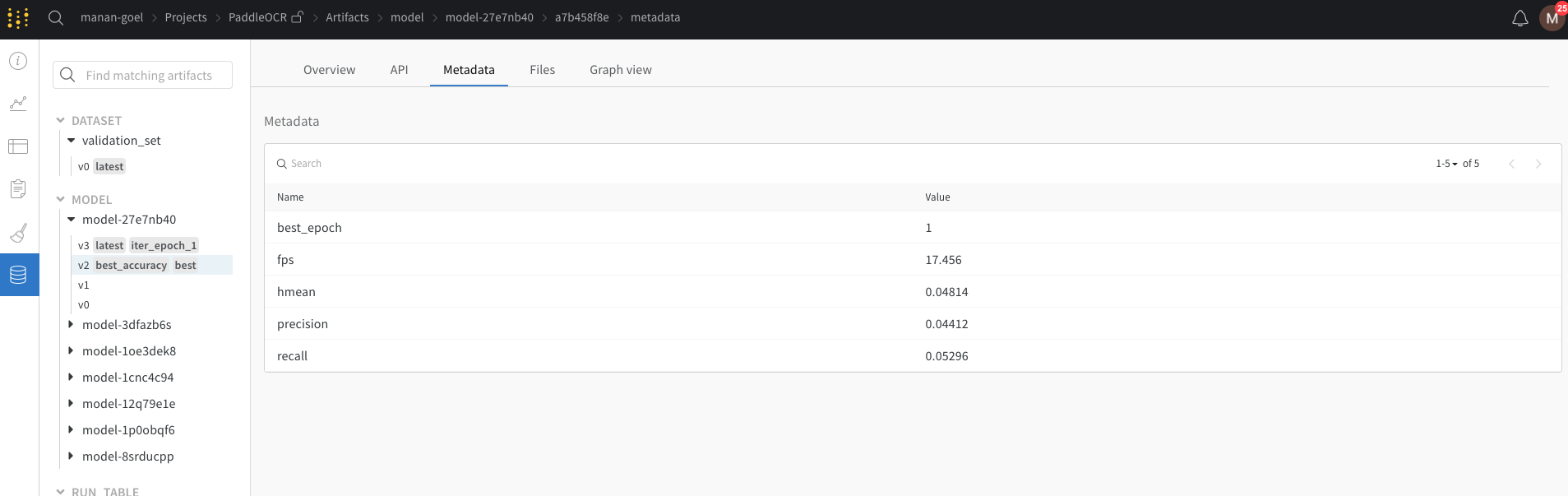

doc/imgs_en/wandb_models.png

0 → 100644

96.0 KB

ppocr/utils/loggers/__init__.py

0 → 100644

ppocr/utils/loggers/loggers.py

0 → 100644

ppocr/utils/loggers/vdl_logger.py

0 → 100644