Merge remote-tracking branch 'origin/dygraph' into dygraph


doc/doc_ch/application.md

Deleted

100644 → 0


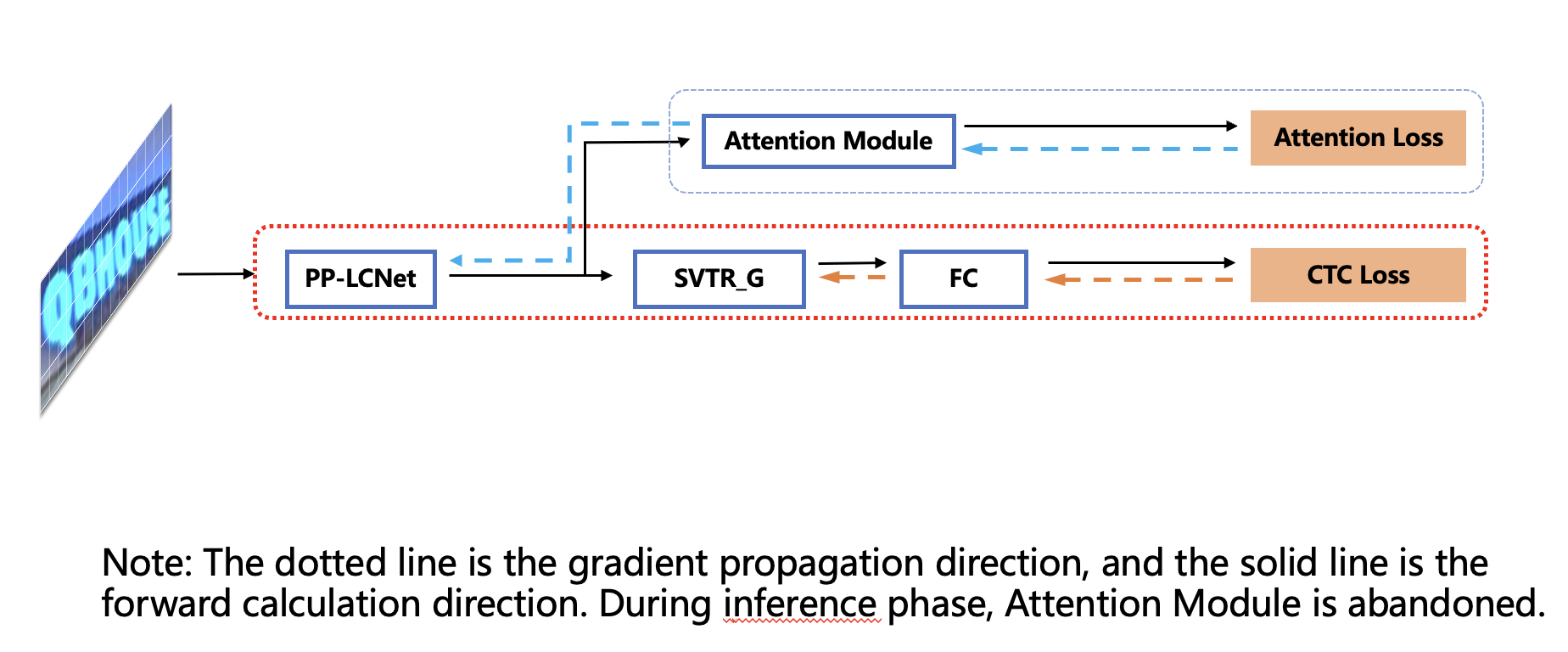

doc/ppocr_v3/GTC_en.png

0 → 100644

166.5 KB

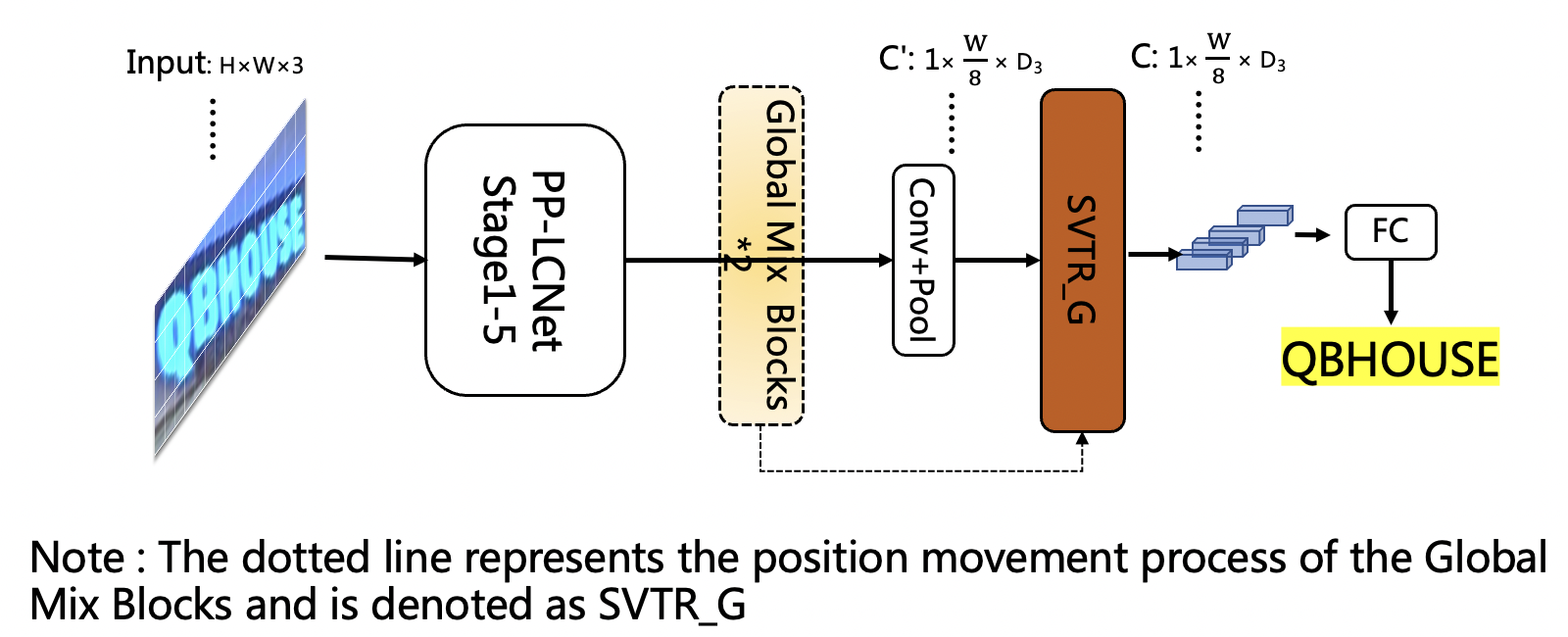

doc/ppocr_v3/LCNet_SVTR_en.png

0 → 100644

499.6 KB

762.2 KB

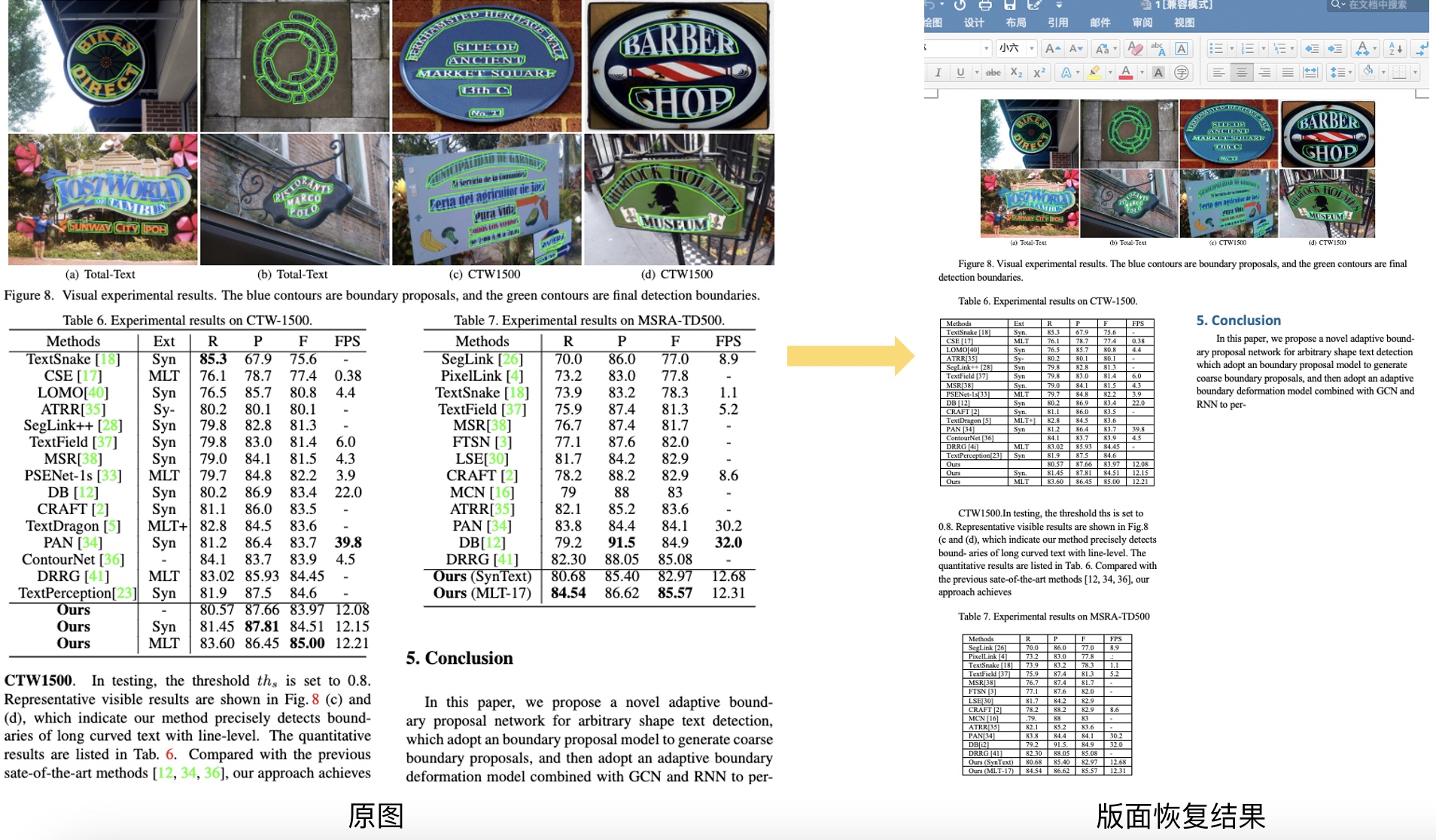

ppstructure/recovery/README.md

0 → 100644

ppstructure/recovery/README_ch.md

0 → 100644

ppstructure/recovery/docx.py

0 → 100644