Merge branch 'dygraph' of https://github.com/PaddlePaddle/PaddleOCR into add_layout_hub

doc/doc_en/algorithm_en.md

Deleted

100644 → 0

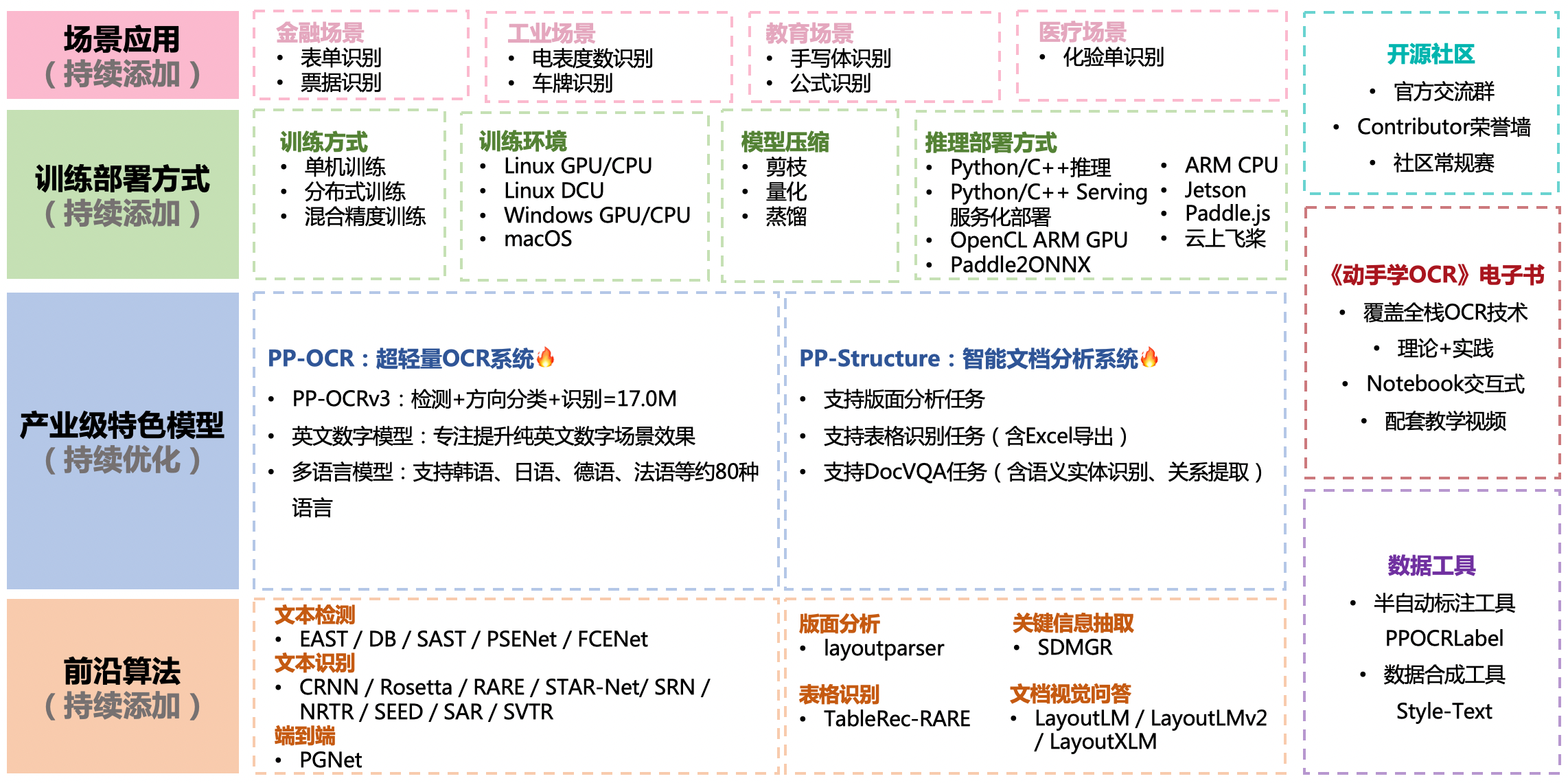

doc/features.png

Deleted

100644 → 0

1.1 MB

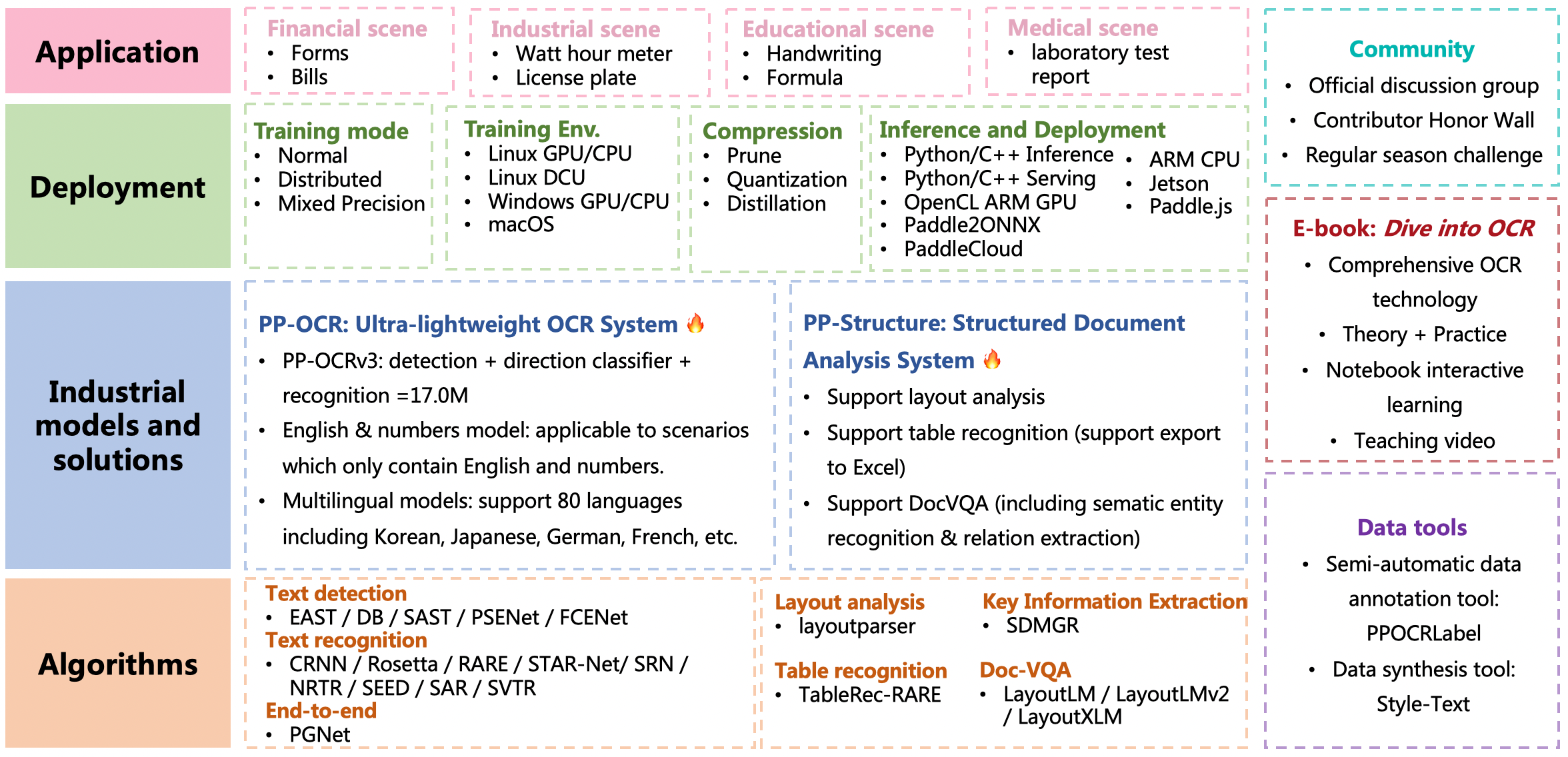

doc/features_en.png

Deleted

100644 → 0

1.2 MB

This diff is collapsed.