Created by: zhiqiu

PR types

New features

PR changes

Others

Describe

New feature, support inplace addto strategy for gradient accumulation, which can improve the performance of gradient accumulation.

Background

In back-propagation,if the gradients of a tensor are generated by more than one operation, these gradients should be accumulated before back-propagating to the next layer. For example,

If the forward network contains the following part,

y = conv2d(x, w)

z = add(x, y)

Then in backward,

x_grad_0 = add_grad(z_grad)

x_grad_1 = conv2d_grad(y_grad, ...)

x_grad = sum(x_grad_0, x_grad_1)

Traditionally, if the gradients of a tensor are generated by n operation, then after these gradients generated, a sum operation is used to sum up these gradients. x_grad = sum(x_grad_0, x_grag_1, ..., x_grad_n)

However, we can improve the performance by adds up these gradients one by one in their backward operations.

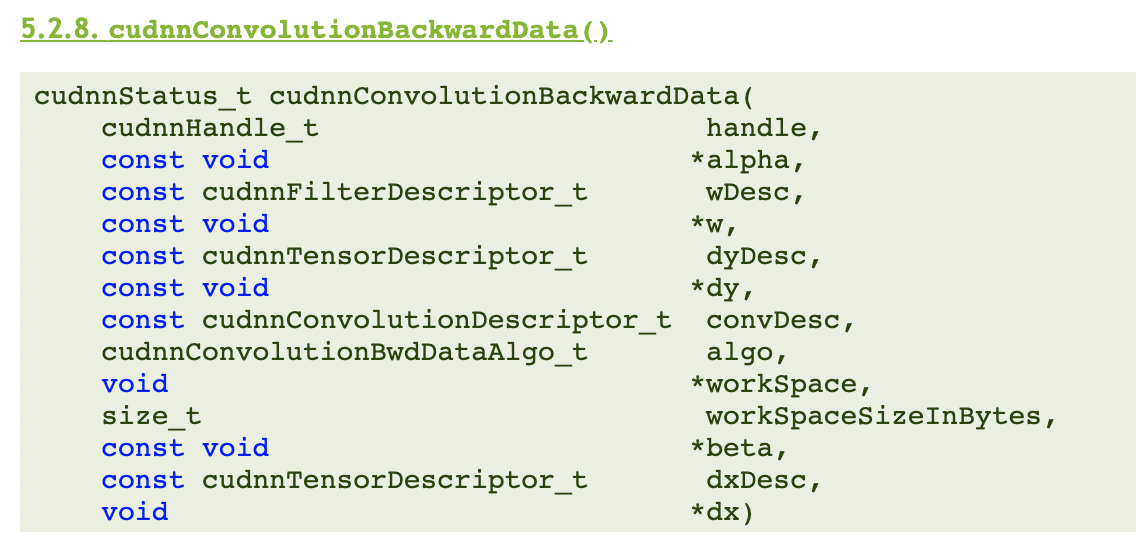

For example, the backward API of conv in cudnn cudnnConvolutionBackwardData contains a beta arguments,

which means,

dstValue = alpha[0]*result + beta[0]*priorDstValue

So, in the above case, if the gradient x_grad_1 is set to x_grad_0 before conv2d_grad's execution, then we can set beta = 1 and the result x_grad_1 will be exactly x_grad_0 + x_grad_1, which is the gradient accumulation we want to do.

Implementation

- In the backward stage, when appending grad operators automatically, use several

grad_add opsinstead ofsum op.

g = sum(g_0, g_1,..., g_n)

==>

g_sum_0 = g_0

g_sum_1 = grad_add(g_sum_0, g_1)

g_sum_2 = grad_add(g_sum_1, g_2)

...

g_sum_n = grad_add(g_sum_n-1, g_n)- For each

grad_addop with the formout = grad_add(left, right)- Make the two input tensor and the output tensor share the same memory address.

- Make the right input of

grad_adddoaddtocalculation. - Make the

grad_addskip execution (since its work is done byaddto)

Usage

To train with inplace addto strategy, there are two steps.

- Set

FLAGS_max_inplace_grad_addto a positive number, for example, 8. It means if the number gradients that need to sum up is less than 8, thegrad_addwill be used. - Set

build_strategy.enable_addto=True, it enables theinplace addto strategy.

Performance

The performance of ResNet50 with amp enabled and batch_size=128 on V100 single card.

- before: 1014 img/s

- after: 1078 img/s, 6.3% imporved.