Add an introduction to our parallelization feature (#61)

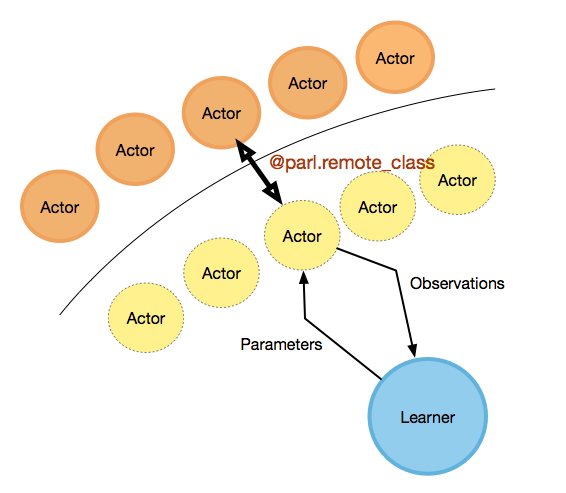

* Update remote_decorator.py
* Update README.md
* add a figure demonstrating parallelization
* Update README.md (repeated in several follow-up commits)
* add a link to IMPALA

.github/decorator.png (new file, mode 0 → 100644, 67.2 KB)