Merge pull request #21 from alibaba/shim

shim: open source code

Showing

.circleci/config.yml

0 → 100644

shim/.circleci/config.yml

0 → 100644

shim/.gitignore

0 → 100644

shim/.markdownlint.json

0 → 100644

shim/.spelling

0 → 100644

shim/LICENSE

0 → 100644

shim/Makefile

0 → 100644

shim/README-zh_CN.md

0 → 100644

shim/README.md

0 → 100644

shim/cmd/signature-server/main.go

0 → 100644

shim/conf/config.toml

0 → 100644

shim/config/config.go

0 → 100644

shim/config/config_test.go

0 → 100644

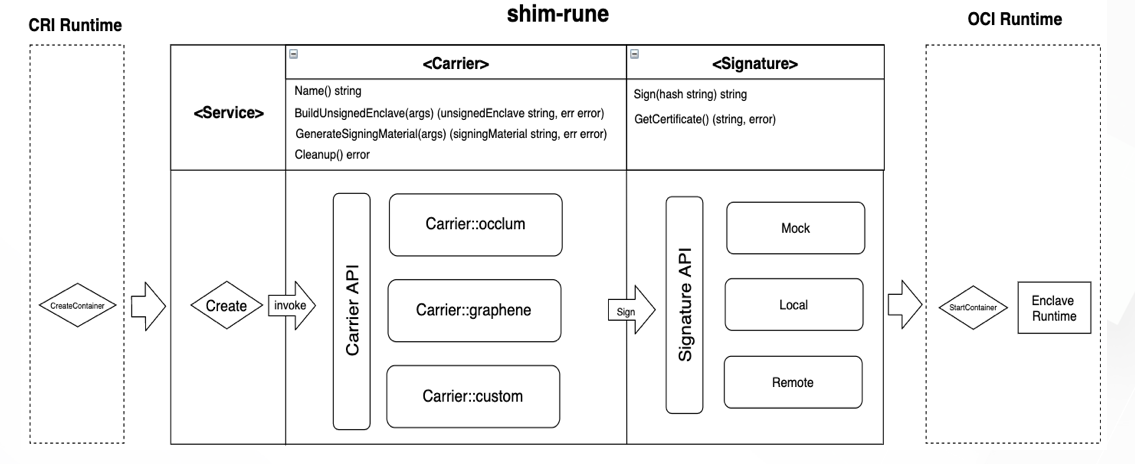

shim/docs/images/shim-carrier.png

0 → 100644

95.9 KB

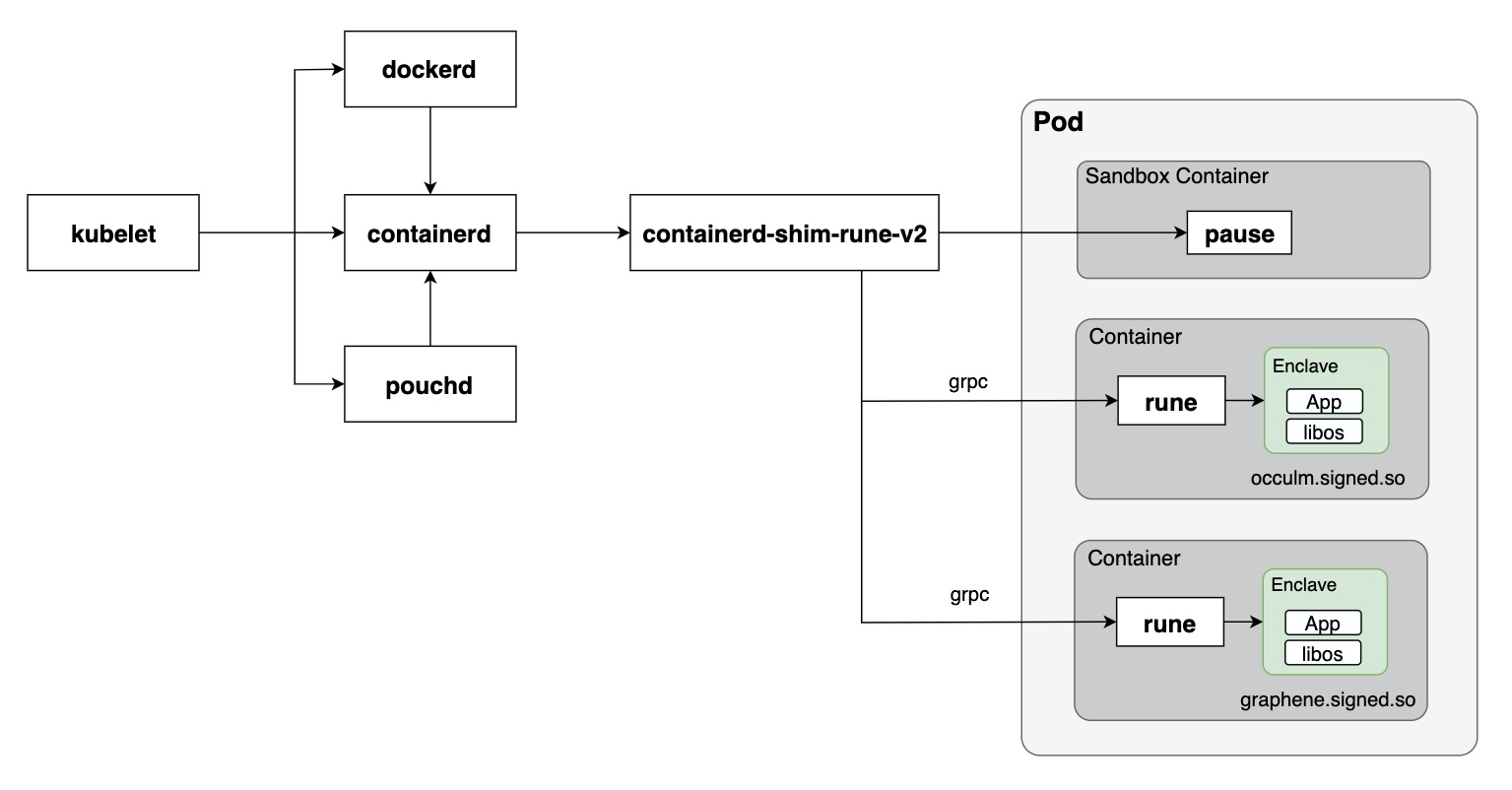

shim/docs/images/shim-rune.png

0 → 100644

147.8 KB

144.4 KB

shim/go.mod

0 → 100644

shim/go.sum

0 → 100644

此差异已折叠。

shim/runtime/carrier/README.md

0 → 100644

shim/runtime/carrier/interface.go

0 → 100644

shim/runtime/config/config.go

0 → 100644

shim/runtime/signature/README.md

0 → 100644

shim/runtime/v2/rune/types.go

0 → 100644

shim/runtime/v2/rune/v2/rune.go

0 → 100644

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。

此差异已折叠。