!581 delete on_device_inference.md and related images for r0.6

Merge pull request !581 from lvmingfu/r0.6

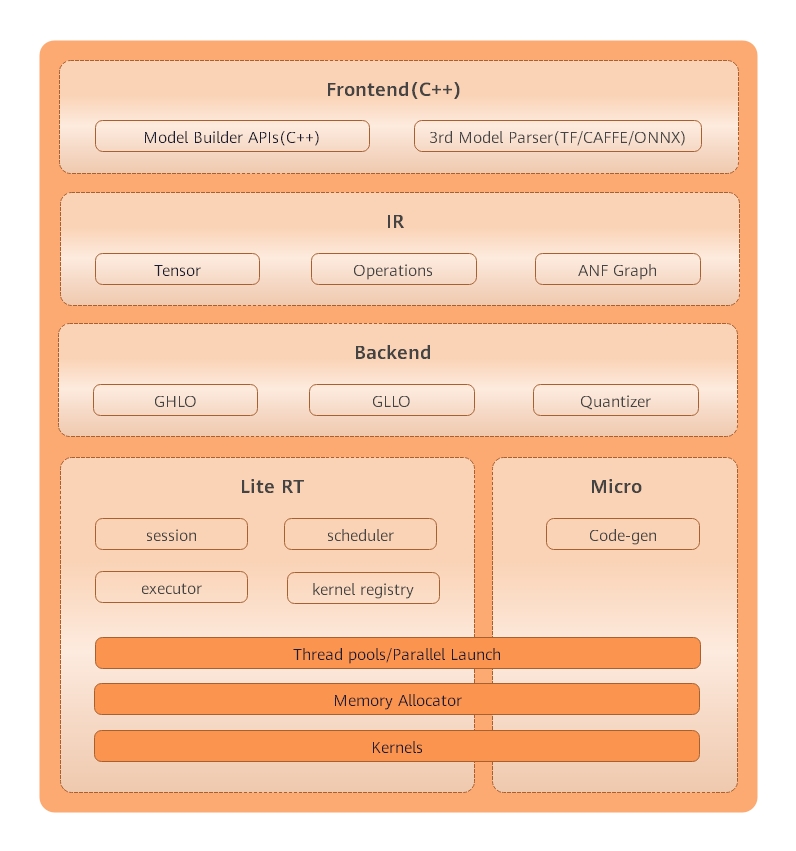

235.9 KB

File deleted

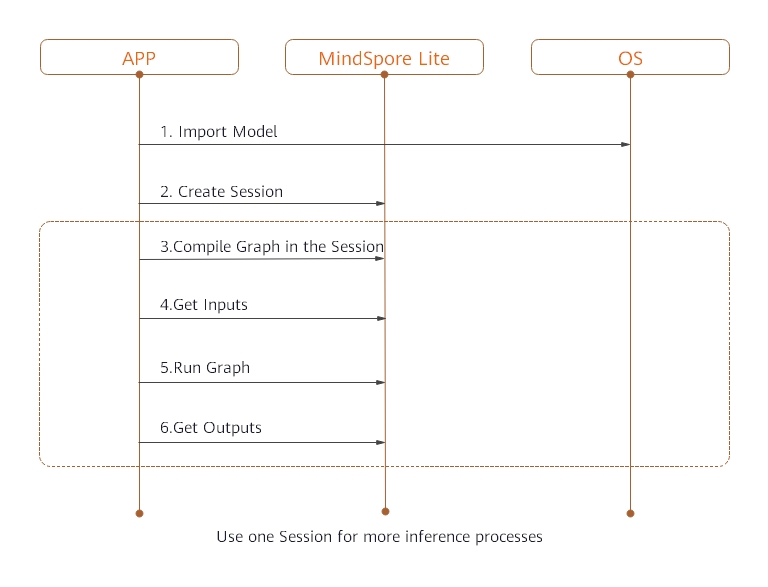

90.2 KB

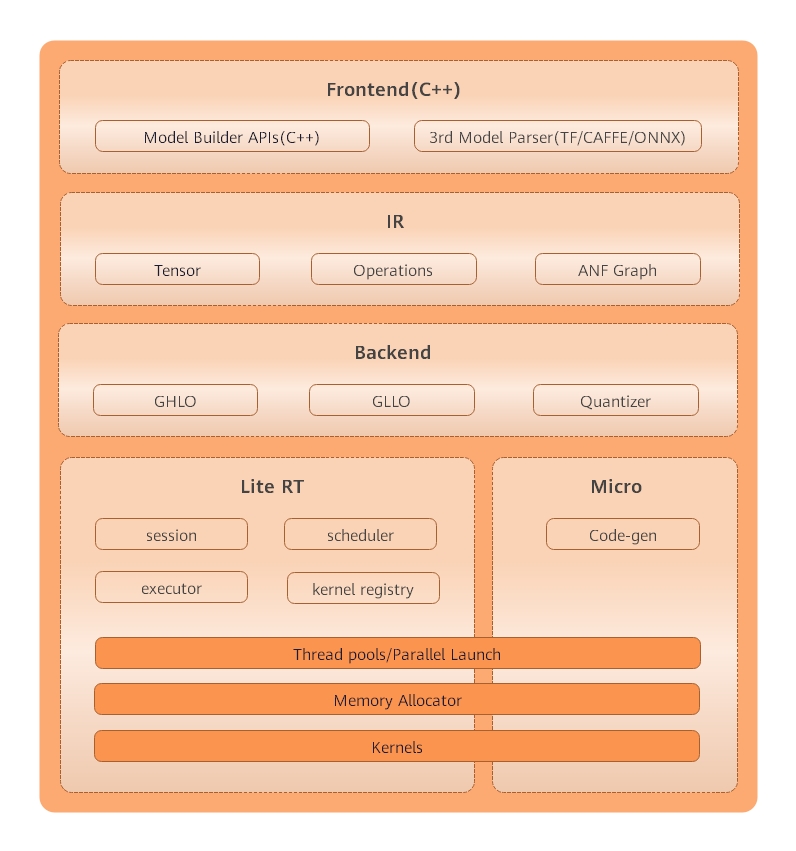

235.9 KB

File deleted

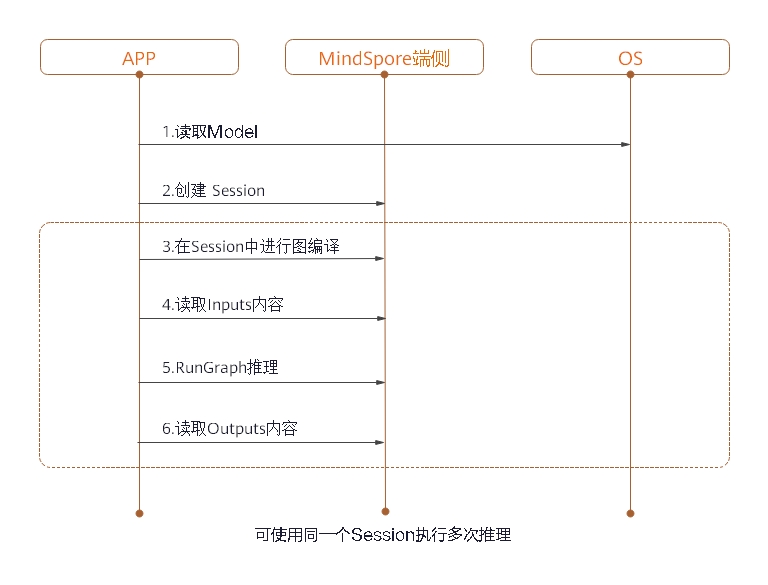

96.4 KB