!21 upgrade lenet experiment to r0.5, unify code for different platforms

Merge pull request !21 from dyonghan/update_to_0.5


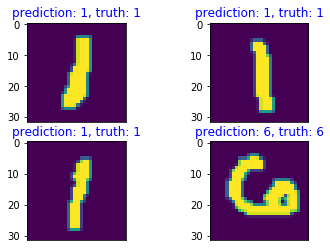

checkpoint/images/prediction.png

0 → 100644

7.3 KB

This diff is collapsed.

This diff is collapsed.

experiment_5/main.py

Deleted

100644 → 0

experiment_6/main.py

Deleted

100644 → 0

lenet5/README.md

0 → 100644

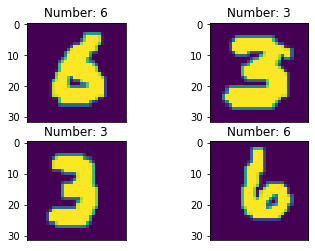

lenet5/images/mnist.png

0 → 100644

7.3 KB