- 11 11月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: There is an undocumented behavior about a certain combination of options and operations. - inplace_update_support = true, and - call `SeekForPrev()`, `SeekToLast()`, and/or `Prev()` on unflushed data. We should stop the backward iteration and report an error of `Status::NotSupported`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7618 Test Plan: make check Reviewed By: pdillinger Differential Revision: D24769619 Pulled By: riversand963 fbshipit-source-id: 81d199fa55ed4739ab10e719cc345a992238ccbb

-

- 29 10月, 2020 1 次提交

-

-

由 Ramkumar Vadivelu 提交于

Summary: Fixes Issue https://github.com/facebook/rocksdb/issues/7497 When allow_data_in_errors db_options is set, log error key details in `ParseInternalKey()` Have fixed most of the calls. Have few TODOs still pending - because have to make more deeper changes to pass in the allow_data_in_errors flag. Will do those in a separate PR later. Tests: - make check - some of the existing tests that exercise the "internal key too small" condition are: dbformat_test, cuckoo_table_builder_test - some of the existing tests that exercise the corrupted key path are: corruption_test, merge_helper_test, compaction_iterator_test Example of new status returns: - Key too small - `Corrupted Key: Internal Key too small. Size=5` - Corrupt key with allow_data_in_errors option set to false: `Corrupted Key: '<redacted>' seq:3, type:3` - Corrupt key with allow_data_in_errors option set to true: `Corrupted Key: '61' seq:3, type:3` Pull Request resolved: https://github.com/facebook/rocksdb/pull/7515 Reviewed By: ajkr Differential Revision: D24240264 Pulled By: ramvadiv fbshipit-source-id: bc48f5d4475ac19d7713e16df37505b31aac42e7

-

- 24 10月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: Add a threshold timestamp, full_history_ts_low_ of type `std::string*` to `CompactionIterator`, so that RocksDB can also perform garbage collection during compaction. * If full_history_ts_low_ is nullptr, then compaction iterator does not perform GC, preserving all timestamp history for all keys. Compaction iterator will treat user key with different timestamps as different user keys. * If full_history_ts_low_ is not nullptr, then compaction iterator performs GC. GC will look at keys older than `*full_history_ts_low_` and determine their eligibility based on factors including snapshots. Current rules of GC: * If an internal key is in the same snapshot as a previous counterpart with the same user key, and this key is eligible for GC, and the key is not single-delete or merge operand, then this key can be dropped. Note that the previous internal key cannot be a merge operand either. * If a tombstone is the most recent one in the earliest snapshot and it is eligible for GC, and keyNotExistsBeyondLevel() is true, then this tombstone can be dropped. * If a tombstone is the most recent one in a snapshot and it is eligible for GC, and the compaction is at bottommost level, then all other older internal keys of the same user key must also be eligible for GC, thus can be dropped * Single-delete, delete-range and merge are not currently supported. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7556 Test Plan: make check Reviewed By: ltamasi Differential Revision: D24507728 Pulled By: riversand963 fbshipit-source-id: 3c09c7301f41eed76dfcf4d1527e68cf6e0a8bb3

-

- 07 10月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: In opt mode, assertions are just no-ops. Therefore, we need to report errors instead of just doing an `assert(false)`. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7483 Test Plan: make check Reviewed By: anand1976 Differential Revision: D24142725 Pulled By: riversand963 fbshipit-source-id: 5629556dbe29f00dd09e30a7d5df5e6cf09ee435

-

- 03 10月, 2020 1 次提交

-

-

由 sdong 提交于

Summary: Fix prefix_test so that it passes when ASSERT_STATUS_CHECKED=1 Pull Request resolved: https://github.com/facebook/rocksdb/pull/7495 Test Plan: Run the test with the option Reviewed By: anand1976 Differential Revision: D24069715 fbshipit-source-id: 54f74b58575a1b49dbdee9ea2d24751fa956b620

-

- 01 10月, 2020 1 次提交

-

-

由 Ramkumar Vadivelu 提交于

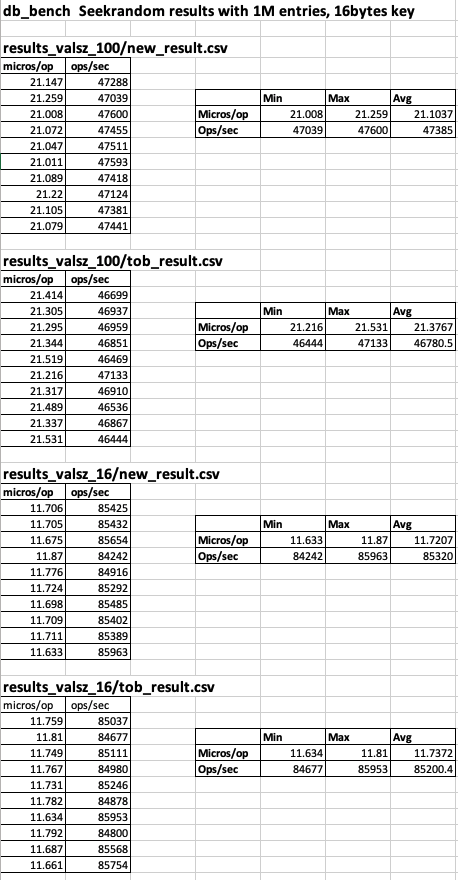

Summary: Fixes https://github.com/facebook/rocksdb/issues/7430 Change ParseInternalKey() to return Status instead of bool. db_bench (seekrandom) based before/after results with value size of 100 bytes and 16 bytes can be found at (tests ran on an udb server): https://www.dropbox.com/s/47bwamdy5ozngph/PIK_ret_Status_results.xlsx?dl=0  Pull Request resolved: https://github.com/facebook/rocksdb/pull/7457 Reviewed By: ajkr Differential Revision: D24002433 Pulled By: ramvadiv fbshipit-source-id: ac253ecf577a29044c47c3fe254a01e71404c44c

-

- 24 9月, 2020 1 次提交

-

-

由 rockeet 提交于

Summary: Pull Request resolved: https://github.com/facebook/rocksdb/pull/7407 Reviewed By: ajkr Differential Revision: D23817122 Pulled By: jay-zhuang fbshipit-source-id: 62bf43e4d780fad8c682edd750b4800b5b8f4a77

-

- 25 8月, 2020 1 次提交

-

-

由 mrambacher 提交于

Summary: More tests now pass. When in doubt, I added a TODO comment to check what should happen with an ignored error. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7305 Reviewed By: akankshamahajan15 Differential Revision: D23301262 Pulled By: ajkr fbshipit-source-id: 5f120edc7393560aefc0633250277bbc7e8de9e6

-

- 06 8月, 2020 1 次提交

-

-

由 sdong 提交于

Summary: IteratorIterator::IsOutOfBound() and IteratorIterator::MayBeOutOfUpperBound() are two functions that related to upper bound check. It is hard for users to reason about this complexity. Consolidate the two functions into one and assign an enum as results to improve readability. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7200 Test Plan: Run all existing test. Would run crash test with atomic for a while. Reviewed By: anand1976 Differential Revision: D22833181 fbshipit-source-id: a0c724267056adbd0476bde74650e6c7226077e6

-

- 05 8月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: as title. When ReadOptions.iter_start_ts is not nullptr, DBIter::key() should return internal keys including value type. Pull Request resolved: https://github.com/facebook/rocksdb/pull/7178 Test Plan: make check Reviewed By: ltamasi Differential Revision: D22935879 Pulled By: riversand963 fbshipit-source-id: 7508d962cf11ebcfa6386d2529b4f3606b47ccfd

-

- 29 5月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: Preliminary user-timestamp support for delete. If ["a", ts=100] exists, you can delete it by calling `DB::Delete(write_options, key)` in which `write_options.timestamp` points to a `ts` higher than 100. Implementation A new ValueType, i.e. `kTypeDeletionWithTimestamp` is added for deletion marker with timestamp. The reason for a separate `kTypeDeletionWithTimestamp`: RocksDB may drop tombstones (keys with kTypeDeletion) when compacting them to the bottom level. This is OK and useful if timestamp is disabled. When timestamp is enabled, should we still reuse `kTypeDeletion`, we may drop the tombstone with a more recent timestamp, causing deleted keys to re-appear. Test plan (dev server) ``` make check ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/6253 Reviewed By: ltamasi Differential Revision: D20995328 Pulled By: riversand963 fbshipit-source-id: a9e5c22968ad76f98e3dc6ee0151265a3f0df619

-

- 16 4月, 2020 1 次提交

-

-

由 Mike Kolupaev 提交于

Summary: Context: Index type `kBinarySearchWithFirstKey` added the ability for sst file iterator to sometimes report a key from index without reading the corresponding data block. This is useful when sst blocks are cut at some meaningful boundaries (e.g. one block per key prefix), and many seeks land between blocks (e.g. for each prefix, the ranges of keys in different sst files are nearly disjoint, so a typical seek needs to read a data block from only one file even if all files have the prefix). But this added a new error condition, which rocksdb code was really not equipped to deal with: `InternalIterator::value()` may fail with an IO error or Status::Incomplete, but it's just a method returning a Slice, with no way to report error instead. Before this PR, this type of error wasn't handled at all (an empty slice was returned), and kBinarySearchWithFirstKey implementation was considered a prototype. Now that we (LogDevice) have experimented with kBinarySearchWithFirstKey for a while and confirmed that it's really useful, this PR is adding the missing error handling. It's a pretty inconvenient situation implementation-wise. The error needs to be reported from InternalIterator when trying to access value. But there are ~700 call sites of `InternalIterator::value()`, most of which either can't hit the error condition (because the iterator is reading from memtable or from index or something) or wouldn't benefit from the deferred loading of the value (e.g. compaction iterator that reads all values anyway). Adding error handling to all these call sites would needlessly bloat the code. So instead I made the deferred value loading optional: only the call sites that may use deferred loading have to call the new method `PrepareValue()` before calling `value()`. The feature is enabled with a new bool argument `allow_unprepared_value` to a bunch of methods that create iterators (it wouldn't make sense to put it in ReadOptions because it's completely internal to iterators, with virtually no user-visible effect). Lmk if you have better ideas. Note that the deferred value loading only happens for *internal* iterators. The user-visible iterator (DBIter) always prepares the value before returning from Seek/Next/etc. We could go further and add an API to defer that value loading too, but that's most likely not useful for LogDevice, so it doesn't seem worth the complexity for now. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6621 Test Plan: make -j5 check . Will also deploy to some logdevice test clusters and look at stats. Reviewed By: siying Differential Revision: D20786930 Pulled By: al13n321 fbshipit-source-id: 6da77d918bad3780522e918f17f4d5513d3e99ee

-

- 11 4月, 2020 2 次提交

-

-

由 Yanqin Jin 提交于

Summary: Towards making compaction logic compatible with user timestamp. When computing boundaries and overlapping ranges for inputs of compaction, We need to compare SSTs by user key without timestamp. Test plan (devserver): ``` make check ``` Several individual tests: ``` ./version_set_test --gtest_filter=VersionStorageInfoTimestampTest.GetOverlappingInputs ./db_with_timestamp_compaction_test ./db_with_timestamp_basic_test ``` Pull Request resolved: https://github.com/facebook/rocksdb/pull/6645 Reviewed By: ltamasi Differential Revision: D20960012 Pulled By: riversand963 fbshipit-source-id: ad377fa9eb481bf7a8a3e1824aaade48cdc653a4

-

由 Huisheng Liu 提交于

Summary: (Based on Yanqin's idea) Add a new field in readoptions as lower timestamp bound for iterator. When the parameter is not supplied (nullptr), the iterator returns the latest visible version of a record. When it is supplied, the existing timestamp field is the upper bound. Together the two serves as a bounded time window. The iterator returns all versions of a record falling in the window. SeekRandom perf test (10 minutes) on the same development machine ram drive with the same DB data shows no regression (within marge of error). The test is adapted from https://github.com/facebook/rocksdb/wiki/RocksDB-In-Memory-Workload-Performance-Benchmarks. base line (commit e860f884): seekrandom : 7.836 micros/op 4082449 ops/sec; (0 of 73481999 found) This PR: seekrandom : 7.764 micros/op 4120935 ops/sec; (0 of 71303999 found) db_bench --db=r:\rocksdb.github --num_levels=6 --key_size=20 --prefix_size=20 --keys_per_prefix=0 --value_size=100 --cache_size=2147483648 --cache_numshardbits=6 --compression_type=none --compression_ratio=1 --min_level_to_compress=-1 --disable_seek_compaction=1 --hard_rate_limit=2 --write_buffer_size=134217728 --max_write_buffer_number=2 --level0_file_num_compaction_trigger=8 --target_file_size_base=134217728 --max_bytes_for_level_base=1073741824 --disable_wal=0 --wal_dir=r:\rocksdb.github\WAL_LOG --sync=0 --verify_checksum=1 --statistics=0 --stats_per_interval=0 --stats_interval=1048576 --histogram=0 --use_plain_table=1 --open_files=-1 --memtablerep=prefix_hash --bloom_bits=10 --bloom_locality=1 --duration=600 --benchmarks=seekrandom --use_existing_db=1 --num=25000000 --threads=32 --allow_concurrent_memtable_write=0 Pull Request resolved: https://github.com/facebook/rocksdb/pull/6544 Reviewed By: ltamasi Differential Revision: D20844069 Pulled By: riversand963 fbshipit-source-id: d97f2bf38a323c8c6a68db213b2d3c694b1c1f74

-

- 07 3月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: Preliminary support for iterator with user timestamp. Current implementation does not consider merge operator and reverse iterator. Auto compaction is also disabled in unit tests. Create an iterator with timestamp. ``` ... read_opts.timestamp = &ts; auto* iter = db->NewIterator(read_opts); // target is key without timestamp. for (iter->Seek(target); iter->Valid(); iter->Next()) {} for (iter->SeekToFirst(); iter->Valid(); iter->Next()) {} delete iter; read_opts.timestamp = &ts1; // lower_bound and upper_bound are without timestamp. read_opts.iterate_lower_bound = &lower_bound; read_opts.iterate_upper_bound = &upper_bound; auto* iter1 = db->NewIterator(read_opts); // Do Seek or SeekToFirst() delete iter1; ``` Test plan (dev server) ``` $make check ``` Simple benchmarking (dev server) 1. The overhead introduced by this PR even when timestamp is disabled. key size: 16 bytes value size: 100 bytes Entries: 1000000 Data reside in main memory, and try to stress iterator. Repeated three times on master and this PR. - Seek without next ``` ./db_bench -db=/dev/shm/rocksdbtest-1000 -benchmarks=fillseq,seekrandom -enable_pipelined_write=false -disable_wal=true -format_version=3 ``` master: 159047.0 ops/sec this PR: 158922.3 ops/sec (2% drop in throughput) - Seek and next 10 times ``` ./db_bench -db=/dev/shm/rocksdbtest-1000 -benchmarks=fillseq,seekrandom -enable_pipelined_write=false -disable_wal=true -format_version=3 -seek_nexts=10 ``` master: 109539.3 ops/sec this PR: 107519.7 ops/sec (2% drop in throughput) Pull Request resolved: https://github.com/facebook/rocksdb/pull/6255 Differential Revision: D19438227 Pulled By: riversand963 fbshipit-source-id: b66b4979486f8474619f4aa6bdd88598870b0746

-

- 22 2月, 2020 1 次提交

-

-

由 Yanqin Jin 提交于

Summary: Cleanup some code without any real change in functionality. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6440 Differential Revision: D20015891 Pulled By: riversand963 fbshipit-source-id: 33e18754b0f002006a6d4805e9aaf84c0c8ad25a

-

- 21 2月, 2020 1 次提交

-

-

由 sdong 提交于

Summary: When dynamically linking two binaries together, different builds of RocksDB from two sources might cause errors. To provide a tool for user to solve the problem, the RocksDB namespace is changed to a flag which can be overridden in build time. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6433 Test Plan: Build release, all and jtest. Try to build with ROCKSDB_NAMESPACE with another flag. Differential Revision: D19977691 fbshipit-source-id: aa7f2d0972e1c31d75339ac48478f34f6cfcfb3e

-

- 29 1月, 2020 1 次提交

-

-

由 sdong 提交于

Summary: Add a new option ReadOptions.auto_prefix_mode. When set to true, iterator should return the same result as total order seek, but may choose to do prefix seek internally, based on iterator upper bounds. Also fix two previous bugs when handling prefix extrator changes: (1) reverse iterator should not rely on upper bound to determine prefix. Fix it with skipping prefix check. (2) block-based filter is not handled properly. Pull Request resolved: https://github.com/facebook/rocksdb/pull/6314 Test Plan: (1) add a unit test; (2) add the check to stress test and run see whether it can pass at least one run. Differential Revision: D19458717 fbshipit-source-id: 51c1bcc5cdd826c2469af201979a39600e779bce

-

- 20 11月, 2019 1 次提交

-

-

由 tabokie 提交于

Summary: Fix: when `db_iter` falls back to using seek by `FindValueForCurrentKeyUsingSeek`, `is_blob_` flag is not properly set on encountering BlobIndex. Also patch existing test for the mentioned code path. Signed-off-by: Ntabokie <xy.tao@outlook.com> Pull Request resolved: https://github.com/facebook/rocksdb/pull/6051 Differential Revision: D18596274 Pulled By: ltamasi fbshipit-source-id: 8e4714af263b99dc2c379707d50db88fe6799278

-

- 17 9月, 2019 1 次提交

-

-

由 sdong 提交于

Summary: Doing some code reordering in DBIter::Seek() and DBIter::SeekForPrev(). The logic largely remains the same, except slight difference when handling some stats when valid_ = false, where they are not supposed to be used anyway. Also remove prefix_start_key_, which sometimes point a part of seek target, some times prefix_start_buf_, which is confusing. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5794 Test Plan: Run all tests. Differential Revision: D17375257 fbshipit-source-id: 7339a23898cecd3a8475bf72340fcd6f82b933c5

-

- 14 9月, 2019 1 次提交

-

-

由 anand76 提交于

Summary: Move definition and implementation for ArenaWrappedDBIter into its own .h/.cc files. Also, change inlining of functions to better comply with the Google C++ style guide. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5801 Test Plan: make check Differential Revision: D17371012 Pulled By: anand1976 fbshipit-source-id: c1361abc2851575111e357a63d88be3b3d6cb341

-

- 12 9月, 2019 1 次提交

-

-

由 Shylock Hg 提交于

Summary: Use delete to disable automatic generated methods instead of private, and put the constructor together for more clear.This modification cause the unused field warning, so add unused attribute to disable this warning. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5009 Differential Revision: D17288733 fbshipit-source-id: 8a767ce096f185f1db01bd28fc88fef1cdd921f3

-

- 23 7月, 2019 1 次提交

-

-

由 Manuel Ung 提交于

Summary: There are a number of fixes in this PR (with most bugs found via the added stress tests): 1. Re-enable reseek optimization. This was initially disabled to avoid infinite loops in https://github.com/facebook/rocksdb/pull/3955 but this can be resolved by remembering not to reseek after a reseek has already been done. This problem only affects forward iteration in `DBIter::FindNextUserEntryInternal`, as we already disable reseeking in `DBIter::FindValueForCurrentKeyUsingSeek`. 2. Verify that ReadOption.snapshot can be safely used for iterator creation. Some snapshots would not give correct results because snaphsot validation would not be enforced, breaking some assumptions in Prev() iteration. 3. In the non-snapshot Get() case, reads done at `LastPublishedSequence` may not be enough, because unprepared sequence numbers are not published. Use `std::max(published_seq, max_visible_seq)` to do lookups instead. 4. Add stress test to test reading own writes. 5. Minor bug in the allow_concurrent_memtable_write case where we forgot to pass in batch_per_txn_. 6. Minor performance optimization in `CalcMaxUnpreparedSequenceNumber` by assigning by reference instead of value. 7. Add some more comments everywhere. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5573 Differential Revision: D16276089 Pulled By: lth fbshipit-source-id: 18029c944eb427a90a87dee76ac1b23f37ec1ccb

-

- 03 7月, 2019 1 次提交

-

-

由 Yi Wu 提交于

Summary: This is a second attempt for https://github.com/facebook/rocksdb/issues/5111, with the fix to redo iterate bounds check after `SeekXXX()`. This is because MyRocks may change iterate bounds between seek. See https://github.com/facebook/rocksdb/issues/5111 for original benchmark result and discussion. Closes https://github.com/facebook/rocksdb/issues/5463. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5468 Test Plan: Existing rocksdb tests, plus myrocks test `rocksdb.optimizer_loose_index_scans` and `rocksdb.group_min_max`. Differential Revision: D15863332 fbshipit-source-id: ab4aba5899838591806b8673899bd465f3f53e18

-

- 12 6月, 2019 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: This reverts commit f3a78475. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5440 Differential Revision: D15765967 Pulled By: ltamasi fbshipit-source-id: d027fe24132e3729289cd7c01857a7eb449d9dd0

-

- 08 6月, 2019 1 次提交

-

-

由 Levi Tamasi 提交于

Summary: PR #5111 reduced the number of key comparisons when iterating with upper/lower bounds; however, this caused a regression for MyRocks. Reverting to the previous behavior in BlockBasedTableIterator as a hotfix. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5428 Differential Revision: D15721038 Pulled By: ltamasi fbshipit-source-id: 5450106442f1763bccd17f6cfd648697f2ae8b6c

-

- 04 6月, 2019 1 次提交

-

-

由 Siying Dong 提交于

Summary: util/ means for lower level libraries. trace_replay is highly integrated to DB and sometimes call DB. Move it out to a separate directory. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5376 Differential Revision: D15550938 Pulled By: siying fbshipit-source-id: f46dce5ceffdc05a73f26379c7bb1b79ebe6c207

-

- 01 6月, 2019 1 次提交

-

-

由 Siying Dong 提交于

Summary: Many logging related source files are under util/. It will be more structured if they are together. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5387 Differential Revision: D15579036 Pulled By: siying fbshipit-source-id: 3850134ed50b8c0bb40a0c8ae1f184fa4081303f

-

- 31 5月, 2019 1 次提交

-

-

由 Siying Dong 提交于

Summary: Move arena, allocator, and memory tools under util to a separate memory/ directory. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5382 Differential Revision: D15564655 Pulled By: siying fbshipit-source-id: 9cd6b5d0d3d52b39606e19221fa154596e5852a5

-

- 30 5月, 2019 1 次提交

-

-

由 Siying Dong 提交于

Summary: util/ means for lower level libraries, so it's a good idea to move the files which requires knowledge to DB out. Create a file/ and move some files there. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5375 Differential Revision: D15550935 Pulled By: siying fbshipit-source-id: 61a9715dcde5386eebfb43e93f847bba1ae0d3f2

-

- 18 5月, 2019 1 次提交

-

-

由 yiwu-arbug 提交于

Summary: Previously if iterator upper/lower bound presents, `DBIter` will check the bound for every key. This patch turns the check into per-file or per-data block check when applicable, by checking against either file largest/smallest key or block index key. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5111 Differential Revision: D15330061 Pulled By: siying fbshipit-source-id: 8a653fe3cd50d94d81eb2d13b087326c58ee2024

-

- 10 5月, 2019 1 次提交

-

-

由 Siying Dong 提交于

Summary: Right now, DBIter::Next() always checks whether an entry is for the same user key as the previous entry to see whether the key should be hidden to the user. However, if previous entry's sequence number is 0, the check is not needed because 0 is the oldest possible sequence number. We could extend it from seqnum 0 case to simply prev_seqno >= current_seqno. However, it is less robust with bug or unexpected situations, while the gain is relatively low. We can always extend it later when needed. In a readseq benchmark with full formed LSM-tree, number of key comparisons called is reduced from 2.981 to 2.165. readseq against a fully compacted DB, no key comparison is called. Performance in this benchmark didn't show obvious improvement, which is expected because key comparisons only takes small percentage of CPU. But it may show up to be more effective if users have an expensive customized comparator. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5244 Differential Revision: D15067257 Pulled By: siying fbshipit-source-id: b7e1ef3ec4fa928cba509683d2b3246e35d270d9

-

- 24 4月, 2019 1 次提交

-

-

由 Siying Dong 提交于

Summary: It's hard to get DBIter to directly use InternalIterator::NextAndGetResult() because the code change would be complicated. Instead, use IteratorWrapper, where Next() is already using NextAndGetResult(). Performance number is hard to measure because it is small and ther is variation. I run readseq many times, and there seems to be 1% gain. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5214 Differential Revision: D15003635 Pulled By: siying fbshipit-source-id: 17af1965c409c2fe90cd85037fbd2c5a1364f82a

-

- 20 4月, 2019 1 次提交

-

-

由 Siying Dong 提交于

Summary: My compiler doesn't inline DBIter::Next() to arena wrapped iterator, even if it is a direct forward. Adding this annotation makes it inlined. It might not always work but inlinging this function to arena wrapped iterator always feels like the right decision. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5217 Differential Revision: D15004086 Pulled By: siying fbshipit-source-id: a4cffd79c6fb092669a3a90633c9aa5e494f8a66

-

- 19 4月, 2019 2 次提交

-

-

由 Siying Dong 提交于

Summary: Several small changes for Next(): 1. Reducing branching by always update local_stats_.next_count_++ even if statistics is null. This should be faster than a branching. 2. Replacing ResetInternalKeysSkippedCounter() in Next() because the valid_ check is not needed in this case. 3. iter_->Valid() should always be true for non merge case. Remove this check. 4. Adding an inline annotation. It ends up with not picked up by my compiler, but it shouldn't hurt. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5200 Differential Revision: D15000391 Pulled By: siying fbshipit-source-id: be97f61c708968234fb8e5cf272b5c2ac07dc4dd

-

由 Siying Dong 提交于

Summary: In long scans, virtual function calls of Next(), Valid(), key() and value() are not trivial. By introducing NextAndGetResult(), Some of the Next(), Valid() and key() calls are consolidated into one virtual function call to reduce CPU. Also did some inline tricks and add some "final" randomly in some functions. Even without the "final" annotation, most Next() calls are inlined with -O3, but sometimes with a final it is inlined by O2 too. It doesn't hurt to add those final annotations. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5197 Differential Revision: D14945977 Pulled By: siying fbshipit-source-id: 7003969f9a5f1d5717f0bda503b91d19ba75ed88

-

- 03 4月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: WriteUnPrepared adds a virtual function, MaxUnpreparedSequenceNumber, to ReadCallback, which returns 0 unless WriteUnPrepared is enabled and the transaction has uncommitted data written to the DB. Together with snapshot sequence number, this determines the last sequence that is visible to reads. The patch clarifies the guarantees of the GetIterator API in WriteUnPrepared transactions and make use of that to statically initialize the read callback and thus avoid the virtual call. Furthermore it increases the minimum value for min_uncommitted from 0 to 1 as seq 0 is used only for last level keys that are committed in all snapshots. The following benchmark shows +0.26% higher throughput in seekrandom benchmark. Benchmark: ./db_bench --benchmarks=fillrandom --use_existing_db=0 --num=1000000 --db=/dev/shm/dbbench ./db_bench --benchmarks=seekrandom[X10] --use_existing_db=1 --db=/dev/shm/dbbench --num=1000000 --duration=60 --seek_nexts=100 seekrandom [AVG 10 runs] : 20355 ops/sec; 225.2 MB/sec seekrandom [MEDIAN 10 runs] : 20425 ops/sec; 225.9 MB/sec ./db_bench_lessvirtual3 --benchmarks=seekrandom[X10] --use_existing_db=1 --db=/dev/shm/dbbench --num=1000000 --duration=60 --seek_nexts=100 seekrandom [AVG 10 runs] : 20409 ops/sec; 225.8 MB/sec seekrandom [MEDIAN 10 runs] : 20487 ops/sec; 226.6 MB/sec Pull Request resolved: https://github.com/facebook/rocksdb/pull/5049 Differential Revision: D14366459 Pulled By: maysamyabandeh fbshipit-source-id: ebaff8908332a5ae9af7defeadabcb624be660ef

-

- 28 3月, 2019 1 次提交

-

-

由 Yi Wu 提交于

Summary: Currently `perf_context.user_key_comparison_count` is bump only in `InternalKeyComparator`. For places user comparator is used directly the counter is not bump. Fixing the majority of it. Index iterator and filter code also use user comparator directly and don't bump the counter. It is not fixed in this patch. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5098 Differential Revision: D14603753 Pulled By: siying fbshipit-source-id: 1cd41035644ca9e49b97a51030a5d1e15f5f3cae

-

- 27 3月, 2019 1 次提交

-

-

由 Shi Feng 提交于

Summary: Introduce CPU timers for iterator seek and next operations. Seek counter includes SeekToFirst, SeekToLast and SeekForPrev, w/ the caveat that SeekToLast timer doesn't include some post processing time if upper bound is defined. Pull Request resolved: https://github.com/facebook/rocksdb/pull/5076 Differential Revision: D14525218 Pulled By: fredfsh fbshipit-source-id: 03ba25df3b22b06c072621e4de0eacfa1445f0d9

-

- 22 3月, 2019 1 次提交

-

-

由 Maysam Yabandeh 提交于

Summary: The patch reorders DBIter fields to put 1-byte fields together and let the compiler optimize the memory usage by using less 64-bit allocations for bools and enums. This might have a negative side effect of putting the variables that are accessed together into different cache lines and hence increasing the cache misses. Not sure what benchmark would verify that thought. I ran simple, single-threaded seekrandom benchmarks but the variance in the results is too much to be conclusive. ./db_bench --benchmarks=fillrandom --use_existing_db=0 --num=1000000 --db=/dev/shm/dbbench ./db_bench --benchmarks=seekrandom[X10] --use_existing_db=1 --db=/dev/shm/dbbench --num=1000000 --duration=60 --seek_nexts=100 Pull Request resolved: https://github.com/facebook/rocksdb/pull/5078 Differential Revision: D14562676 Pulled By: maysamyabandeh fbshipit-source-id: 2284655d46e079b6e9a860e94be5defb6f482167

-