Initial commit

Parent

LICENSE

0 → 100644

README.md

0 → 100644

assets/detection_activations.png

0 → 100644

69.1 KB

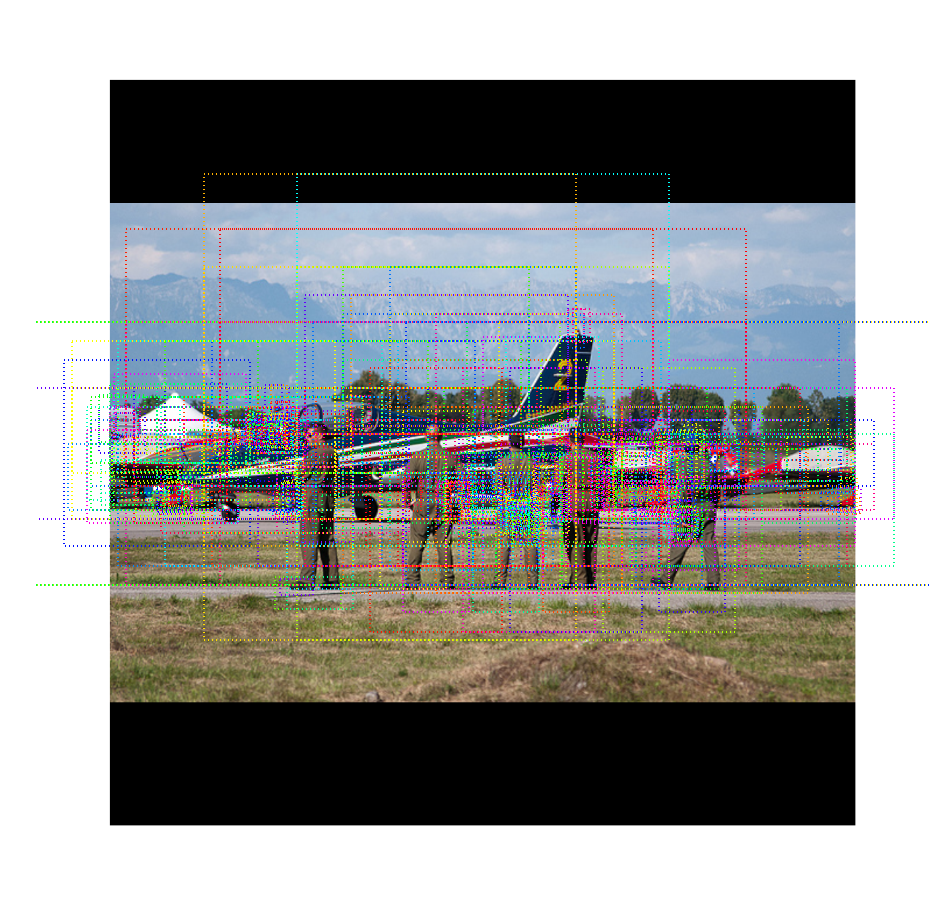

assets/detection_anchors.png

0 → 100644

746.8 KB

assets/detection_final.png

0 → 100644

887.1 KB

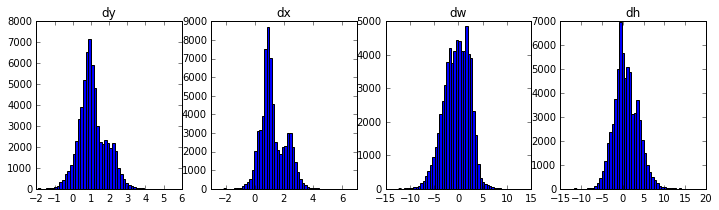

assets/detection_histograms.png

0 → 100644

13.4 KB

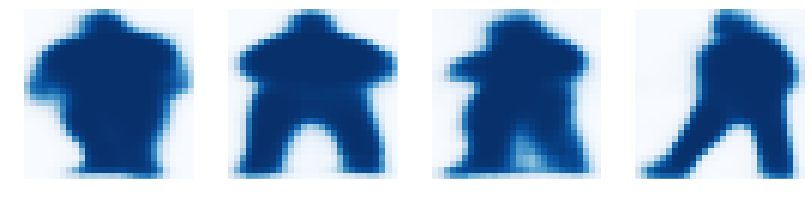

assets/detection_masks.png

0 → 100644

9.7 KB

assets/detection_refinement.png

0 → 100644

702.8 KB

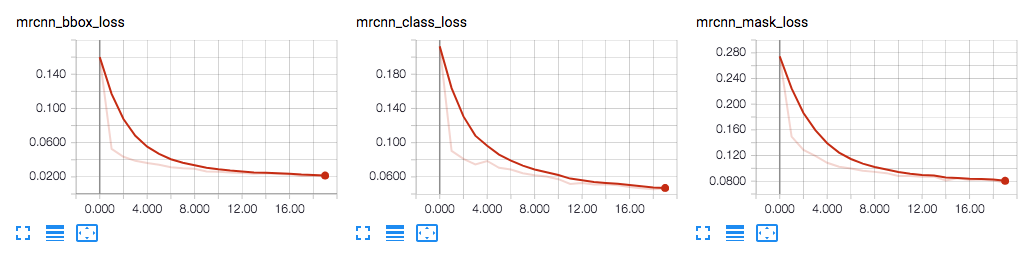

assets/detection_tensorboard.png

0 → 100644

43.1 KB

assets/donuts.png

0 → 100644

871.4 KB

assets/sheep.png

0 → 100644

929.2 KB

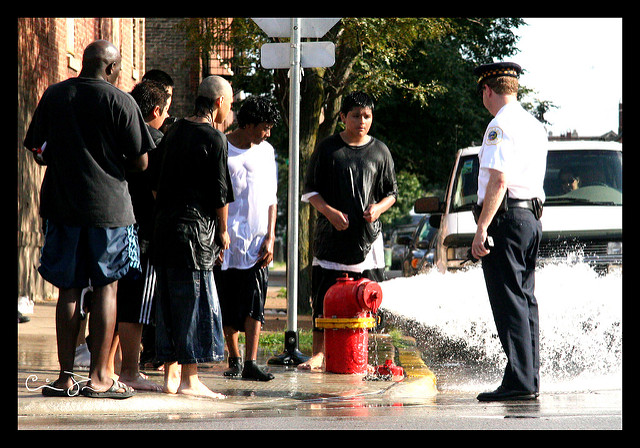

assets/street.png

0 → 100644

917.2 KB

coco.py

0 → 100644

config.py

0 → 100644

demo.ipynb

0 → 100644

This diff is collapsed.

137.6 KB

images/12283150_12d37e6389_z.jpg

0 → 100644

66.8 KB

203.2 KB

130.6 KB

177.0 KB

images/25691390_f9944f61b5_z.jpg

0 → 100644

176.2 KB

images/262985539_1709e54576_z.jpg

0 → 100644

121.9 KB

169.6 KB

157.2 KB

147.0 KB

119.7 KB

94.0 KB

85.8 KB

123.8 KB

223.7 KB

157.2 KB

142.2 KB

212.5 KB

142.4 KB

208.6 KB

219.6 KB

221.1 KB

177.9 KB

145.9 KB

234.3 KB

162.8 KB

237.3 KB

300.7 KB

282.2 KB

inspect_data.ipynb

0 → 100644

This diff is collapsed.

inspect_model.ipynb

0 → 100644

This diff is collapsed.

inspect_weights.ipynb

0 → 100644

This diff is collapsed.

model.py

0 → 100644

This diff is collapsed.

parallel_model.py

0 → 100644

shapes.py

0 → 100644

train_shapes.ipynb

0 → 100644

This diff is collapsed.

utils.py

0 → 100644

This diff is collapsed.

visualize.py

0 → 100644

This diff is collapsed.