Merge branch 'refactor_docs' into 'master'

Refactor docs See merge request !644

Showing

docs/introduction.rst

0 → 100644

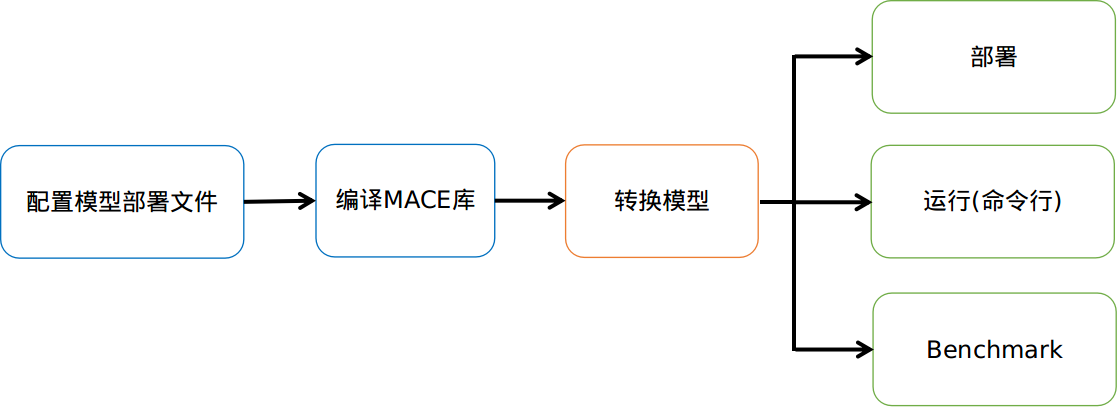

docs/mace-work-flow-zh.png

0 → 100644

37.7 KB

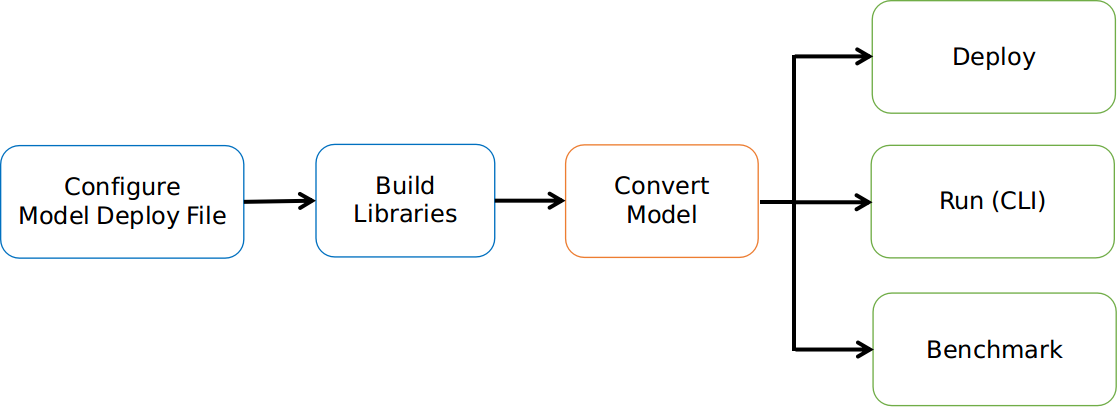

docs/mace-work-flow.png

0 → 100644

34.7 KB

docs/user_guide/basic_usage.rst

0 → 100644