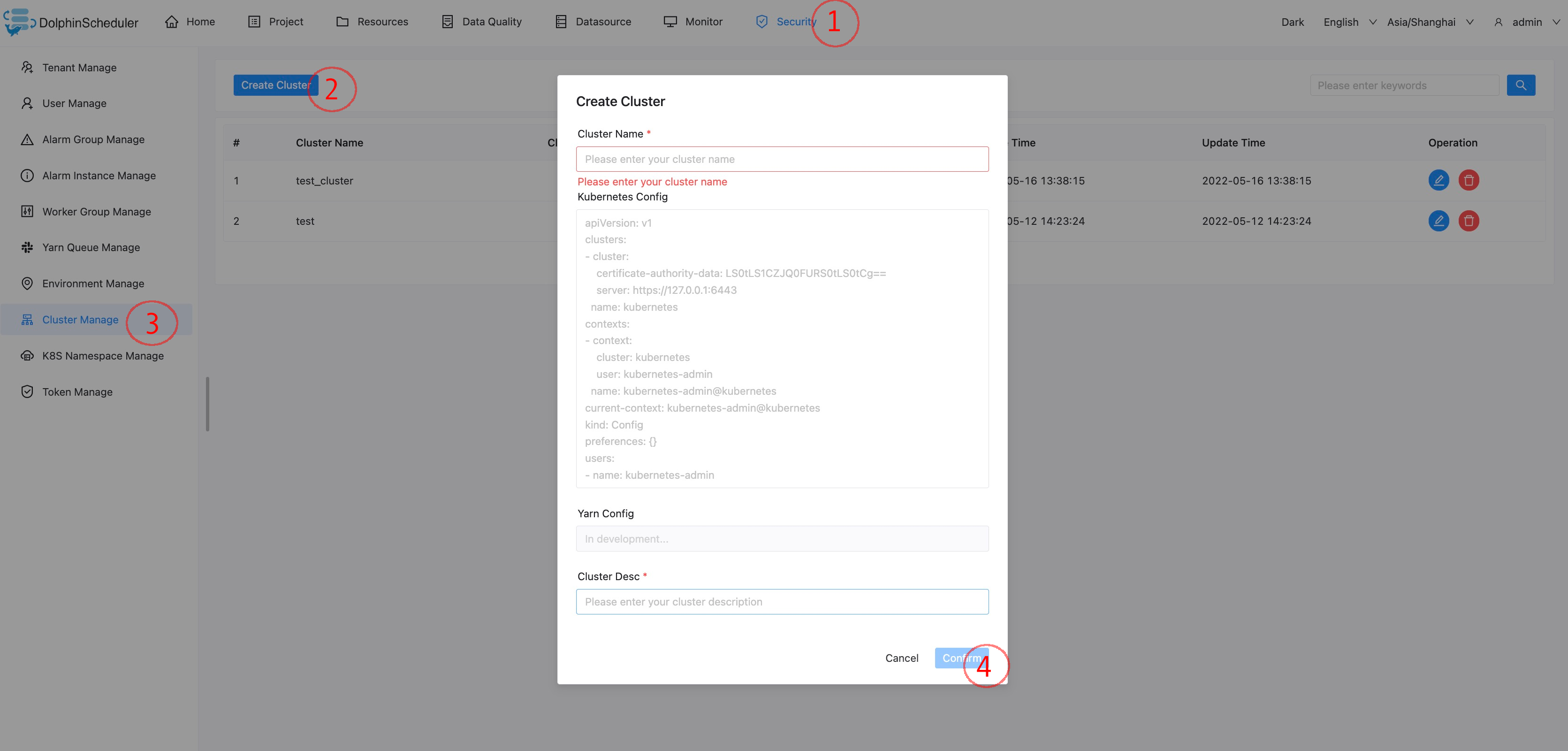

[Feature][Improvement] Support multi cluster environments - k8s type (#10096)

* service code
* [Feature][UI] Add front-end for cluster manage
* fix e2e
* remove comment on cluster controller
* doc
* img
* setting e2e.yaml
* test
* rerun e2e
* fix bug from comment
* Update index.tsx: use Nspace instead of css.
* Update index.tsx: Remove the style.
* Delete index.module.scss: Remove the useless file.

Co-authored-by: qianl4 <qianl4@cicso.com>
Co-authored-by: William Tong <weitong@cisco.com>
Co-authored-by: Amy0104 <97265214+Amy0104@users.noreply.github.com>
