Fix conflict with gpu profiling docs

doc/optimization/index.rst

0 → 100644

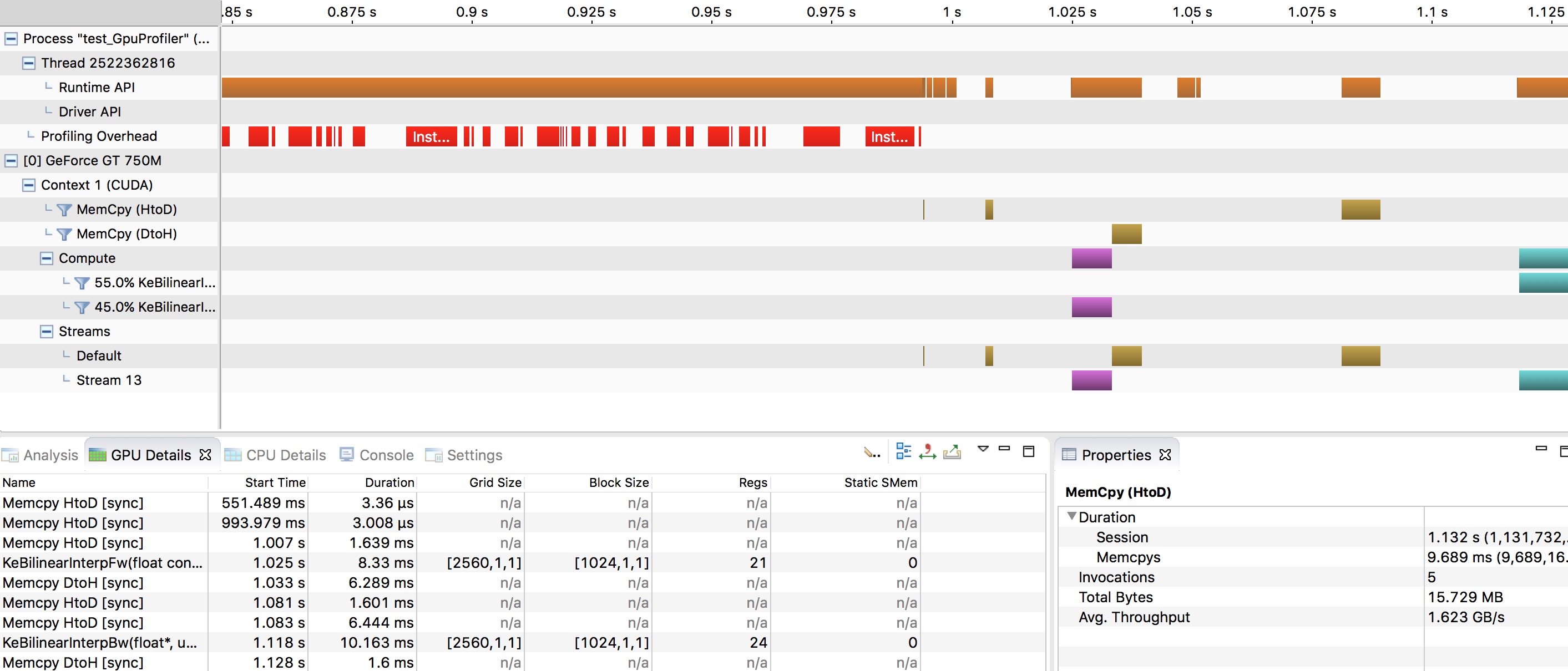

doc/optimization/nvvp1.png

0 → 100644

416.1 KB

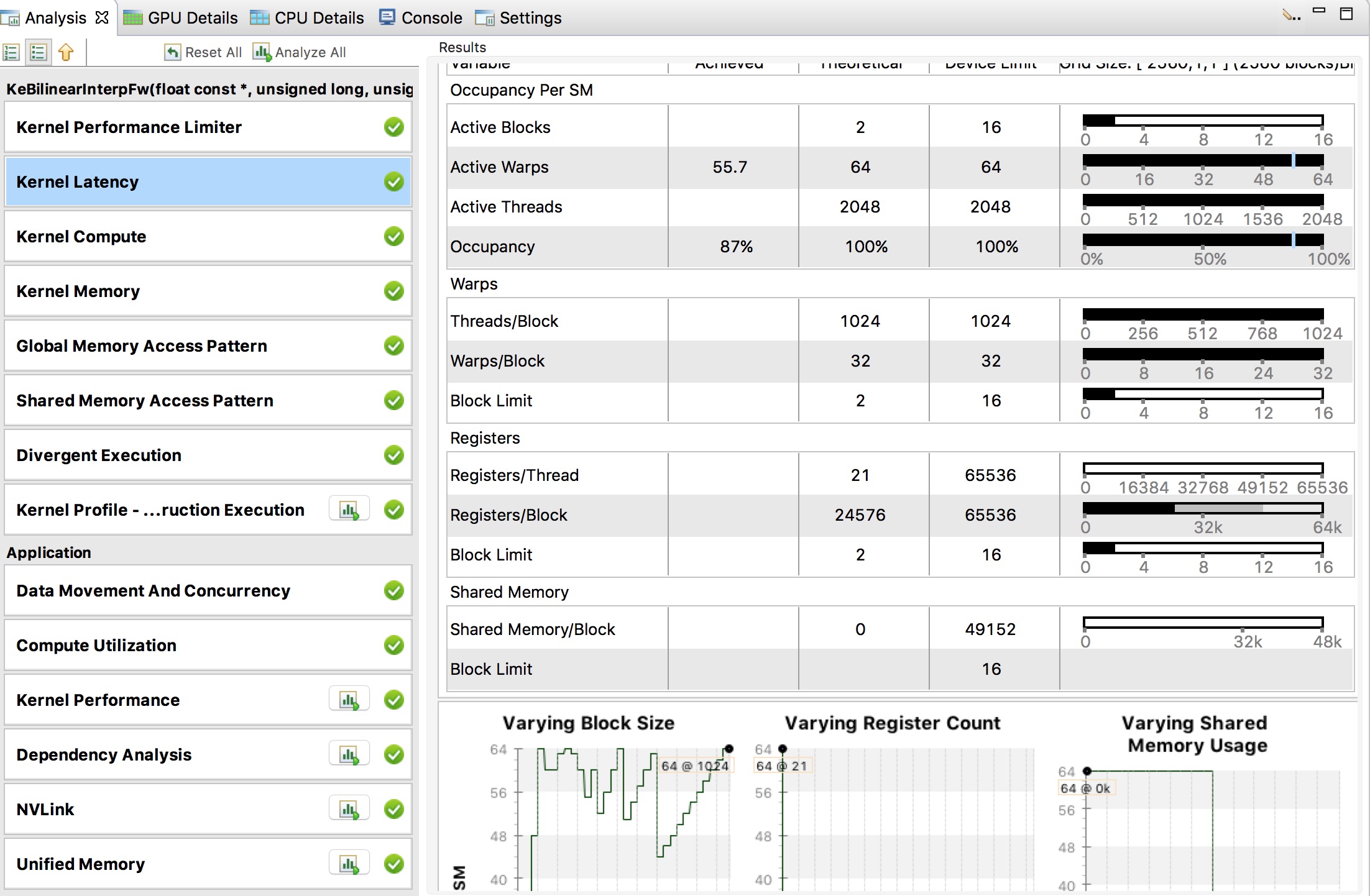

doc/optimization/nvvp2.png

0 → 100644

483.5 KB

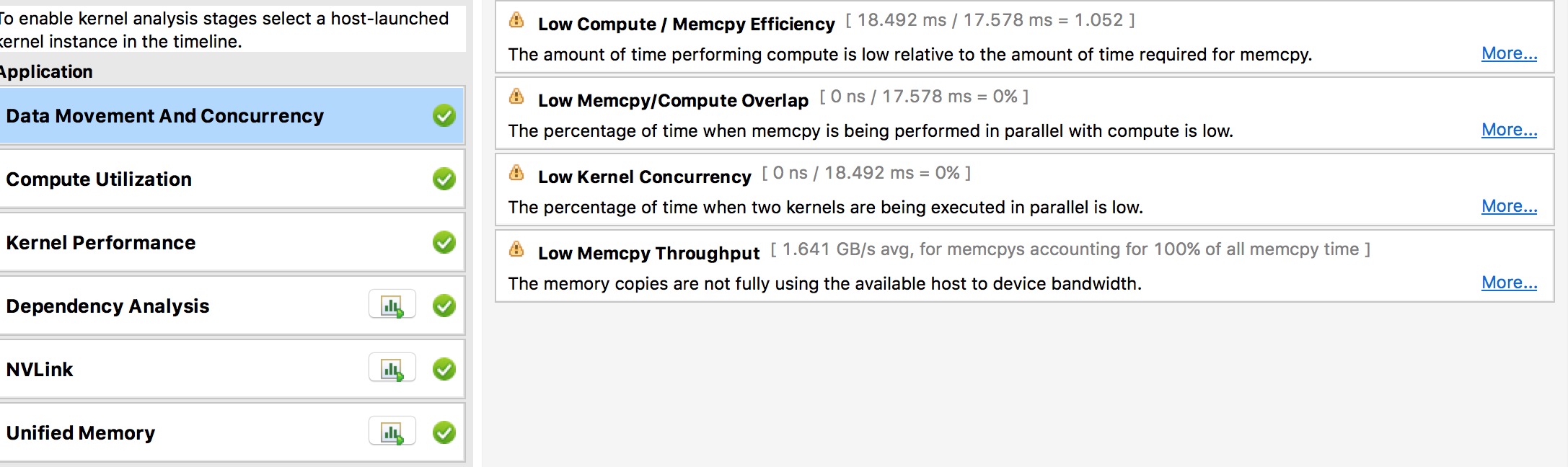

doc/optimization/nvvp3.png

0 → 100644

247.8 KB

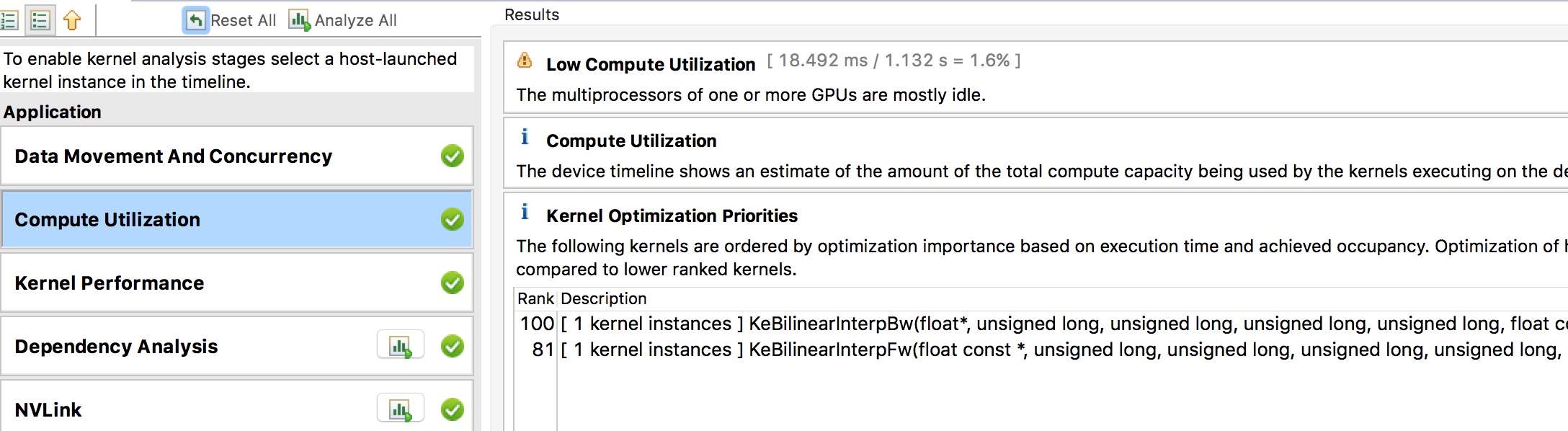

doc/optimization/nvvp4.png

0 → 100644

276.6 KB