Merge branch 'develop' of github.com:baidu/Paddle into feature/get_places

doc/design/backward.md

0 → 100644

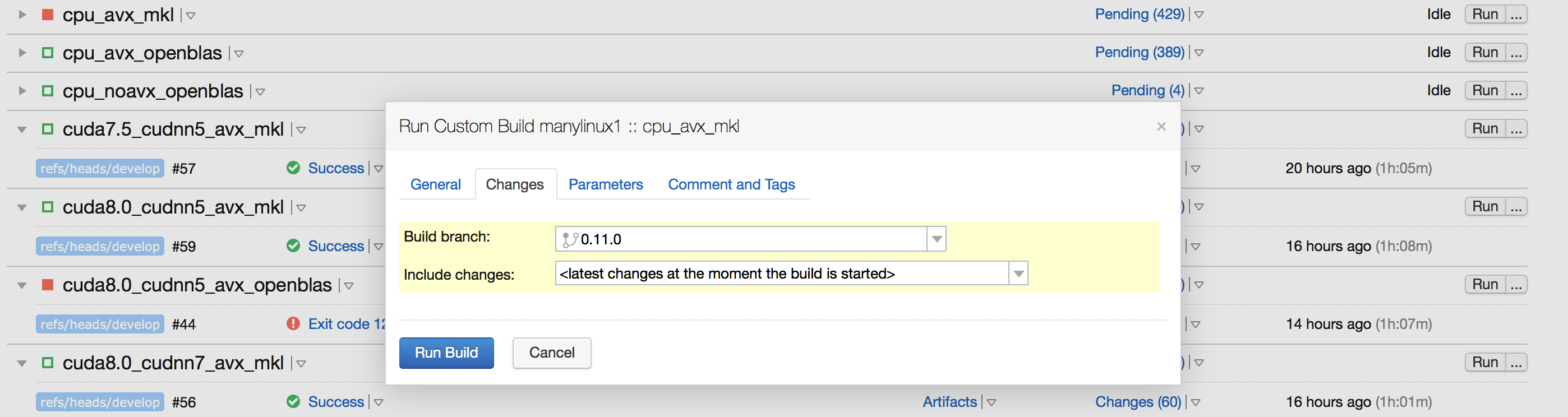

doc/design/ci_build_whl.png

0 → 100644

File moved

File moved

File moved

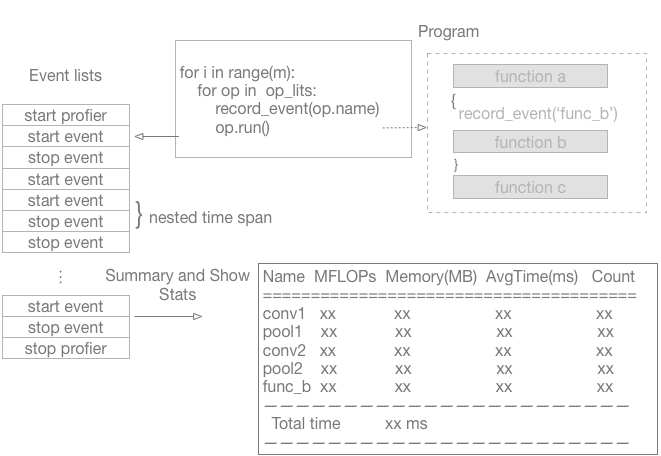

doc/design/images/profiler.png

0 → 100644

doc/design/memory_optimization.md

0 → 100644

doc/design/mkl/mkldnn_fluid.md

0 → 100644

doc/design/profiler.md

0 → 100644

paddle/framework/backward.md

Deleted

100644 → 0

paddle/framework/data_transform.h

0 → 100644

paddle/framework/op_kernel_type.h

0 → 100644

paddle/framework/tensor_util.cc

0 → 100644

paddle/framework/tensor_util.cu

0 → 120000

paddle/framework/threadpool.cc

0 → 100644

paddle/framework/threadpool.h

0 → 100644

paddle/inference/CMakeLists.txt

0 → 100644

paddle/inference/example.cc

0 → 100644

paddle/inference/inference.cc

0 → 100644

paddle/inference/inference.h

0 → 100644

paddle/operators/norm_op.cc

0 → 100644

paddle/operators/norm_op.cu

0 → 100644

paddle/operators/norm_op.h

0 → 100644

paddle/operators/tensor.save

Deleted

100644 → 0

paddle/platform/for_range.h

0 → 100644

paddle/platform/mkldnn_helper.h

0 → 100644

paddle/platform/profiler.cc

0 → 100644

paddle/platform/profiler.h

0 → 100644

paddle/platform/profiler_test.cc

0 → 100644
