Merge branch 'develop' of github.com:baidu/Paddle into feature/make_lod_a_share_ptr

Showing

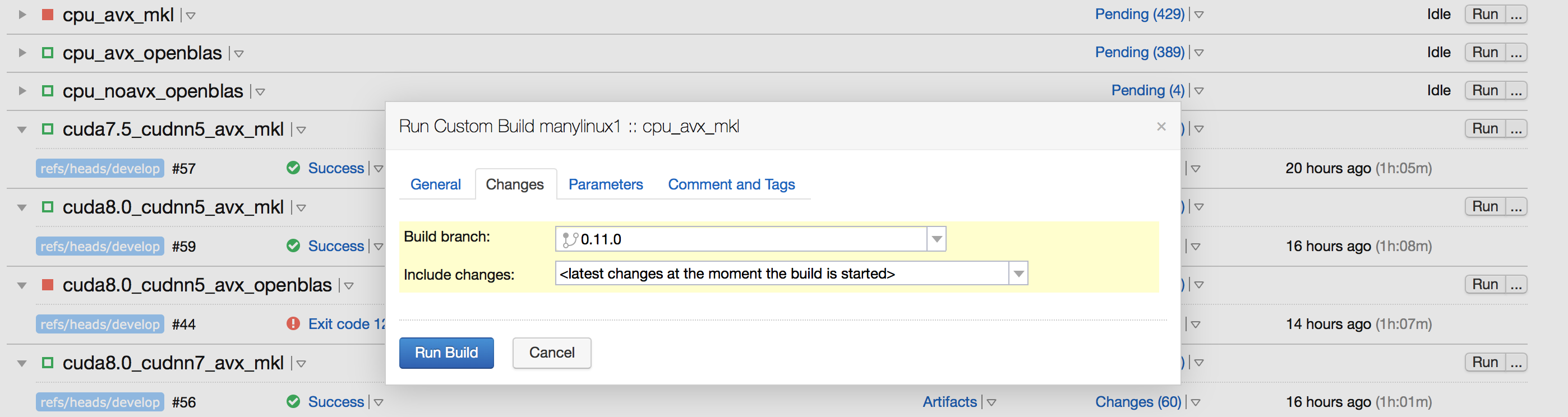

doc/design/ci_build_whl.png

0 → 100644

280.4 KB

paddle/operators/tensor.save

已删除

100644 → 0

文件已删除

paddle/platform/mkldnn_helper.h

0 → 100644