更新了爬虫和数据分析部分的文档

Showing

Day36-40/39.数据库相关知识.md

已删除

100644 → 0

Day36-40/40.大数据平台和HiveSQL.md

0 → 100644

Day61-65/64.使用Selenium抓取网页动态内容.md

0 → 100644

Day61-65/65.爬虫框架Scrapy简介.md

0 → 100644

Day61-65/code/asyncio01.py

已删除

100644 → 0

Day61-65/code/asyncio02.py

已删除

100644 → 0

Day61-65/code/coroutine01.py

已删除

100644 → 0

Day61-65/code/coroutine02.py

已删除

100644 → 0

Day61-65/code/example01.py

已删除

100644 → 0

Day61-65/code/example02.py

已删除

100644 → 0

Day61-65/code/example03.py

已删除

100644 → 0

Day61-65/code/example04.py

已删除

100644 → 0

Day61-65/code/example05.py

已删除

100644 → 0

Day61-65/code/example06.py

已删除

100644 → 0

Day61-65/code/example07.py

已删除

100644 → 0

Day61-65/code/example08.py

已删除

100644 → 0

Day61-65/code/example09.py

已删除

100644 → 0

Day61-65/code/example10.py

已删除

100644 → 0

Day61-65/code/example10a.py

已删除

100644 → 0

Day61-65/code/example11.py

已删除

100644 → 0

Day61-65/code/example11a.py

已删除

100644 → 0

Day61-65/code/example12.py

已删除

100644 → 0

Day61-65/code/generator01.py

已删除

100644 → 0

Day61-65/code/generator02.py

已删除

100644 → 0

Day61-65/code/guido.jpg

已删除

100644 → 0

56.7 KB

Day61-65/code/main.py

已删除

100644 → 0

Day61-65/code/main_redis.py

已删除

100644 → 0

Day61-65/code/myutils.py

已删除

100644 → 0

Day61-65/code/tesseract.png

已删除

100644 → 0

48.8 KB

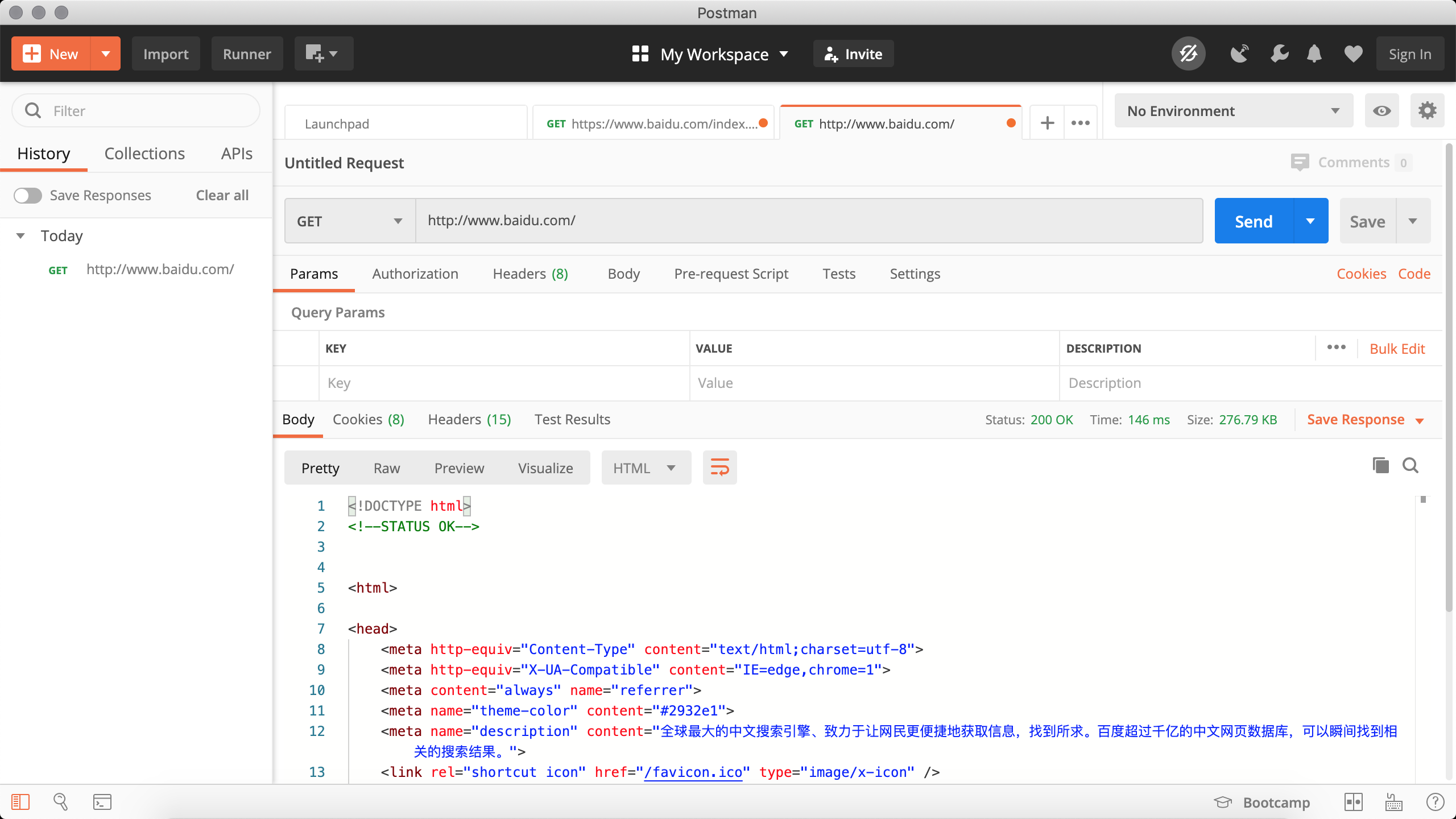

Day61-65/res/postman.png

已删除

100644 → 0

201.3 KB

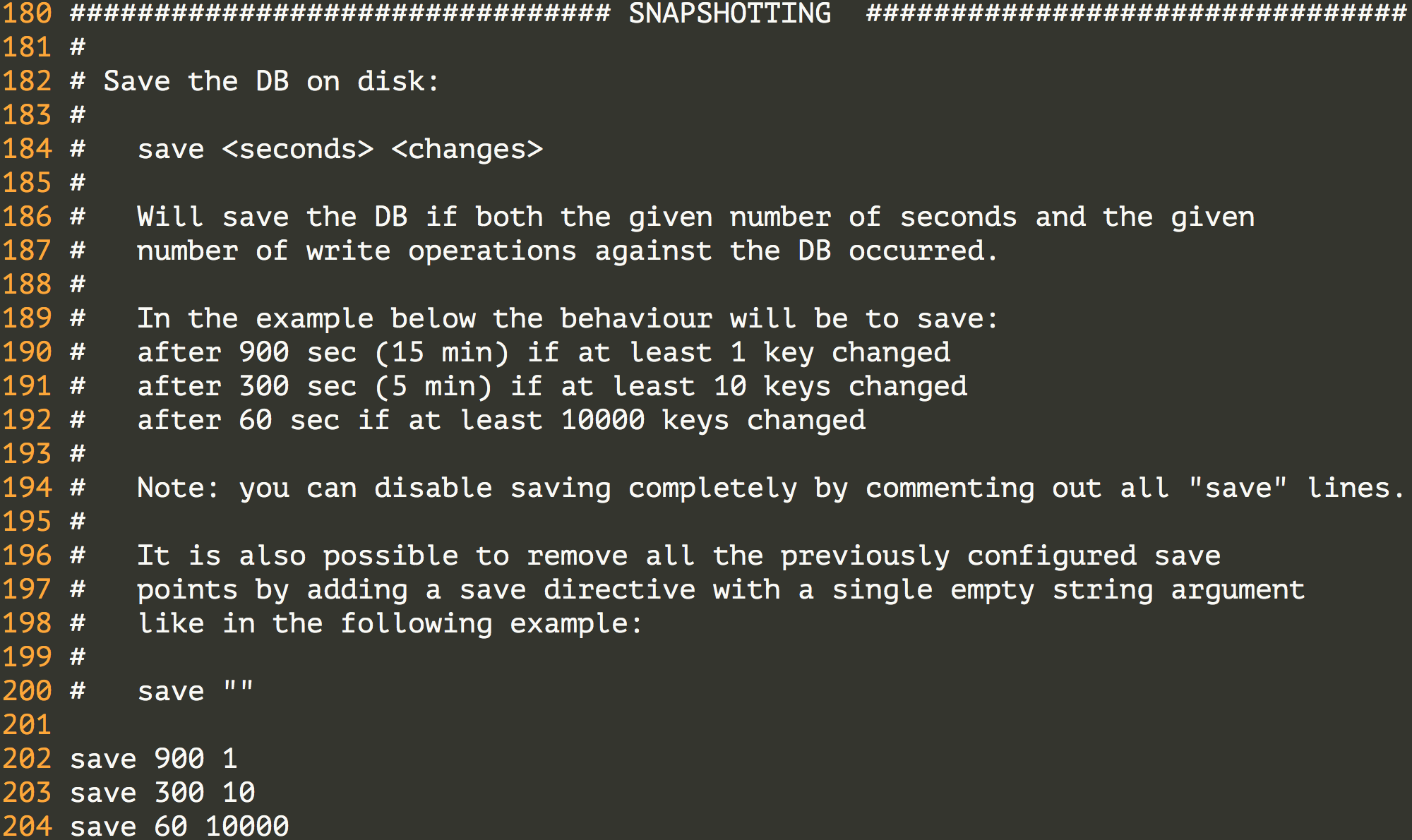

Day61-65/res/redis-save.png

已删除

100644 → 0

133.0 KB

Day61-65/res/tesseract.gif

已删除

100644 → 0

775.3 KB

Day66-80/80.数据分析方法论.md

已删除

100644 → 0