Open source MnasFPN and minor fixes to OD API (#8484)

310447280 by lzc:

Internal change

310420845 by Zhichao Lu:

Open source the internal Context RCNN code.

--

310362339 by Zhichao Lu:

Internal change

310259448 by lzc:

Update required TF version for OD API.

--

310252159 by Zhichao Lu:

Port patch_ops_test to TF1/TF2 as TPUs.

--

310247180 by Zhichao Lu:

Ignore keypoint heatmap loss in the regions/bounding boxes with target keypoint

class but no valid keypoint annotations.

--

310178294 by Zhichao Lu:

Open source MnasFPN

https://arxiv.org/abs/1912.01106

--

310094222 by lzc:

Internal changes.

--

310085250 by lzc:

Internal Change.

--

310016447 by huizhongc:

Remove unrecognized classes from labeled_classes.

--

310009470 by rathodv:

Mark batcher.py as TF1 only.

--

310001984 by rathodv:

Update core/preprocessor.py to be compatible with TF1/TF2.

--

309455035 by Zhichao Lu:

Makes the freezable_batch_norm_test run w/ v2 behavior.

The main change is that in v2, updates happen right away when running batchnorm in training mode. So, we need to restore the weights between batchnorm calls to make sure the numerical checks all start from the same place.
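A minimal sketch of why the weights have to be restored, in plain Python. `ToyBatchNorm` is a hypothetical stand-in written for this illustration (it is not the FreezableBatchNorm layer under test) and tracks only a moving mean:

```python
class ToyBatchNorm:
    """Hypothetical stand-in for a batch norm layer; tracks only a moving mean."""

    def __init__(self, momentum=0.9):
        self.momentum = momentum
        self.moving_mean = 0.0

    def __call__(self, values, training):
        batch_mean = sum(values) / len(values)
        if training:
            # v2-style behavior: the moving statistic is updated during the call.
            self.moving_mean = (self.momentum * self.moving_mean
                                + (1.0 - self.momentum) * batch_mean)
            return [v - batch_mean for v in values]
        return [v - self.moving_mean for v in values]

    def get_weights(self):
        return [self.moving_mean]

    def set_weights(self, weights):
        (self.moving_mean,) = weights


layer = ToyBatchNorm()
initial_weights = layer.get_weights()

layer([10.0, 12.0], training=True)   # mutates moving_mean immediately
mean_after_first_call = layer.moving_mean

layer.set_weights(initial_weights)   # restore before the next numerical check
layer([10.0, 12.0], training=True)
mean_after_restore = layer.moving_mean
```

Without the `set_weights` call, the second training call would start from the already-updated moving mean, so the two checks would not be comparable.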

--

309425881 by Zhichao Lu:

Make TF1/TF2 optimizer builder tests explicit.

--

309408646 by Zhichao Lu:

Make dataset builder tests TF1 and TF2 compatible.

--

309246305 by Zhichao Lu:

Added the functionality of combining the person keypoints and object detection

annotations in the binary that converts the COCO raw data to TfRecord.

--

309125076 by Zhichao Lu:

Convert target_assigner_utils to TF1/TF2.

--

308966359 by huizhongc:

Support SSD training with partially labeled groundtruth.

--

308937159 by rathodv:

Update core/target_assigner.py to be compatible with TF1/TF2.

--

308774302 by Zhichao Lu:

Internal

--

308732860 by rathodv:

Make core/prefetcher.py compatible with TF1 only.

--

308726984 by rathodv:

Update core/multiclass_nms_test.py to be TF1/TF2 compatible.

--

308714718 by rathodv:

Update core/region_similarity_calculator_test.py to be TF1/TF2 compatible.

--

308707960 by rathodv:

Update core/minibatch_sampler_test.py to be TF1/TF2 compatible.

--

308700595 by rathodv:

Update core/losses_test.py to be TF1/TF2 compatible and remove losses_test_v2.py

--

308361472 by rathodv:

Update core/matcher_test.py to be TF1/TF2 compatible.

--

308335846 by Zhichao Lu:

Updated the COCO evaluation logic and populated the groundtruth area

information through. This change matches the groundtruth format expected by the

COCO keypoint evaluation.

--

308256924 by rathodv:

Update core/keypoints_ops_test.py to be TF1/TF2 compatible.

--

308256826 by rathodv:

Update class_agnostic_nms_test.py to be TF1/TF2 compatible.

--

308256112 by rathodv:

Update box_list_ops_test.py to be TF1/TF2 compatible.

--

308159360 by Zhichao Lu:

Internal change

308145008 by Zhichao Lu:

Added 'image/class/confidence' field in the TFExample decoder.

--

307651875 by rathodv:

Refactor core/box_list.py to support TF1/TF2.

--

307651798 by rathodv:

Modify box_coder.py base class to work with TF1/TF2

--

307651652 by rathodv:

Refactor core/balanced_positive_negative_sampler.py to support TF1/TF2.

--

307651571 by rathodv:

Modify BoxCoders tests to use test_case:execute method to allow testing with TF1.X and TF2.X

--

307651480 by rathodv:

Modify Matcher tests to use test_case:execute method to allow testing with TF1.X and TF2.X

--

307651409 by rathodv:

Modify AnchorGenerator tests to use test_case:execute method to allow testing with TF1.X and TF2.X

--

307651314 by rathodv:

Refactor model_builder to support TF1 or TF2 models based on TensorFlow version.

--

307092053 by Zhichao Lu:

Use manager to save checkpoint.

--

307071352 by ronnyvotel:

Fixing keypoint visibilities. Now by default, the visibility is marked True if the keypoint is labeled (regardless of whether it is visible or not).

Also, if visibilities are not present in the dataset, they will be created based on whether the keypoint coordinates are finite (vis = True) or NaN (vis = False).
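The fallback rule for missing visibilities could be sketched as follows. This is a plain-Python illustration; `resolve_visibilities` and its list-of-tuples layout are hypothetical and do not reflect the API's actual tensor code:

```python
import math


def resolve_visibilities(keypoints, visibilities=None):
    """Return one bool per keypoint.

    keypoints: list of (y, x) coordinates for a single instance.
    visibilities: optional list of bools taken from the dataset.
    """
    if visibilities is not None:
        # The dataset provided visibilities, so use them unchanged.
        return list(visibilities)
    # Otherwise derive them: finite coordinates -> True, NaN -> False.
    return [math.isfinite(y) and math.isfinite(x) for y, x in keypoints]


nan = float("nan")
keypoints = [(0.2, 0.4), (nan, nan), (0.7, 0.1)]
derived = resolve_visibilities(keypoints)
```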

--

307069557 by Zhichao Lu:

Internal change to add few fields related to postprocessing parameters in

center_net.proto and populate those parameters to the keypoint postprocessing

functions.

--

307012091 by Zhichao Lu:

Make Adam Optimizer's epsilon proto configurable.

Potential issue: tf.compat.v1's AdamOptimizer has a default epsilon of 1e-08 ([doc-link](https://www.tensorflow.org/api_docs/python/tf/compat/v1/train/AdamOptimizer)) whereas tf.keras's Adam optimizer has a default epsilon of 1e-07 ([doc-link](https://www.tensorflow.org/api_docs/python/tf/keras/optimizers/Adam))
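To give a feel for why the default matters, here is a small sketch of the first update of textbook Adam on a scalar gradient. It is an assumption-laden illustration: `adam_first_step` is a hypothetical helper, and the TensorFlow optimizers may apply epsilon in a slightly different place than this formulation does.

```python
import math


def adam_first_step(grad, lr=0.001, beta1=0.9, beta2=0.999, epsilon=1e-8):
    """Size of the first textbook Adam update for a scalar gradient."""
    m = (1.0 - beta1) * grad
    v = (1.0 - beta2) * grad * grad
    m_hat = m / (1.0 - beta1)  # bias correction at step t = 1
    v_hat = v / (1.0 - beta2)
    return lr * m_hat / (math.sqrt(v_hat) + epsilon)


tiny_grad = 1e-7  # same order of magnitude as epsilon
step_v1_default = adam_first_step(tiny_grad, epsilon=1e-8)
step_keras_default = adam_first_step(tiny_grad, epsilon=1e-7)
```

For gradients much larger than epsilon the two defaults give nearly identical steps; the difference only shows up when gradients are very small, as in this example.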

--

306858598 by Zhichao Lu:

Internal changes to update the CenterNet model:

1) Modified eval job loss computation to avoid averaging over batches with zero loss.

2) Updated CenterNet keypoint heatmap target assigner to apply box size to heatmap Gaussian standard deviation.

3) Updated the CenterNet meta arch keypoint losses computation to apply weights outside of loss function.

--

306731223 by jonathanhuang:

Internal change.

--

306549183 by rathodv:

Internal Update.

--

306542930 by rathodv:

Internal Update

--

306322697 by rathodv:

Internal.

--

305345036 by Zhichao Lu:

Adding COCO Camera Traps Json to tf.Example beam code

--

304104869 by lzc:

Internal changes.

--

304068971 by jonathanhuang:

Internal change.

--

304050469 by Zhichao Lu:

Internal change.

--

303880642 by huizhongc:

Support parsing partially labeled groundtruth.

--

303841743 by Zhichao Lu:

Deprecate nms_on_host in SSDMetaArch.

--

303803204 by rathodv:

Internal change.

--

303793895 by jonathanhuang:

Internal change.

--

303467631 by rathodv:

Py3 update for detection inference test.

--

303444542 by rathodv:

Py3 update to metrics module

--

303421960 by rathodv:

Update json_utils to python3.

--

302787583 by ronnyvotel:

Coco results generator for submission to the coco test server.

--

302719091 by Zhichao Lu:

Internal change to add the ResNet50 image feature extractor for CenterNet model.

--

302116230 by Zhichao Lu:

Added the functions to overlay the heatmaps with images in visualization util

library.

--

301888316 by Zhichao Lu:

Fix checkpoint_filepath not defined error.

--

301840312 by ronnyvotel:

Adding keypoint_scores to visualizations.

--

301683475 by ronnyvotel:

Introducing the ability to preprocess `keypoint_visibilities`.

Some data augmentation ops such as random crop can filter instances and keypoints. It's important to also filter keypoint visibilities, so that the groundtruth tensors are always in alignment.
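A sketch of the alignment requirement, using plain Python lists in place of tensors. `filter_instances` is a hypothetical helper for illustration, not a function from the preprocessor:

```python
def filter_instances(keep_mask, boxes, keypoints, keypoint_visibilities):
    """Apply one per-instance mask to every per-instance groundtruth list."""

    def select(items):
        return [item for item, keep in zip(items, keep_mask) if keep]

    return select(boxes), select(keypoints), select(keypoint_visibilities)


boxes = [[0.0, 0.0, 0.5, 0.5], [0.6, 0.6, 0.9, 0.9]]
keypoints = [[(0.1, 0.1), (0.4, 0.4)], [(0.7, 0.7), (0.8, 0.8)]]
visibilities = [[True, False], [True, True]]
keep_mask = [False, True]  # e.g. the first box fell outside a random crop

boxes, keypoints, visibilities = filter_instances(
    keep_mask, boxes, keypoints, visibilities)
```

Filtering boxes and keypoints but not visibilities would leave a visibility list with one more row than there are instances, which is the misalignment this change avoids.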

--

301532344 by Zhichao Lu:

Don't use tf.divide since "Quantization not yet supported for op: DIV"

--

301480348 by ronnyvotel:

Introducing keypoint evaluation into model lib v2.

Also, making some fixes to coco keypoint evaluation.

--

301454018 by Zhichao Lu:

Added the image summary to visualize the train/eval input images and eval's

prediction/groundtruth side-by-side image.

--

301317527 by Zhichao Lu:

Updated the random_absolute_pad_image function in the preprocessor library to

support the keypoints argument.

--

301300324 by Zhichao Lu:

Apply name change (experimental_run_v2 -> run) for all callers in TensorFlow.

--

301297115 by ronnyvotel:

Utility function for setting keypoint visibilities based on keypoint coordinates.

--

301248885 by Zhichao Lu:

Allow use of MultiWorkerMirroredStrategy (MWMS) by adding checkpoint handling with temporary directories in model_lib_v2. Added missing WeakKeyDictionary cfer_fn_cache field in CollectiveAllReduceStrategyExtended.

--

301224559 by Zhichao Lu:

1) Fixes model_lib to also use keypoints while preparing model groundtruth.

2) Tests model_lib with newly added keypoint metrics config.

--

300836556 by Zhichao Lu:

Internal changes to add keypoint estimation parameters in CenterNet proto.

--

300795208 by Zhichao Lu:

Updated the eval_util library to populate the keypoint groundtruth to

eval_dict.

--

299474766 by Zhichao Lu:

Modifies eval_util to create Keypoint Evaluator objects when configured in eval config.

--

299453920 by Zhichao Lu:

Add swish activation as a hyperparams option.

--

299240093 by ronnyvotel:

Keypoint postprocessing for CenterNetMetaArch.

--

299176395 by Zhichao Lu:

Internal change.

--

299135608 by Zhichao Lu:

Internal changes to refactor the CenterNet model in preparation for keypoint estimation tasks.

--

298915482 by Zhichao Lu:

Make dataset_builder aware of input_context for distributed training.

--

298713595 by Zhichao Lu:

Handling data with negative size boxes.

--

298695964 by Zhichao Lu:

Expose change_coordinate_frame as a config parameter; fix multiclass_scores optional field.

--

298492150 by Zhichao Lu:

Rename optimizer_builder_test_v2.py -> optimizer_builder_v2_test.py

--

298476471 by Zhichao Lu:

Internal changes to support CenterNet keypoint estimation.

--

298365851 by ronnyvotel:

Fixing a bug where groundtruth_keypoint_weights were being padded with a dynamic dimension.

--

297843700 by Zhichao Lu:

Internal change.

--

297706988 by lzc:

Internal change.

--

297705287 by ronnyvotel:

Creating the "snapping" behavior in CenterNet, where regressed keypoints are refined with updated candidate keypoints from a heatmap.
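One way to picture snapping is the nearest-candidate rule below. It is only a sketch under stated assumptions: `snap_keypoints`, the single shared candidate list, and the `max_distance` threshold are all hypothetical, and the real CenterNet postprocessing may select and score candidates differently.

```python
import math


def snap_keypoints(regressed, candidates, max_distance):
    """Replace each regressed (y, x) by its nearest candidate within max_distance."""
    snapped = []
    for point in regressed:
        nearest = min(candidates, key=lambda c: math.dist(point, c), default=None)
        if nearest is not None and math.dist(point, nearest) <= max_distance:
            snapped.append(nearest)  # refine with the heatmap candidate
        else:
            snapped.append(point)    # no candidate close enough; keep regression
    return snapped


regressed = [(10.0, 10.0), (40.0, 40.0)]
candidates = [(11.0, 10.5), (80.0, 80.0)]  # e.g. heatmap peak locations
snapped = snap_keypoints(regressed, candidates, max_distance=3.0)
```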

--

297700447 by Zhichao Lu:

Improve checkpoint checking logic with TF2 loop.

--

297686094 by Zhichao Lu:

Convert "import tensorflow as tf" to "import tensorflow.compat.v1".

--

297670468 by lzc:

Internal change.

--

297241327 by Zhichao Lu:

Convert "import tensorflow as tf" to "import tensorflow.compat.v1".

--

297205959 by Zhichao Lu:

Internal changes to refactor the CenterNet object detection target assigner into a separate library.

--

297143806 by Zhichao Lu:

Convert "import tensorflow as tf" to "import tensorflow.compat.v1".

--

297129625 by Zhichao Lu:

Explicitly replace "import tensorflow" with "tensorflow.compat.v1" for TF2.x migration

--

297117070 by Zhichao Lu:

Explicitly replace "import tensorflow" with "tensorflow.compat.v1" for TF2.x migration

--

297030190 by Zhichao Lu:

Add configuration options for visualizing keypoint edges

--

296359649 by Zhichao Lu:

Support DepthwiseConv2dNative (of separable conv) in weight equalization loss.

--

296290582 by Zhichao Lu:

Internal change.

--

296093857 by Zhichao Lu:

Internal changes to add general target assigner utilities.

--

295975116 by Zhichao Lu:

Fix visualize_boxes_and_labels_on_image_array to show max_boxes_to_draw correctly.

--

295819711 by Zhichao Lu:

Adds a flag to visualize_boxes_and_labels_on_image_array to skip the drawing of axis aligned bounding boxes.

--

295811929 by Zhichao Lu:

Keypoint support in random_square_crop_by_scale.

--

295788458 by rathodv:

Remove unused checkpoint to reduce repo size on github

--

295787184 by Zhichao Lu:

Enable visualization of edges between keypoints

--

295763508 by Zhichao Lu:

[Context RCNN] Add an option to enable / disable cropping feature in the post

process step in the meta architecture.

--

295605344 by Zhichao Lu:

internal change.

--

294926050 by ronnyvotel:

Adding per-keypoint groundtruth weights. These weights are intended to be used as multipliers in a keypoint loss function.

Groundtruth keypoint weights are constructed as follows:

- Initialize the weight for each keypoint type based on user-specified weights in the input_reader proto

- Mask out (i.e. set to zero) the weights of all keypoints that are not visible.
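The two steps above can be sketched in plain Python. `keypoint_weights` and the nested-list layout are hypothetical illustrations; the actual implementation operates on tensors.

```python
def keypoint_weights(per_type_weights, visibilities):
    """Build per-keypoint groundtruth weights.

    per_type_weights: one float per keypoint type (user-specified).
    visibilities: [num_instances][num_keypoints] bools.
    Returns a [num_instances][num_keypoints] list of floats.
    """
    return [
        # Start from the per-type weight, then zero out keypoints that are not visible.
        [w if visible else 0.0 for w, visible in zip(per_type_weights, instance)]
        for instance in visibilities
    ]


per_type_weights = [1.0, 2.0, 0.5]
visibilities = [[True, False, True], [False, True, True]]
weights = keypoint_weights(per_type_weights, visibilities)
```

A keypoint loss can then multiply its per-keypoint terms by `weights`, so keypoints that are not visible contribute nothing.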

--

294829061 by lzc:

Internal change.

--

294566503 by Zhichao Lu:

Changed internal CenterNet Model configuration.

--

294346662 by ronnyvotel:

Using NaN values in keypoint coordinates that are not visible.

--

294333339 by Zhichao Lu:

Change experimental_distribute_dataset -> experimental_distribute_dataset_from_function

--

293928752 by Zhichao Lu:

Internal change

--

293909384 by Zhichao Lu:

Add capabilities to train 1024x1024 CenterNet models.

--

293637554 by ronnyvotel:

Adding keypoint visibilities to TfExampleDecoder.

--

293501558 by lzc:

Internal change.

--

293252851 by Zhichao Lu:

Change tf.gfile.GFile to tf.io.gfile.GFile.

--

292730217 by Zhichao Lu:

Internal change.

--

292456563 by lzc:

Internal changes.

--

292355612 by Zhichao Lu:

Use tf.gather and tf.scatter_nd instead of matrix ops.

--

292245265 by rathodv:

Internal

--

291989323 by richardmunoz:

Refactor out building a DataDecoder from building a tf.data.Dataset.

--

291950147 by Zhichao Lu:

Flip bounding boxes in arbitrary shaped tensors.

--

291401052 by huizhongc:

Fix multiscale grid anchor generator to allow fully convolutional inference. When exporting model with identity_resizer as image_resizer, there is an incorrect box offset on the detection results. We add the anchor offset to address this problem.

--

291298871 by Zhichao Lu:

Py3 compatibility changes.

--

290957957 by Zhichao Lu:

Hourglass feature extractor for CenterNet.

--

290564372 by Zhichao Lu:

Internal change.

--

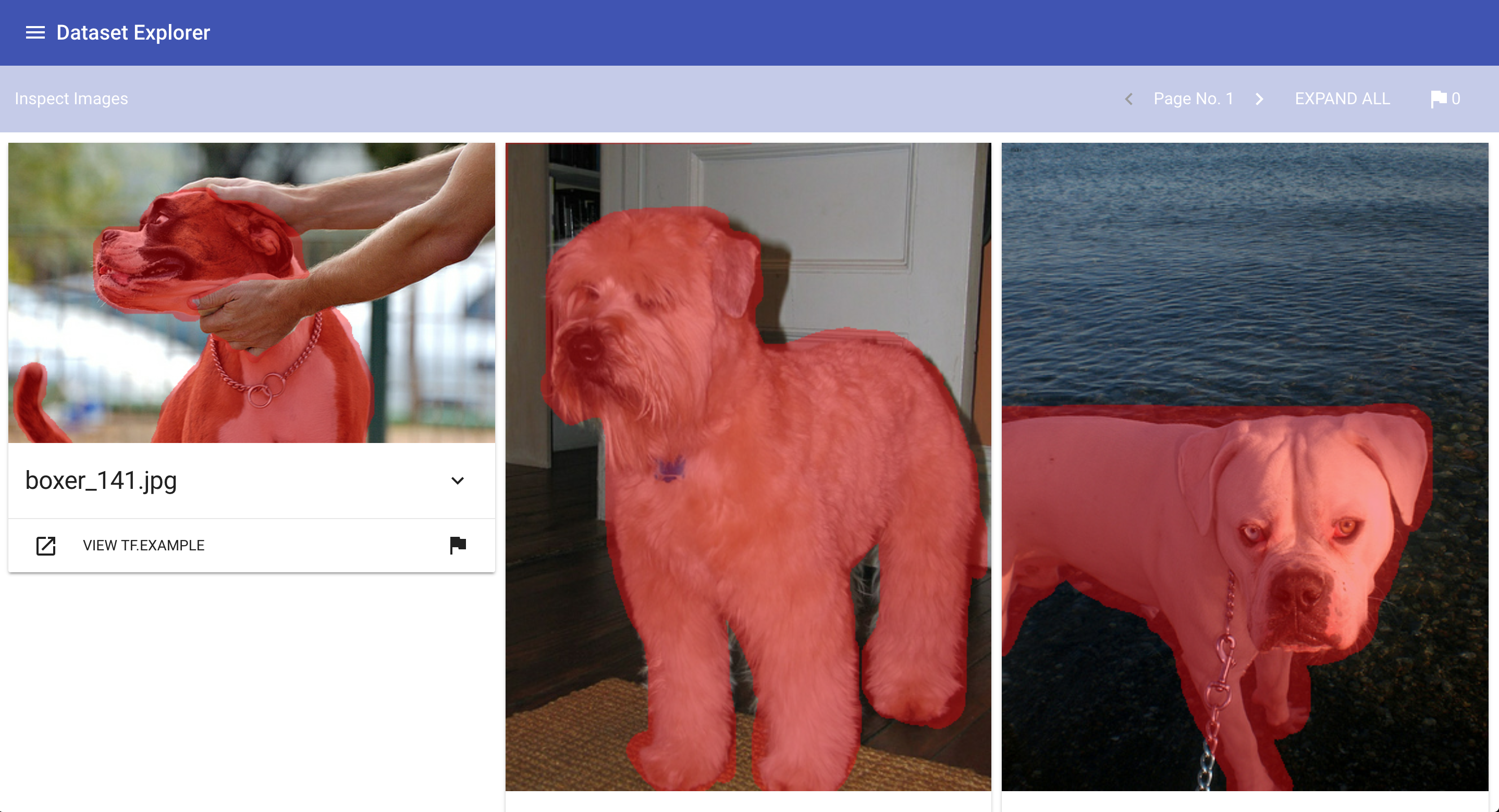

290155278 by rathodv:

Remove Dataset Explorer.

--

290155153 by Zhichao Lu:

Internal change

--

290122054 by Zhichao Lu:

Unify the format in the faster_rcnn.proto

--

290116084 by Zhichao Lu:

Deprecate tensorflow.contrib.

--

290100672 by Zhichao Lu:

Update MobilenetV3 SSD candidates

--

289926392 by Zhichao Lu:

Internal change

--

289553440 by Zhichao Lu:

[Object Detection API] Fix the comments about the dimension of the rpn_box_encodings from 4-D to 3-D.

--

288994128 by lzc:

Internal changes.

--

288942194 by lzc:

Internal change.

--

288746124 by Zhichao Lu:

Configurable channel mean/std. dev in CenterNet feature extractors.

--

288552509 by rathodv:

Internal.

--

288541285 by rathodv:

Internal update.

--

288396396 by Zhichao Lu:

Make object detection import contrib explicitly

--

288255791 by rathodv:

Internal

--

288078600 by Zhichao Lu:

Fix model_lib_v2 test

--

287952244 by rathodv:

Internal

--

287921774 by Zhichao Lu:

internal change

--

287906173 by Zhichao Lu:

internal change

--

287889407 by jonathanhuang:

PY3 compatibility

--

287889042 by rathodv:

Internal

--

287876178 by Zhichao Lu:

Internal change.

--

287770490 by Zhichao Lu:

Add CenterNet proto and builder

--

287694213 by Zhichao Lu:

Support for running multiple steps per tf.function call.

--

287377183 by jonathanhuang:

PY3 compatibility

--

287371344 by rathodv:

Support loading keypoint labels and ids.

--

287368213 by rathodv:

Add protos supporting keypoint evaluation.

--

286673200 by rathodv:

dataset_tools PY3 migration

--

286635106 by Zhichao Lu:

Update code for upcoming tf.contrib removal

--

286479439 by Zhichao Lu:

Internal change

--

286311711 by Zhichao Lu:

Skeleton of context model within TFODAPI

--

286005546 by Zhichao Lu:

Fix Faster-RCNN training when using keep_aspect_ratio_resizer with pad_to_max_dimension

--

285906400 by derekjchow:

Internal change

--

285822795 by Zhichao Lu:

Add CenterNet meta arch target assigners.

--

285447238 by Zhichao Lu:

Internal changes.

--

285016927 by Zhichao Lu:

Make _dummy_computation a tf.function. This fixes breakage caused by

cl/284256438

--

284827274 by Zhichao Lu:

Convert to python 3.

--

284645593 by rathodv:

Internal change

--

284639893 by rathodv:

Add missing documentation for keypoints in eval_util.py.

--

284323712 by Zhichao Lu:

Internal changes.

--

284295290 by Zhichao Lu:

Updating input config proto and dataset builder to include context fields

Updating standard_fields and tf_example_decoder to include context features

--

284226821 by derekjchow:

Update exporter.

--

284211030 by Zhichao Lu:

API changes in CenterNet informed by the experiments with hourglass network.

--

284190451 by Zhichao Lu:

Add support for CenterNet losses in protos and builders.

--

284093961 by lzc:

Internal changes.

--

284028174 by Zhichao Lu:

Internal change

--

284014719 by derekjchow:

Do not pad top_down feature maps unnecessarily.

--

284005765 by Zhichao Lu:

Add new pad_to_multiple_resizer

--

283858233 by Zhichao Lu:

Make target assigner work when under tf.function.

--

283836611 by Zhichao Lu:

Make config getters more general.

--

283808990 by Zhichao Lu:

Internal change

--

283754588 by Zhichao Lu:

Internal changes.

--

282460301 by Zhichao Lu:

Add ability to restore v2 style checkpoints.

--

281605842 by lzc:

Add option to disable loss computation in OD API eval job.

--

280298212 by Zhichao Lu:

Add backwards compatible change

--

280237857 by Zhichao Lu:

internal change

--

PiperOrigin-RevId: 310447280
