Merge branch 'develop' of https://github.com/PaddlePaddle/Paddle into pixel_softmax_layer

Showing

doc/design/graph.md

0 → 100644

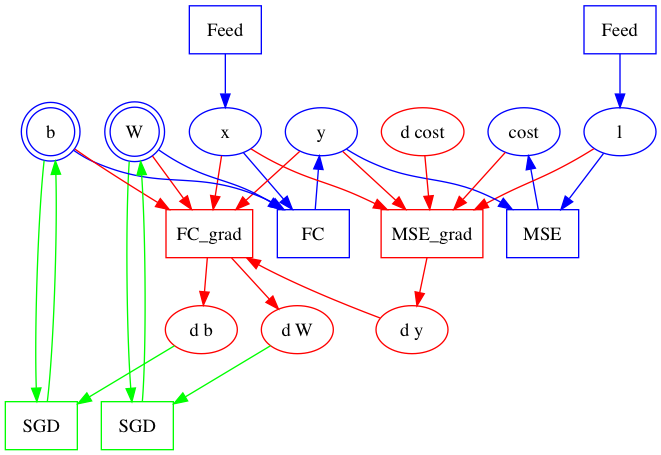

54.1 KB

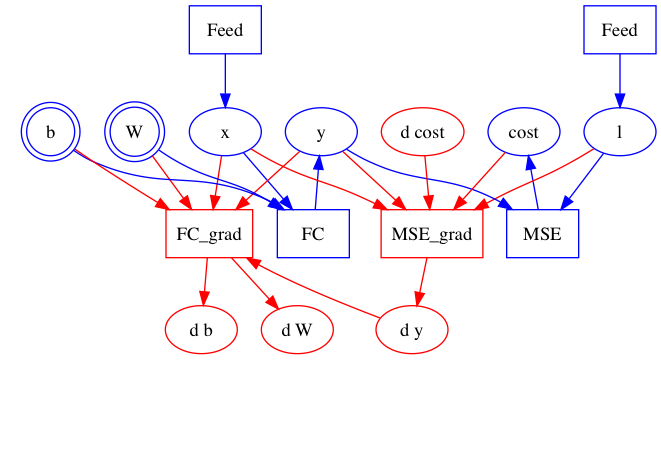

46.1 KB

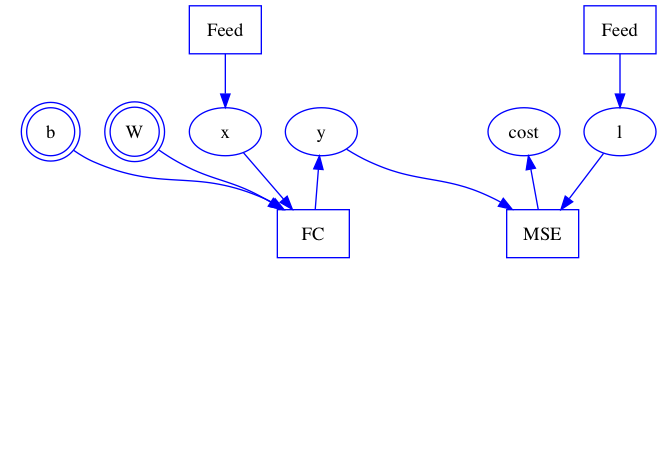

28.5 KB

paddle/platform/cudnn_helper.h

0 → 100644

paddle/platform/macros.h

0 → 100644

文件已移动