Merge remote-tracking branch 'origin/develop' into szhou/hotfix/order-by-tbname

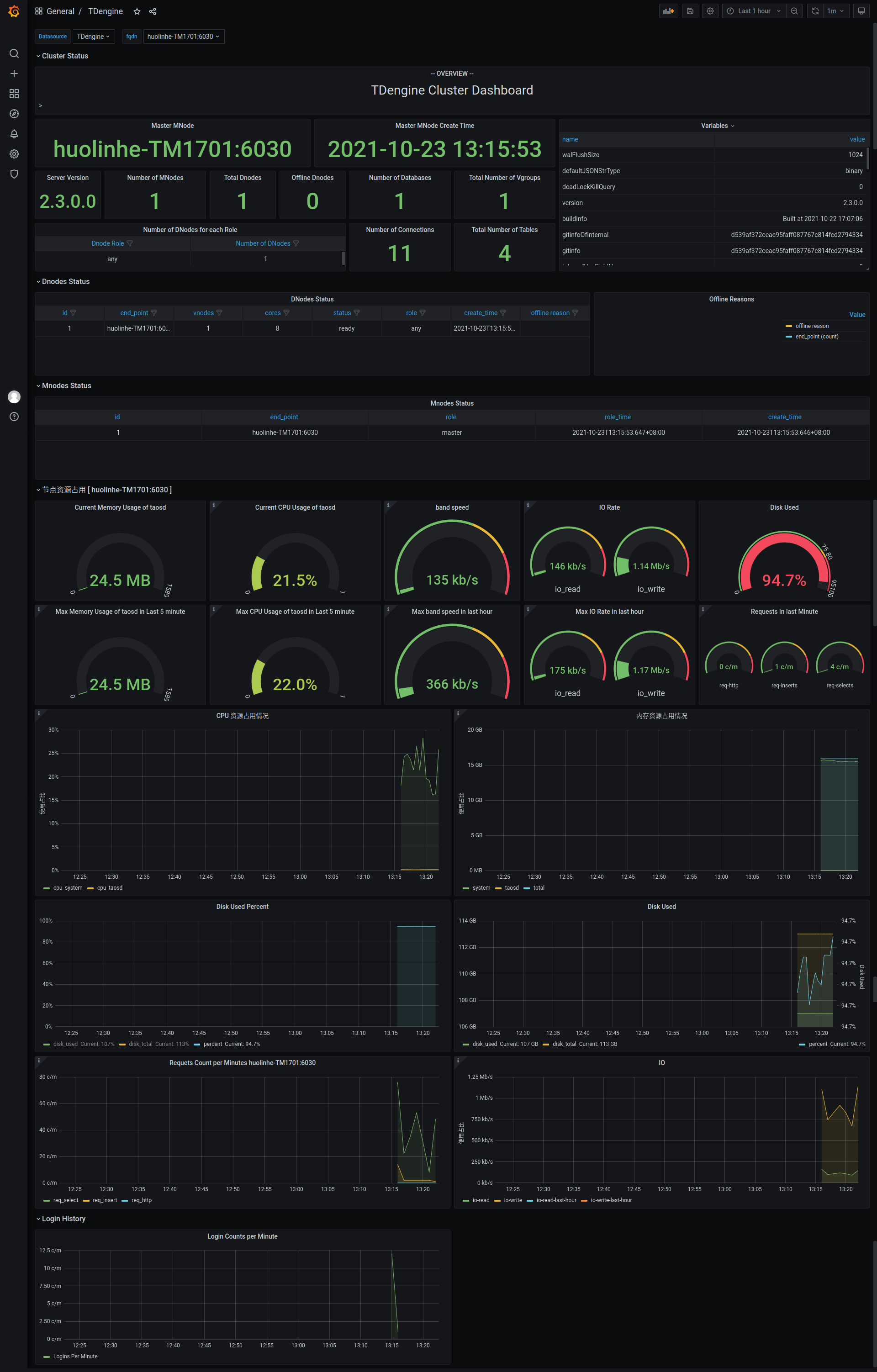

171.2 KB

This diff is collapsed.

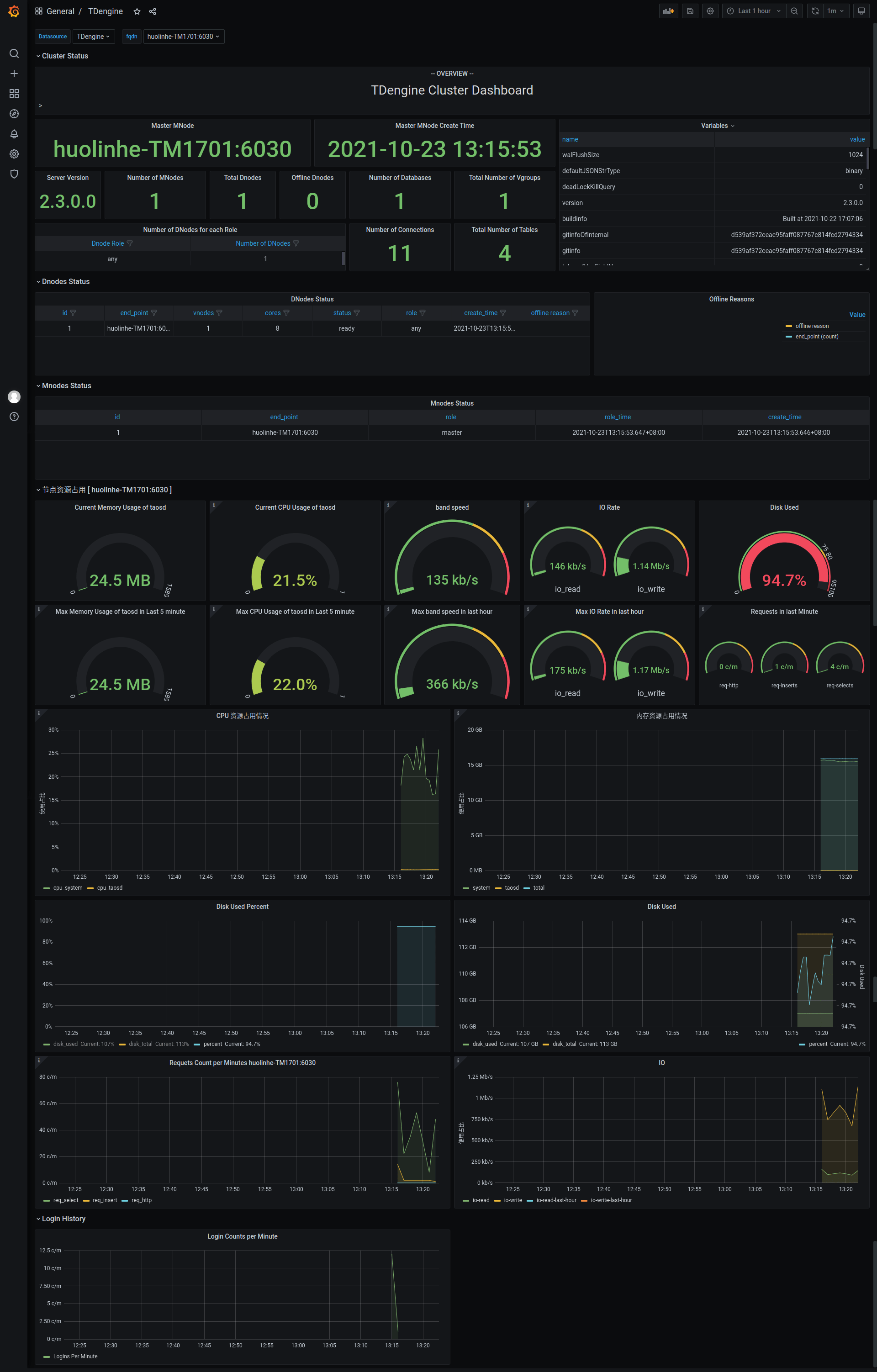

171.2 KB
