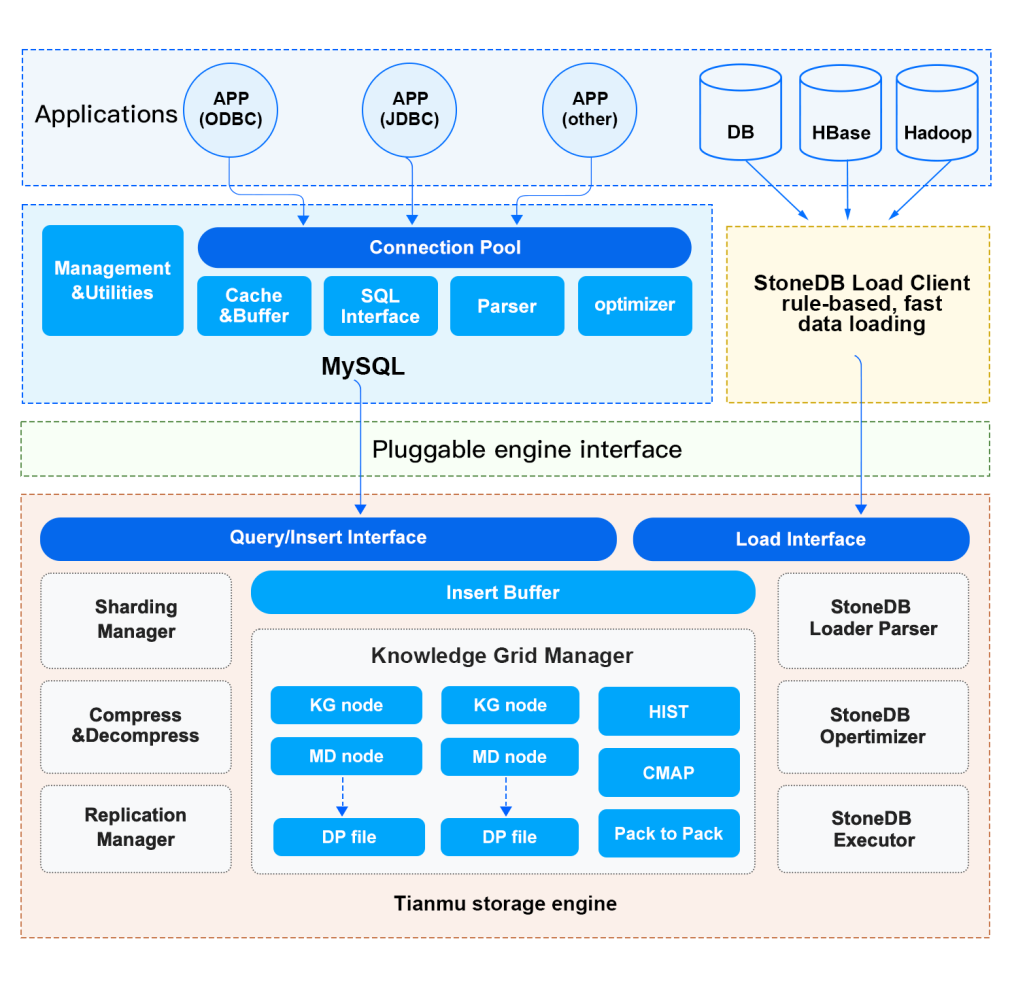

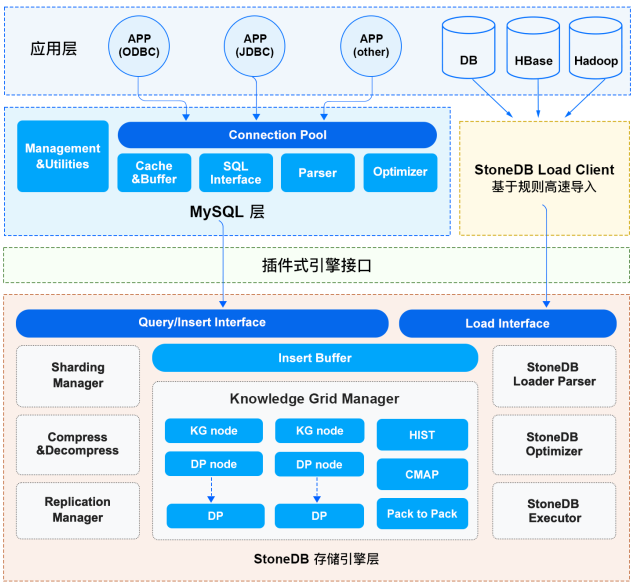

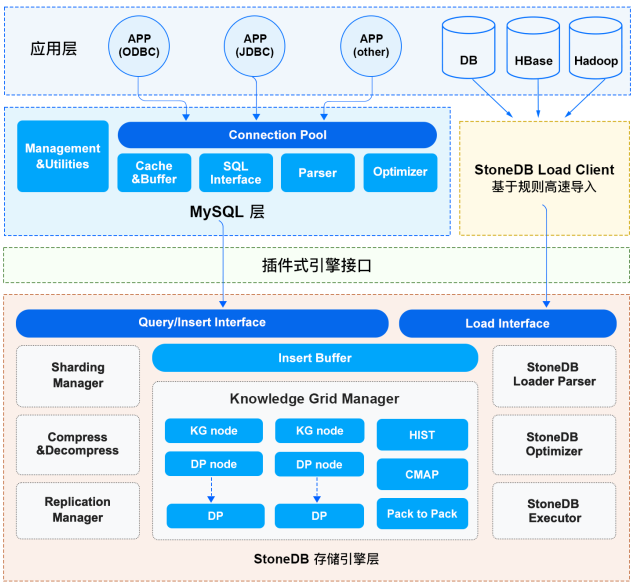

docs(stonedb): update the latest docs(#391) (#392)

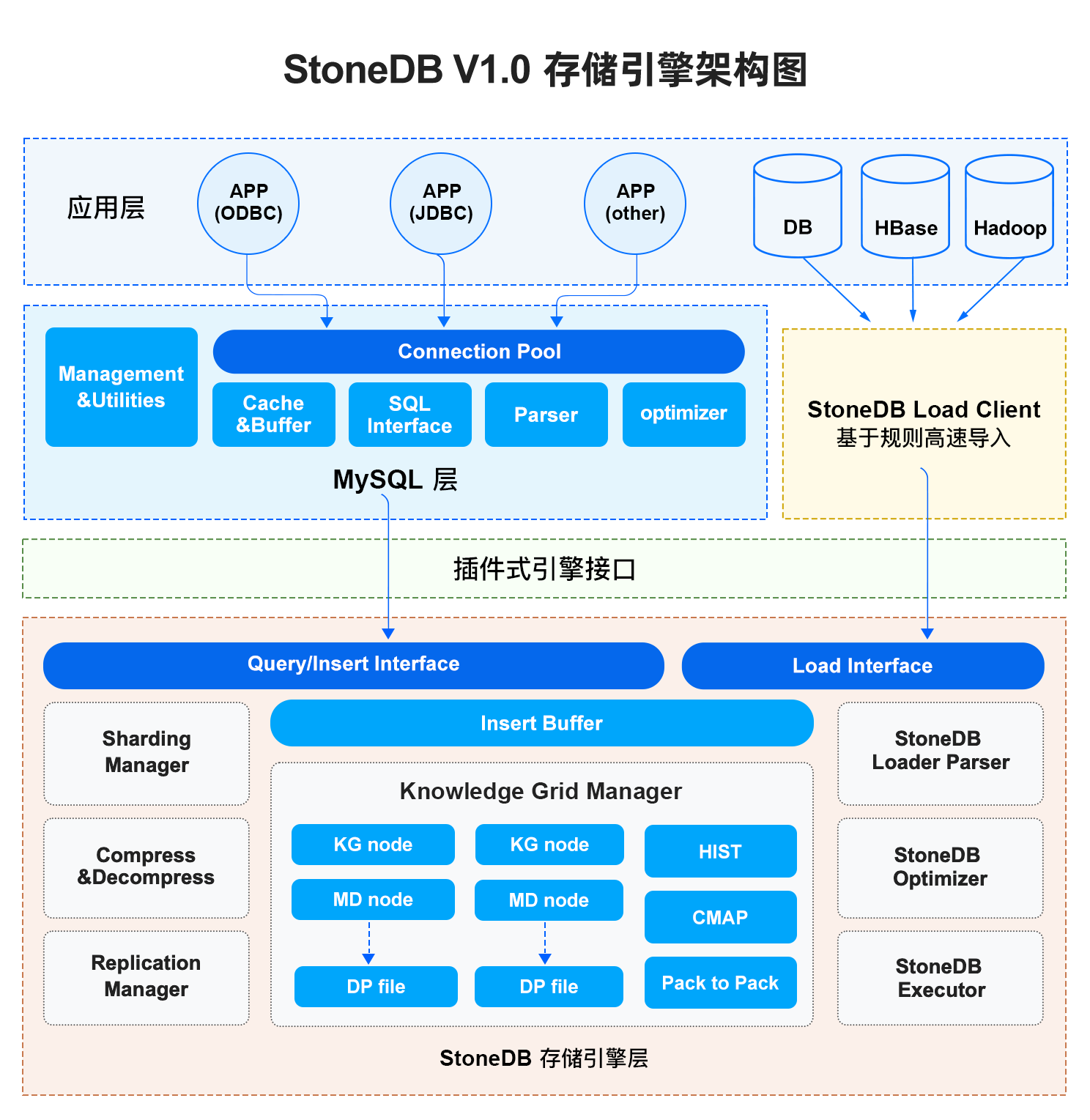

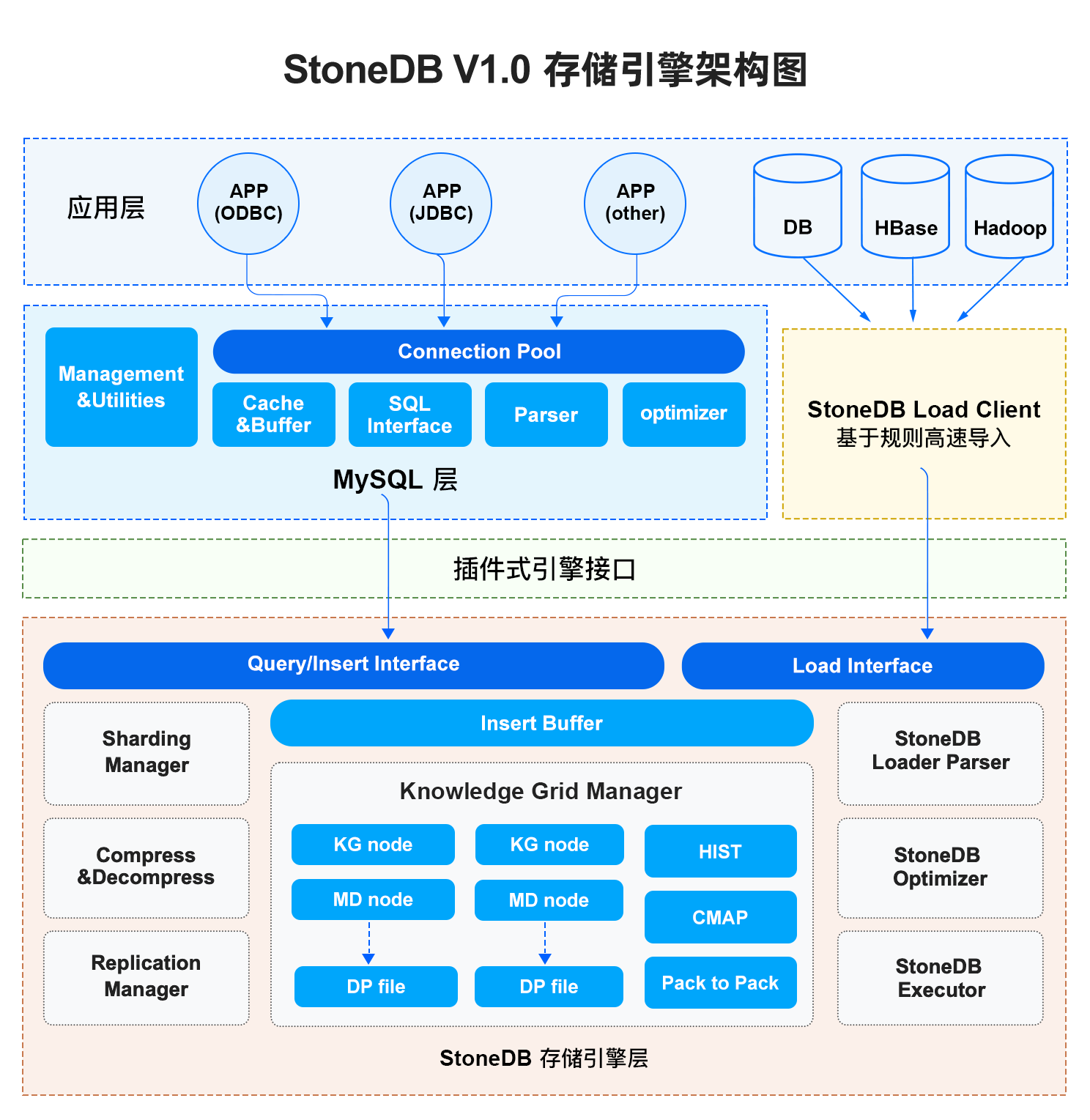

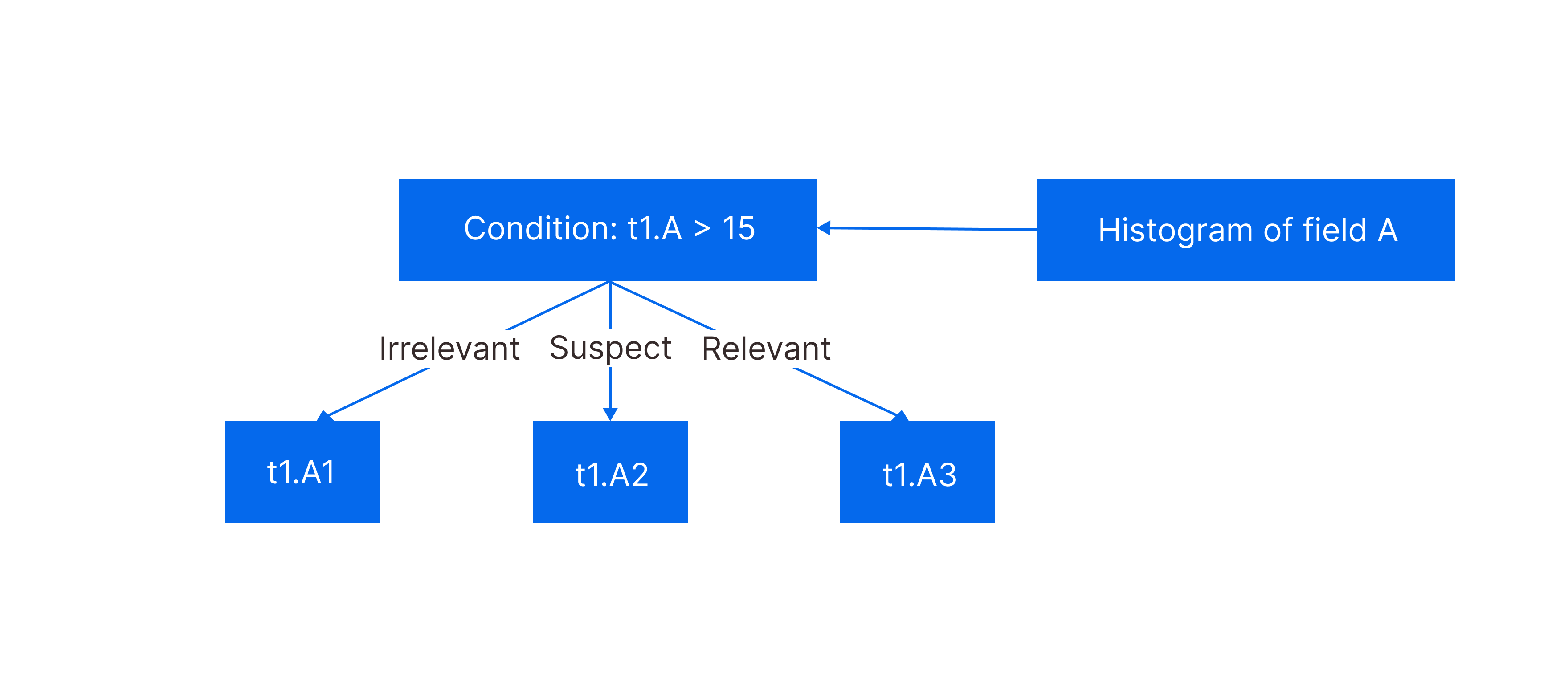

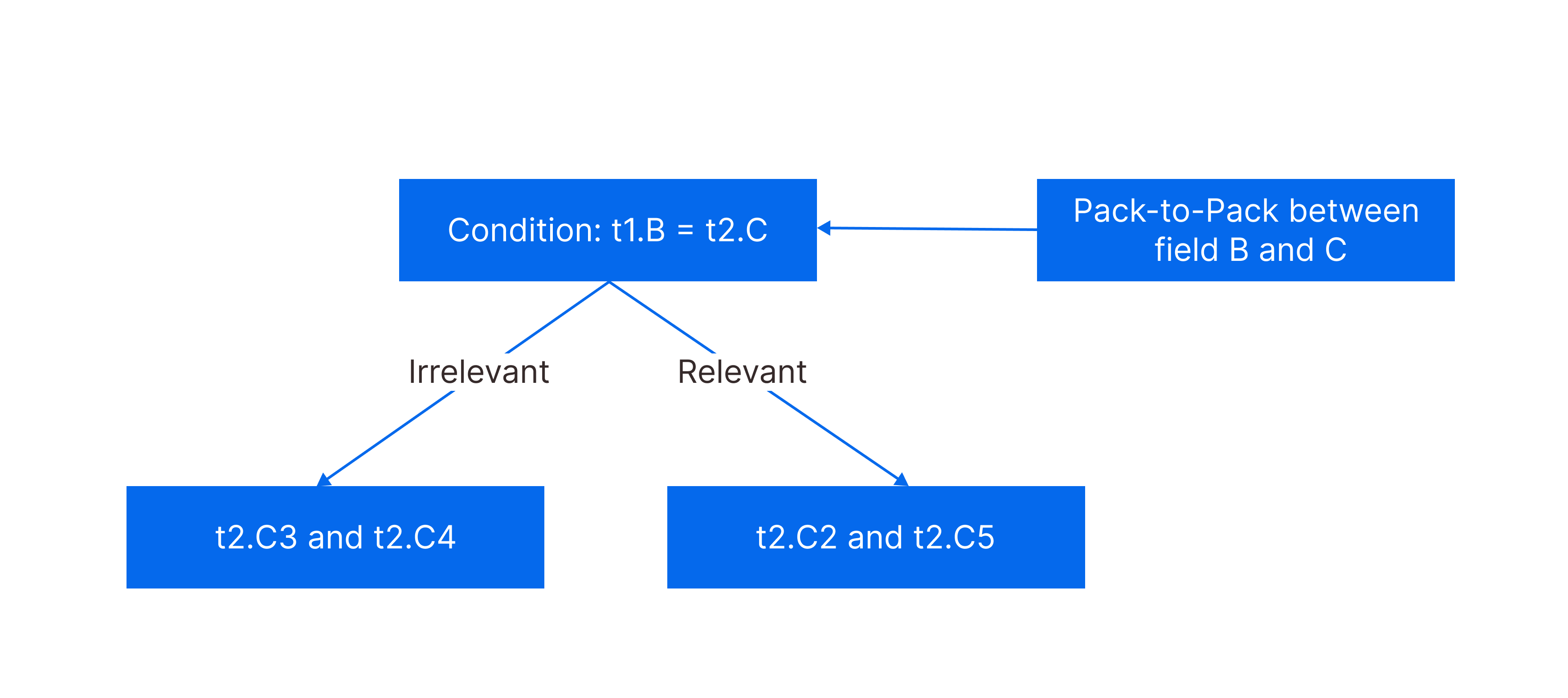

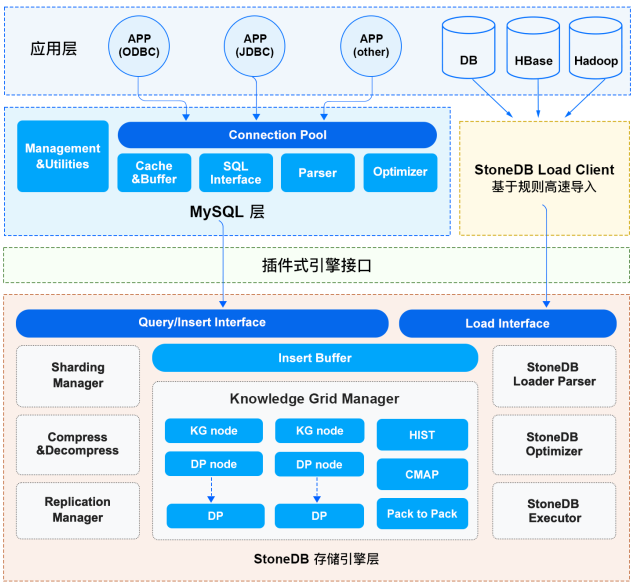

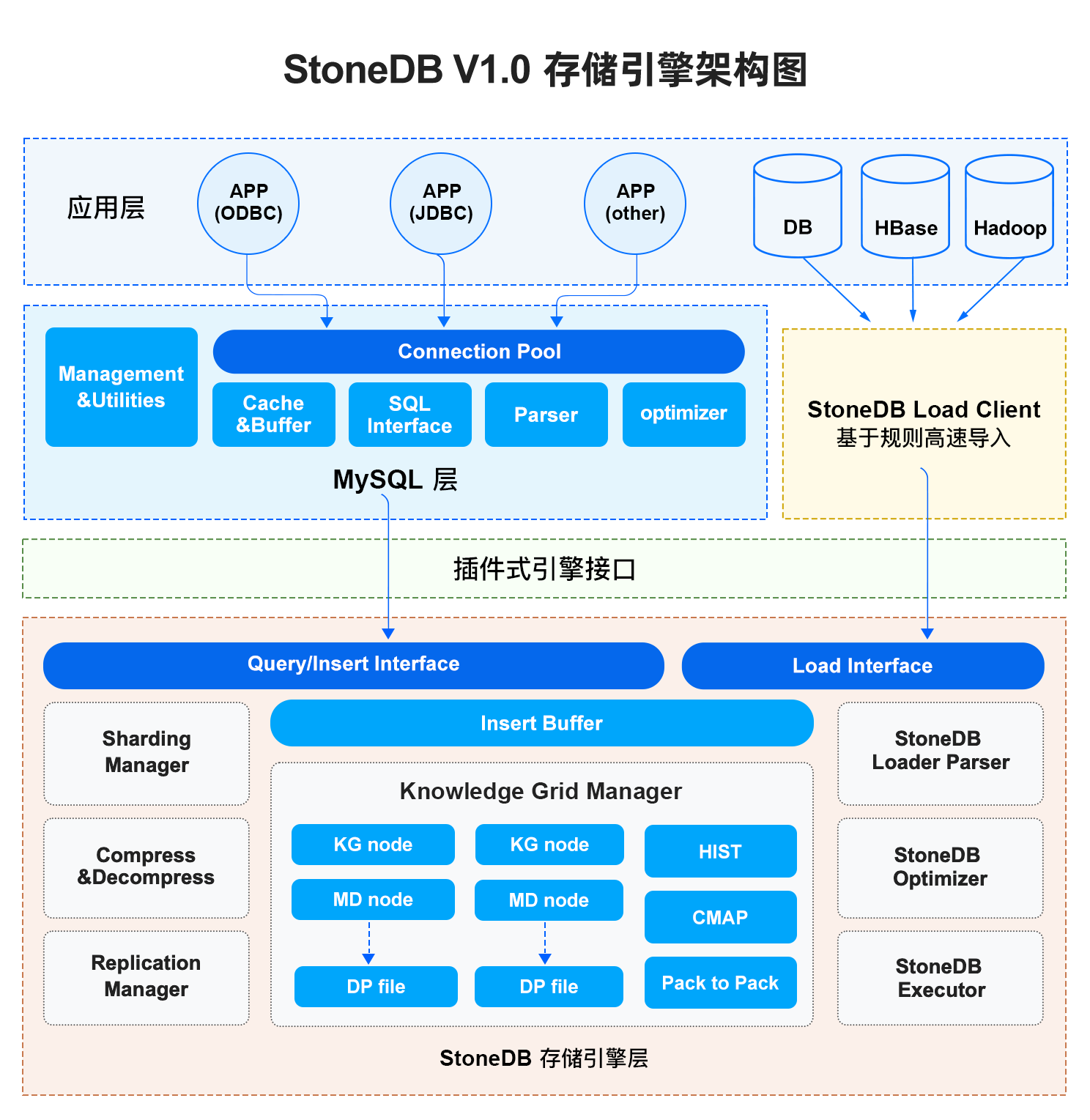

* docs(stonedb): update the latest docs(#391)
* docs(stonedb): update the docs of Architecture and Limits

Showing

67.8 KB

85.4 KB

117.9 KB

10.7 KB

10.7 KB

18.2 KB

This diff is collapsed.
