Merge branch 'dygraph' of https://github.com/PaddlePaddle/PaddleOCR into fix_vqa

doc/doc_ch/FAQ.md

100755 → 100644

This diff is collapsed.

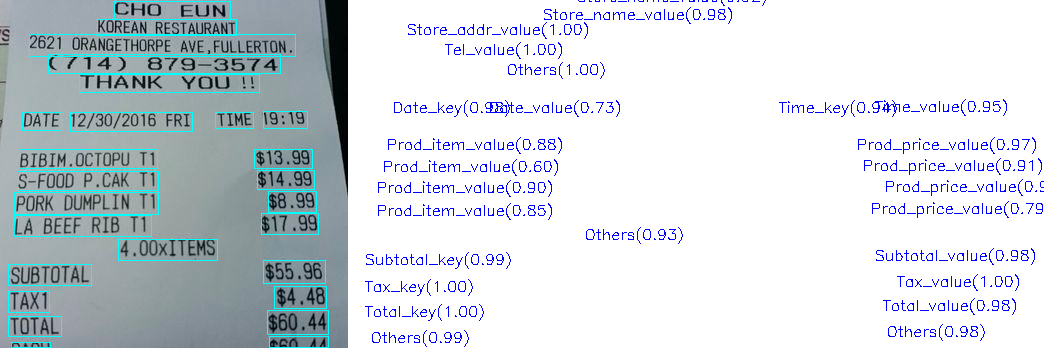

ppstructure/docs/imgs/0.png

0 → 100644

208.3 KB

ppstructure/docs/kie.md

0 → 100644