Support GradCAM (#7626)

* fix slice infer one image save_results (#7654)

* Support GradCAM Cascade_rcnn forward bugfix

* code style fix

* BBoxCAM class name fix

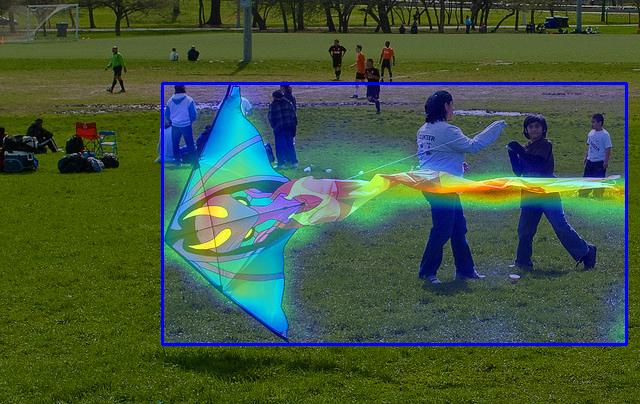

* Add gradcam tutorial and demo

---------

Co-authored-by: Feng Ni <nemonameless@qq.com>

docs/tutorials/GradCAM_cn.md

0 → 100644

docs/tutorials/GradCAM_en.md

0 → 100644

ppdet/utils/cam_utils.py

0 → 100644

tools/cam_ppdet.py

0 → 100644