merge develop

File moved

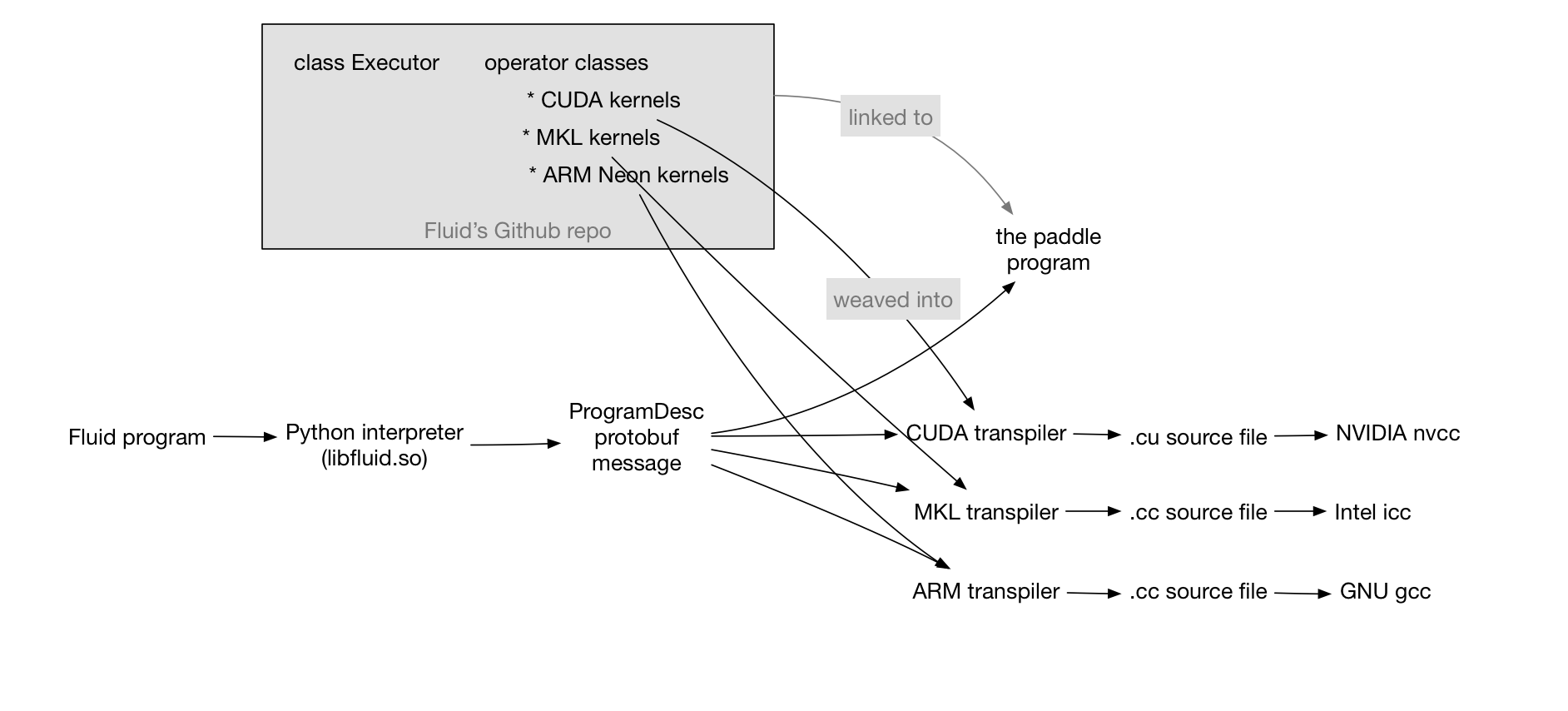

doc/design/fluid-compiler.graffle

0 → 100644

File added

doc/design/fluid-compiler.png

0 → 100644

121.2 KB

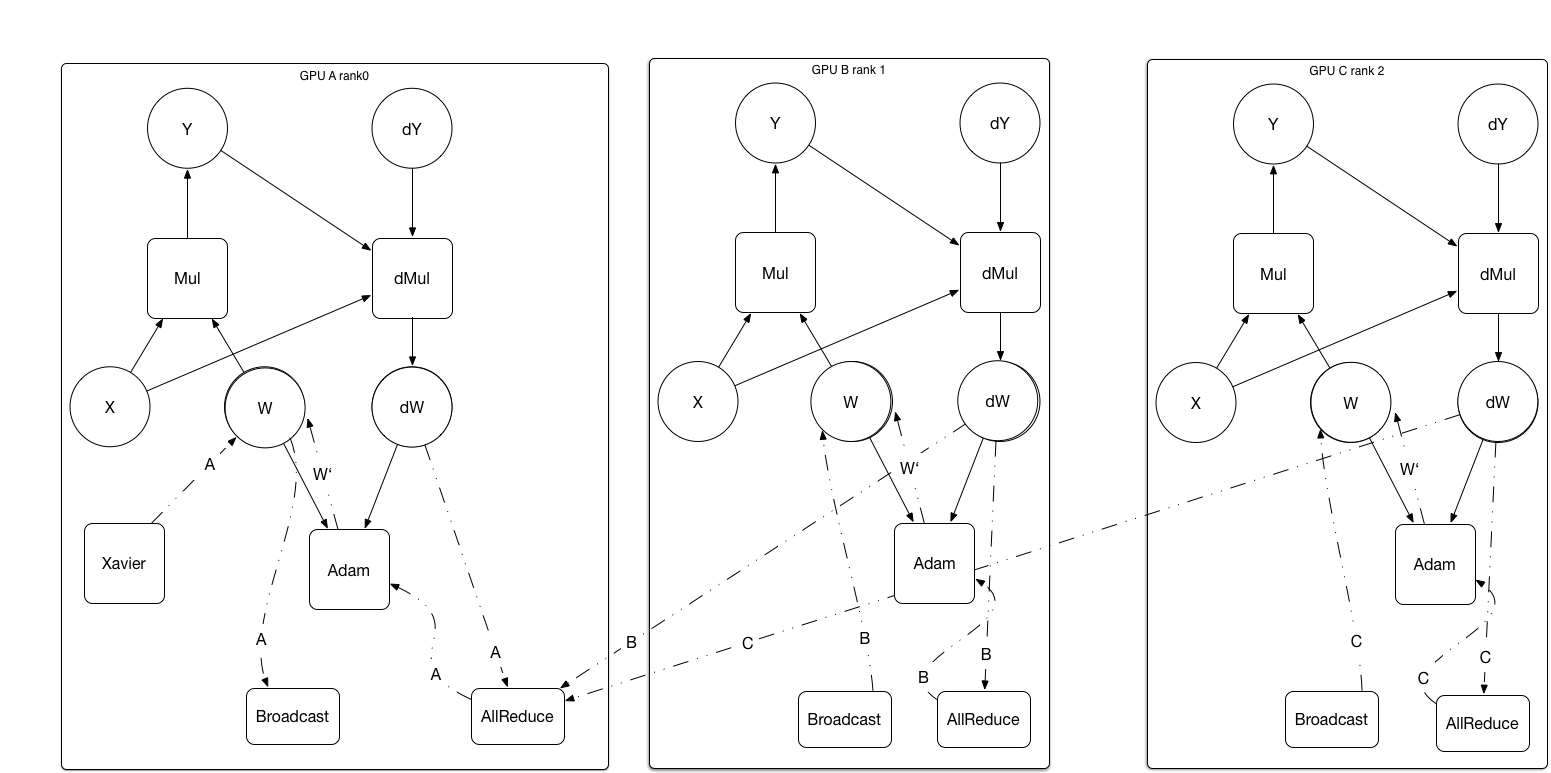

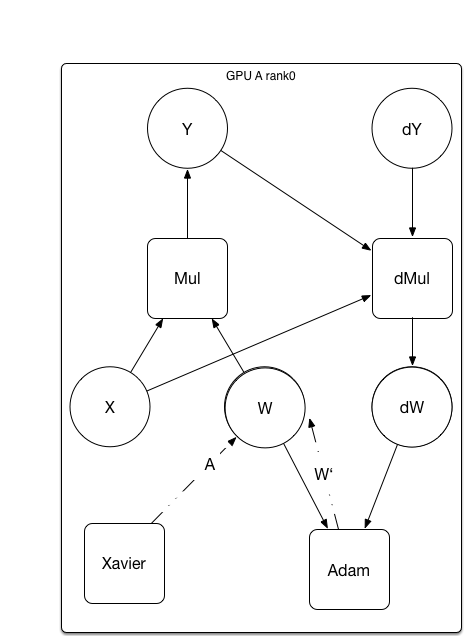

doc/design/fluid.md

0 → 100644

File added

108.4 KB

File added

32.8 KB

doc/design/mkl/mkl_packed.md

0 → 100644

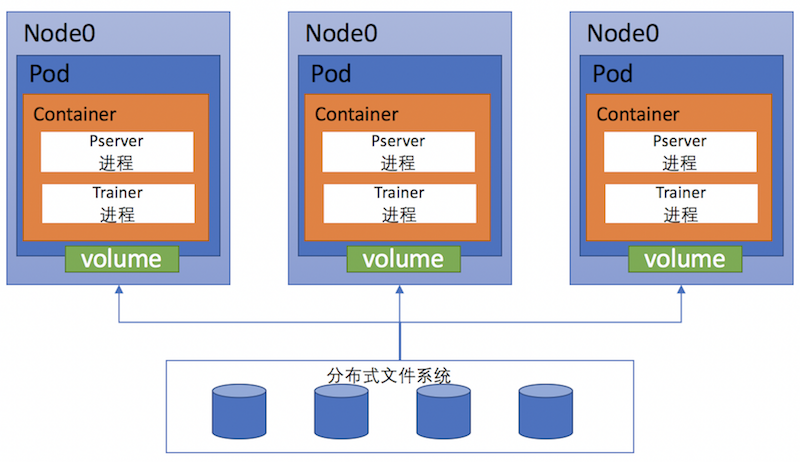

doc/design/paddle_nccl.md

0 → 100644

doc/design/support_new_device.md

0 → 100644

doc/howto/read_source.md

0 → 100644

File moved

File moved

File moved

420.9 KB

File moved

File moved

File moved

File moved

File moved

File moved

File moved

File moved

File moved

File moved

File moved

File moved

501.1 KB

paddle/framework/init.cc

0 → 100644

paddle/framework/init.h

0 → 100644

paddle/framework/init_test.cc

0 → 100644

paddle/operators/fill_op.cc

0 → 100644

paddle/operators/spp_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/spp_op.cu.cc

0 → 100644

This diff is collapsed.

paddle/operators/spp_op.h

0 → 100644

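This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.

This diff is collapsed.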