init v1.0 version

.bazeliskrc

0 → 100644

.bazelrc

0 → 100644

.bazelversion

0 → 100644

.gitignore

0 → 100755

BUILD.bazel

0 → 100644

Dockerfile

0 → 100644

LICENSE

0 → 100644

README.md

0 → 100644

README_CN.md

0 → 100644

WORKSPACE

0 → 100644

bazel/BUILD

0 → 100644

bazel/BUILD.arrow

0 → 100644

bazel/BUILD.eigen

0 → 100755

bazel/BUILD.function2

0 → 100755

bazel/BUILD.hiredis

0 → 100755

bazel/BUILD.nlohmann_json

0 → 100644

bazel/BUILD.relic

0 → 100755

bazel/brotli.BUILD

0 → 100644

bazel/bzip2.BUILD

0 → 100644

bazel/cares.BUILD

0 → 100644

bazel/deps.bzl

0 → 100644

bazel/di.BUILD

0 → 100644

bazel/fmt.BUILD

0 → 100644

bazel/hat_trie.BUILD

0 → 100644

bazel/liborc.BUILD

0 → 100644

bazel/libp2p.BUILD

0 → 100644

bazel/lz4.BUILD

0 → 100644

bazel/prim.bzl

0 → 100644

bazel/rapidjson.BUILD

0 → 100644

bazel/repos.bzl

0 → 100644

bazel/snappy.BUILD

0 → 100644

bazel/soralog.BUILD

0 → 100644

bazel/sqlite.BUILD

0 → 100644

bazel/thrift.BUILD

0 → 100644

bazel/xsimd.BUILD

0 → 100644

bazel/xz.BUILD

0 → 100644

bazel/zlib.BUILD

0 → 100644

bazel/zstd.BUILD

0 → 100644

config/node0.yaml

0 → 100644

config/node1.yaml

0 → 100644

config/node2.yaml

0 → 100644

config/primihub_node0.yaml

0 → 100644

config/primihub_node1.yaml

0 → 100644

config/primihub_node2.yaml

0 → 100644

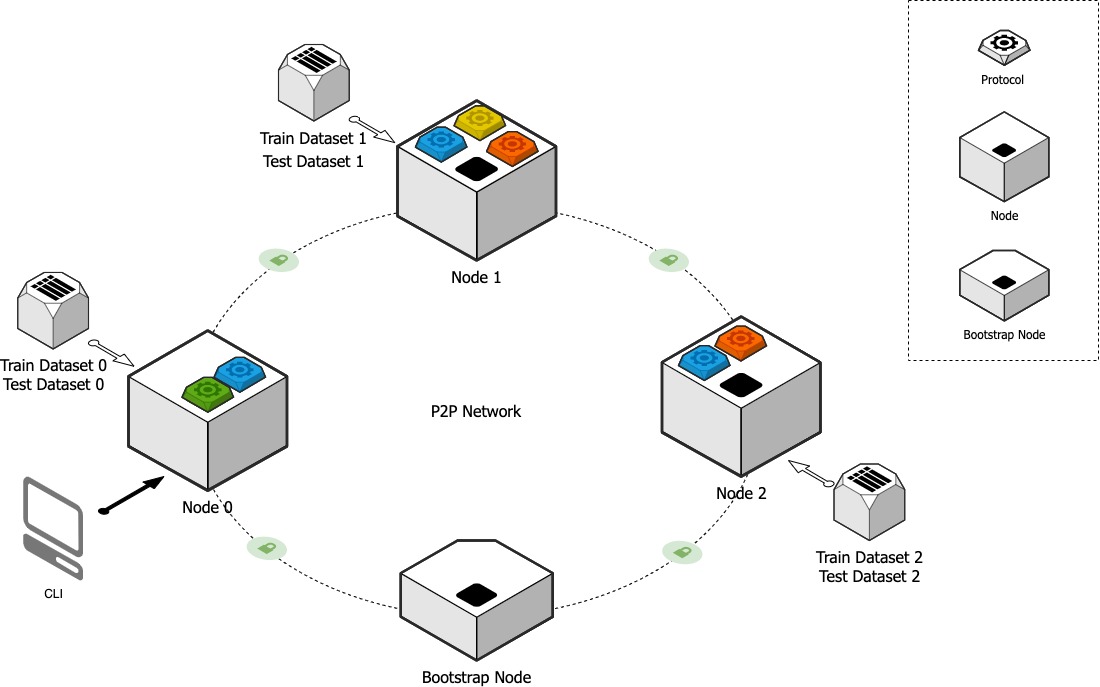

doc/tutorial-depolyment.jpg

0 → 100644

81.2 KB

docker-compose-dev.yml

0 → 100644

docker-compose.yml

0 → 100644

external/cryptoTools.BUILD

0 → 100755

external/libOTe.BUILD

0 → 100755

pre_build.sh

0 → 100755

python/README.md

0 → 100644

python/primihub/FL/__init__.py

0 → 100644

python/primihub/Pipfile

0 → 100644

python/primihub/__init__.py

0 → 100644

python/primihub/context.py

0 → 100644

python/primihub/executor.py

0 → 100644

python/requirements.txt

0 → 100644

python/setup.py

0 → 100644

src/primihub/algorithm/aby3ML.cc

0 → 100755

src/primihub/algorithm/aby3ML.h

0 → 100755

src/primihub/algorithm/base.h

0 → 100644

src/primihub/algorithm/logistic.h

0 → 100755

src/primihub/algorithm/plainML.cc

0 → 100755

src/primihub/algorithm/plainML.h

0 → 100755

src/primihub/algorithm/readme.md

0 → 100644

src/primihub/cli/cli.cc

0 → 100755

src/primihub/cli/cli.h

0 → 100755

src/primihub/common/clp.cc

0 → 100755

src/primihub/common/clp.h

0 → 100755

src/primihub/common/defines.cc

0 → 100755

src/primihub/common/defines.h

0 → 100755

src/primihub/common/finally.h

0 → 100755

src/primihub/common/gsl/gsl

0 → 100755

src/primihub/common/gsl/gsl_byte

0 → 100755

src/primihub/common/gsl/gsl_util

0 → 100755

src/primihub/common/gsl/span

0 → 100755

src/primihub/common/type/matrix.h

0 → 100755

src/primihub/common/type/type.cc

0 → 100755

src/primihub/common/type/type.h

0 → 100755

src/primihub/data_store/dataset.h

0 → 100644

src/primihub/data_store/driver.cc

0 → 100755

src/primihub/data_store/driver.h

0 → 100755

src/primihub/data_store/factory.h

0 → 100644

src/primihub/node/node.cc

0 → 100755

src/primihub/node/node.h

0 → 100755

src/primihub/node/nodelet.cc

0 → 100644

src/primihub/node/nodelet.h

0 → 100644

src/primihub/node/worker/worker.h

0 → 100755

src/primihub/p2p/node_stub.cc

0 → 100644

src/primihub/p2p/node_stub.h

0 → 100644

src/primihub/primitive/ot/LICENSE

0 → 100644

src/primihub/protocol/LICENSE

0 → 100644

src/primihub/protocol/runtime.cc

0 → 100644

src/primihub/protocol/runtime.h

0 → 100644

src/primihub/protocol/scheduler.h

0 → 100644

src/primihub/protocol/sh_task.cc

0 → 100644

src/primihub/protocol/sh_task.h

0 → 100644

src/primihub/protocol/task.cc

0 → 100644

src/primihub/protocol/task.h

0 → 100644

src/primihub/protos/common.proto

0 → 100755

src/primihub/protos/pir.proto

0 → 100644

src/primihub/protos/psi.proto

0 → 100644

src/primihub/protos/worker.proto

0 → 100755

src/primihub/service/error.cpp

0 → 100644

src/primihub/service/error.hpp

0 → 100644

src/primihub/service/outcome.hpp

0 → 100644

src/primihub/task/semantic/task.h

0 → 100644

src/primihub/util/crypto/Blake2.h

0 → 100755

src/primihub/util/crypto/LICENSE

0 → 100644

src/primihub/util/crypto/block.cc

0 → 100755

src/primihub/util/crypto/block.h

0 → 100755

src/primihub/util/crypto/prng.cc

0 → 100755

src/primihub/util/crypto/prng.h

0 → 100755

src/primihub/util/eigen_util.cc

0 → 100644

src/primihub/util/eigen_util.h

0 → 100644

src/primihub/util/file_util.cc

0 → 100644

src/primihub/util/file_util.h

0 → 100644

src/primihub/util/json.hpp

0 → 100755

src/primihub/util/log.cc

0 → 100755

src/primihub/util/log.h

0 → 100755

src/primihub/util/model_util.cc

0 → 100644

src/primihub/util/model_util.h

0 → 100644

src/primihub/util/timer.cc

0 → 100755

src/primihub/util/timer.h

0 → 100755

src/primihub/util/util.cc

0 → 100755

src/primihub/util/util.h

0 → 100755

test/primihub/script/csv_utils.py

0 → 100644

test/primihub/test_util.h

0 → 100644

test/primihub/util/util_test.h

0 → 100755

thirdparty/linux/eigen.get

0 → 100755

thirdparty/linux/function2.get

0 → 100755

thirdparty/linux/json.get

0 → 100755

thirdparty/miracl/mr_src/mraes.c

0 → 100644

thirdparty/miracl/mr_src/mrbits.c

0 → 100644

thirdparty/miracl/mr_src/mrcore.c

0 → 100644

thirdparty/miracl/mr_src/mrcrt.c

0 → 100644

thirdparty/miracl/mr_src/mrec2m.c

0 → 100644

thirdparty/miracl/mr_src/mrecn2.c

0 → 100644

thirdparty/miracl/mr_src/mrfast.c

0 → 100644

thirdparty/miracl/mr_src/mrfpe.c

0 → 100644

thirdparty/miracl/mr_src/mrfrnd.c

0 → 100644

thirdparty/miracl/mr_src/mrgcd.c

0 → 100644

thirdparty/miracl/mr_src/mrgcm.c

0 → 100644

thirdparty/miracl/mr_src/mrgf2m.c

0 → 100644

thirdparty/miracl/mr_src/mrio1.c

0 → 100644

thirdparty/miracl/mr_src/mrio2.c

0 → 100644

thirdparty/miracl/mr_src/mrjack.c

0 → 100644

thirdparty/miracl/mr_src/mrpi.c

0 → 100644

thirdparty/miracl/mr_src/mrrand.c

0 → 100644

thirdparty/miracl/mr_src/mrscrt.c

0 → 100644

thirdparty/miracl/mr_src/mrsha3.c

0 → 100644

thirdparty/miracl/mr_src/mrshs.c

0 → 100644

thirdparty/miracl/mr_src/mrxgcd.c

0 → 100644

thirdparty/miracl/mr_src/mrzzn2.c

0 → 100644

thirdparty/miracl/mr_src/mrzzn3.c

0 → 100644

thirdparty/miracl/mr_src/mrzzn4.c

0 → 100644
