API update of video model (#4591)

* API update of video model
* update api and refine bmn dygraph implementation
* refine Readme and config of bmn dygraph
* fix readme and reader
* fix details

Showing

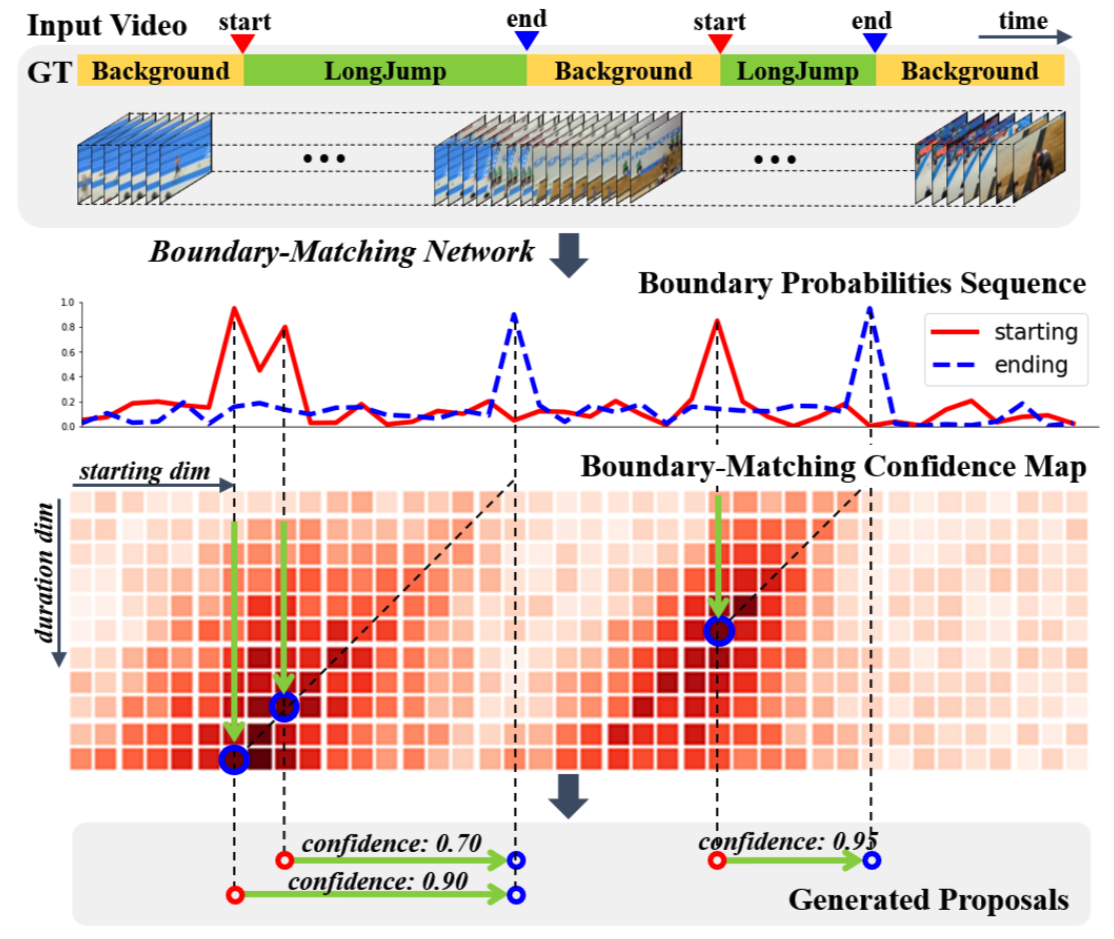

dygraph/bmn/BMN.png (new file, mode 0 → 100644, 520.3 KB)