Add inference benchmark for PaddleDetection. (#3437)

* Add inference benchmark
* Adjust order
* Update some descriptions


demo/000000014439_640x640.jpg (new file, mode 100644, 267.0 KB)

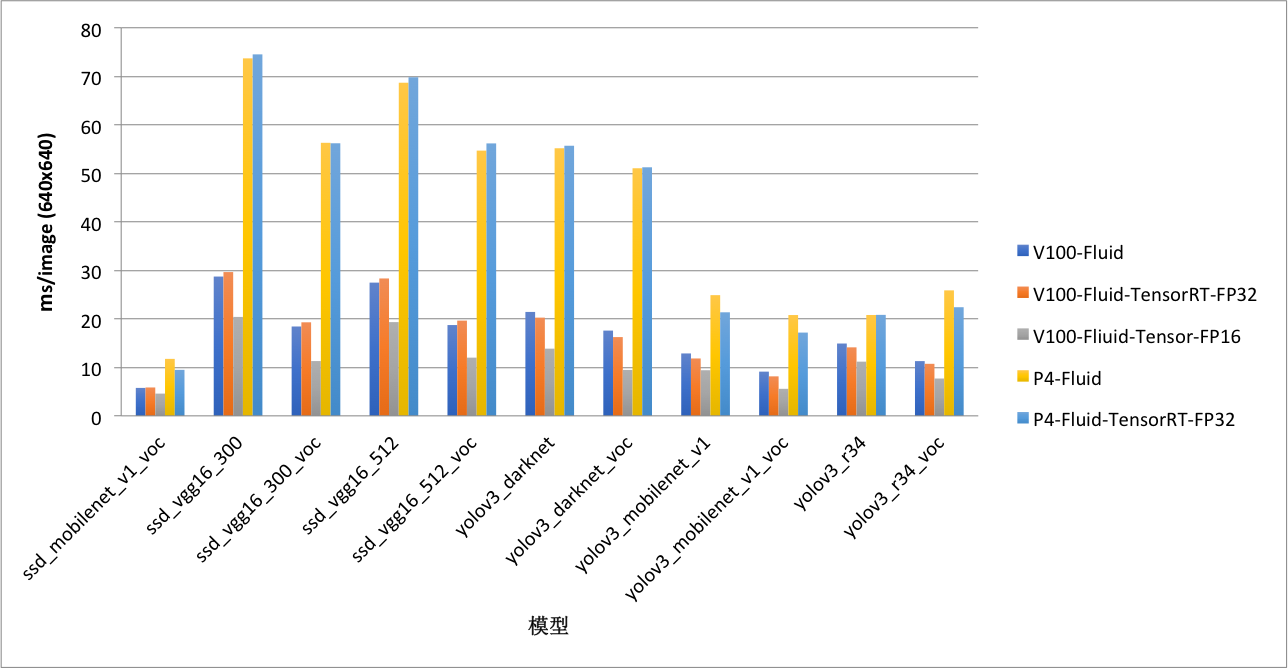

docs/BENCHMARK_INFER_cn.md (new file, mode 100644, 149.3 KB)