Remove dev

Showing

dev/doc/.buildinfo

Deleted

100644 → 0

49.7 KB

53.0 KB

57.7 KB

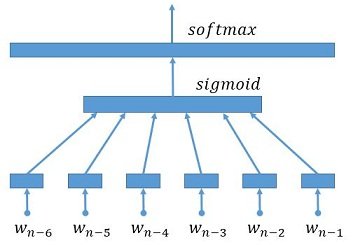

dev/doc/_images/NetLR_en.png

Deleted

100644 → 0

48.3 KB

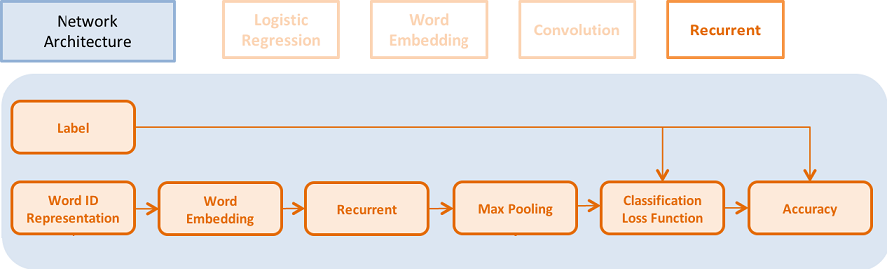

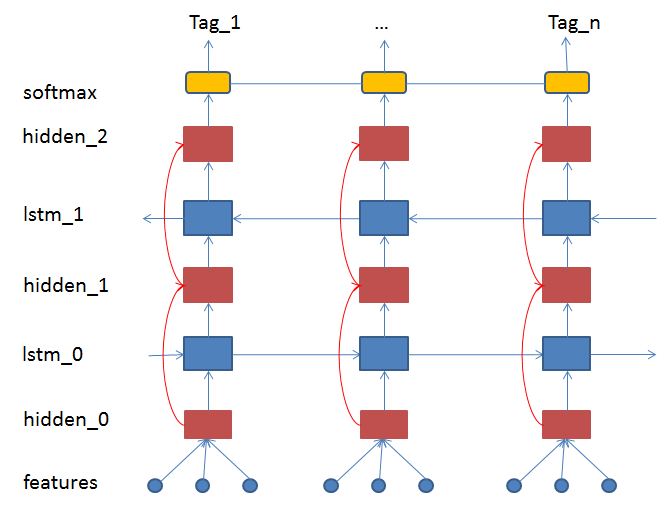

dev/doc/_images/NetRNN_en.png

Deleted

100644 → 0

55.8 KB

7.3 KB

8.5 KB

8.6 KB

11.4 KB

116.2 KB

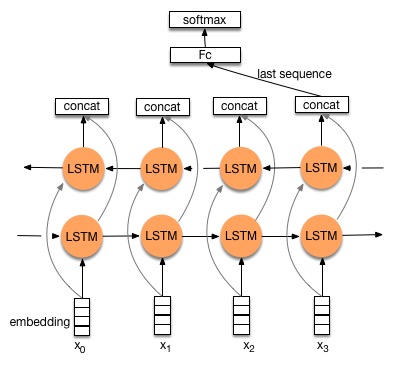

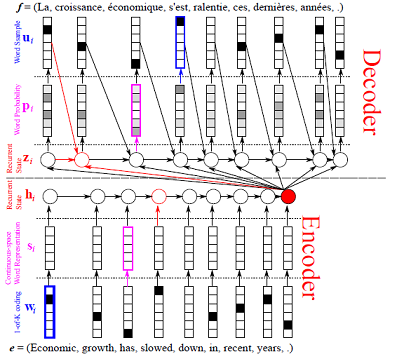

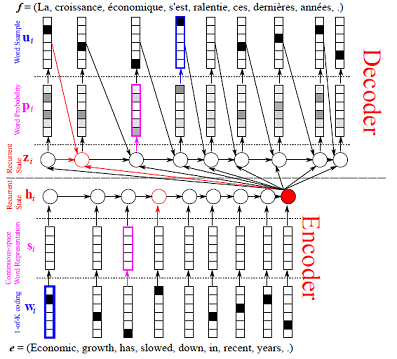

dev/doc/_images/bi_lstm.jpg

Deleted

100644 → 0

34.8 KB

dev/doc/_images/bi_lstm1.jpg

Deleted

100644 → 0

34.8 KB

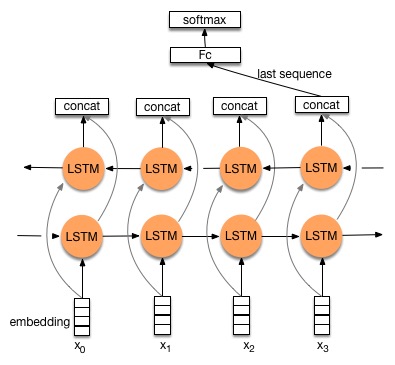

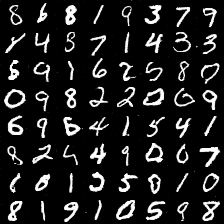

dev/doc/_images/cifar.png

Deleted

100644 → 0

455.6 KB

236.1 KB

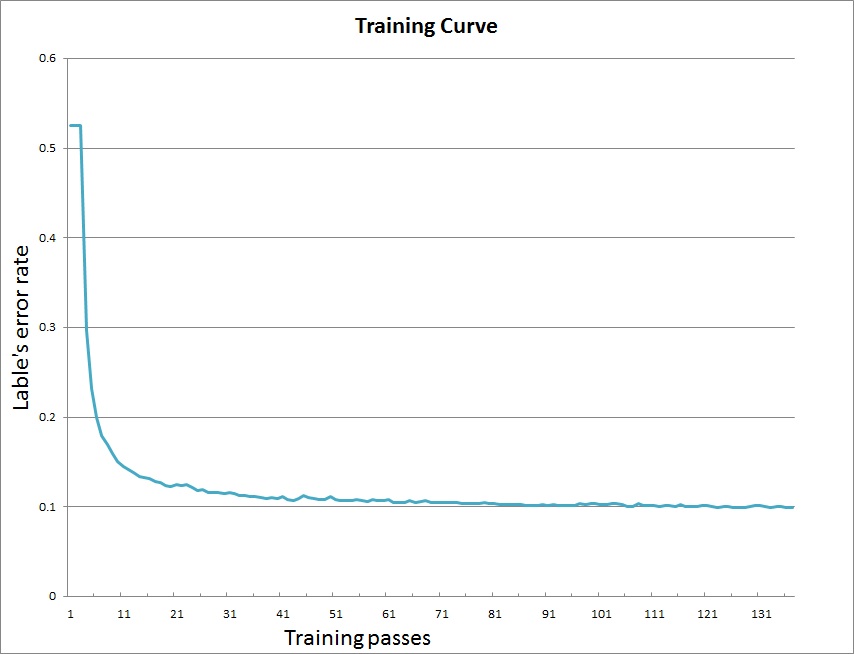

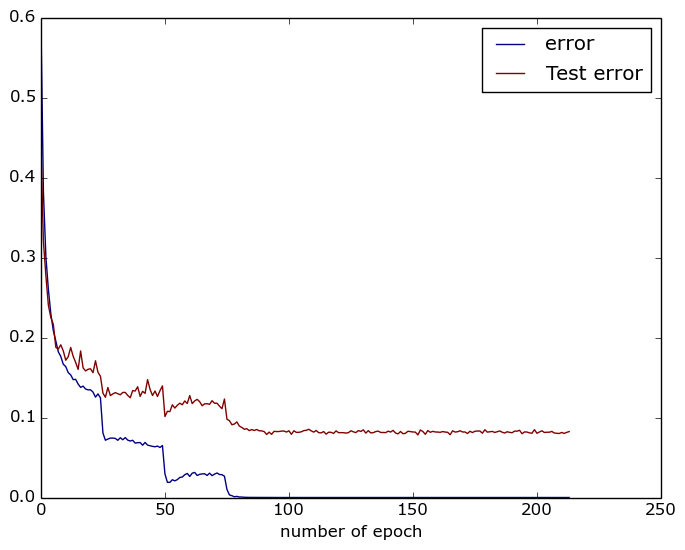

dev/doc/_images/curve.jpg

Deleted

100644 → 0

52.0 KB

66.5 KB

66.5 KB

dev/doc/_images/feature.jpg

Deleted

100644 → 0

30.5 KB

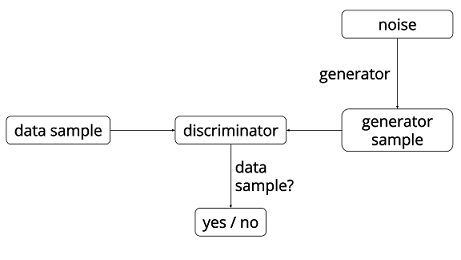

dev/doc/_images/gan.png

Deleted

100644 → 0

17.4 KB

51.4 KB

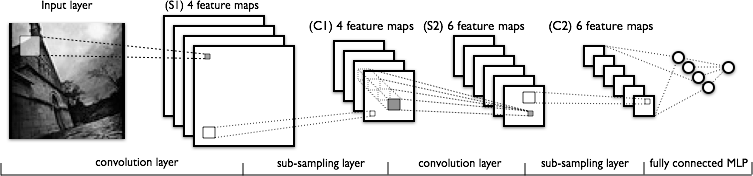

dev/doc/_images/lenet.png

Deleted

100644 → 0

48.7 KB

dev/doc/_images/lstm.png

Deleted

100644 → 0

49.5 KB

28.0 KB

27.2 KB

66.9 KB

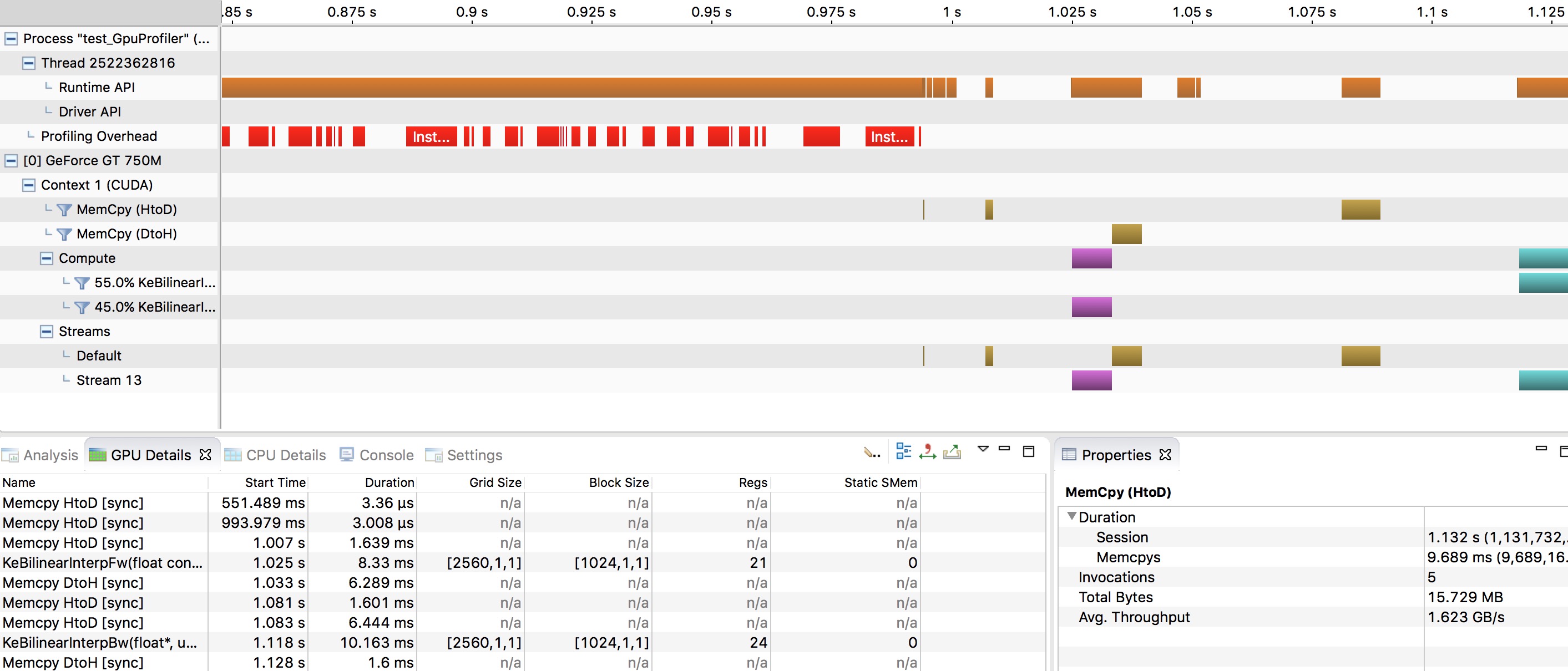

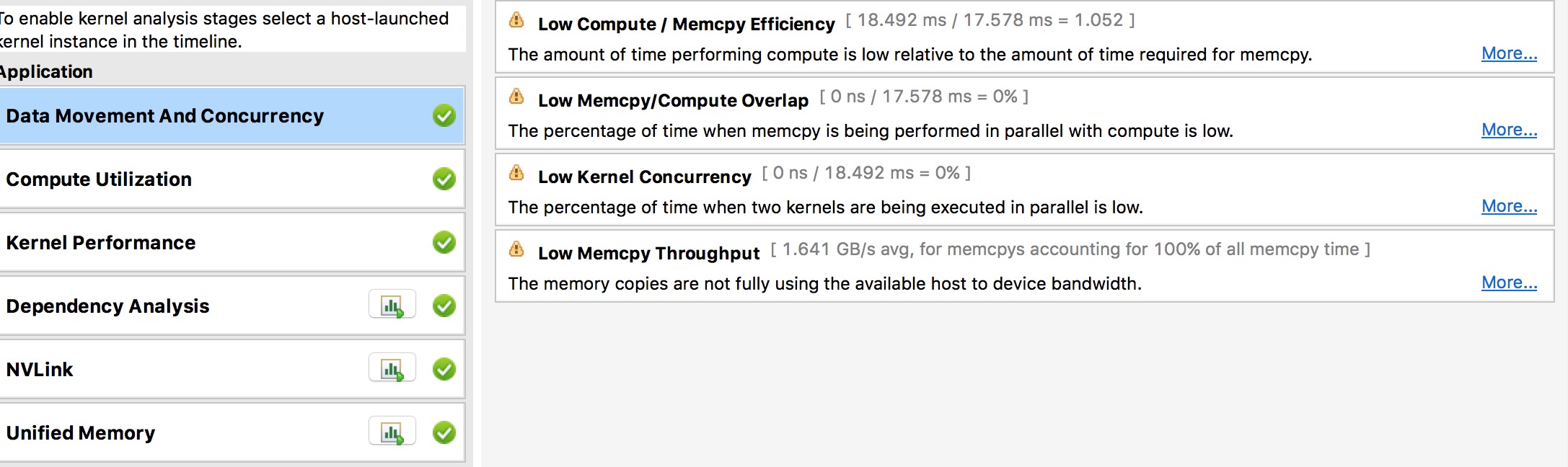

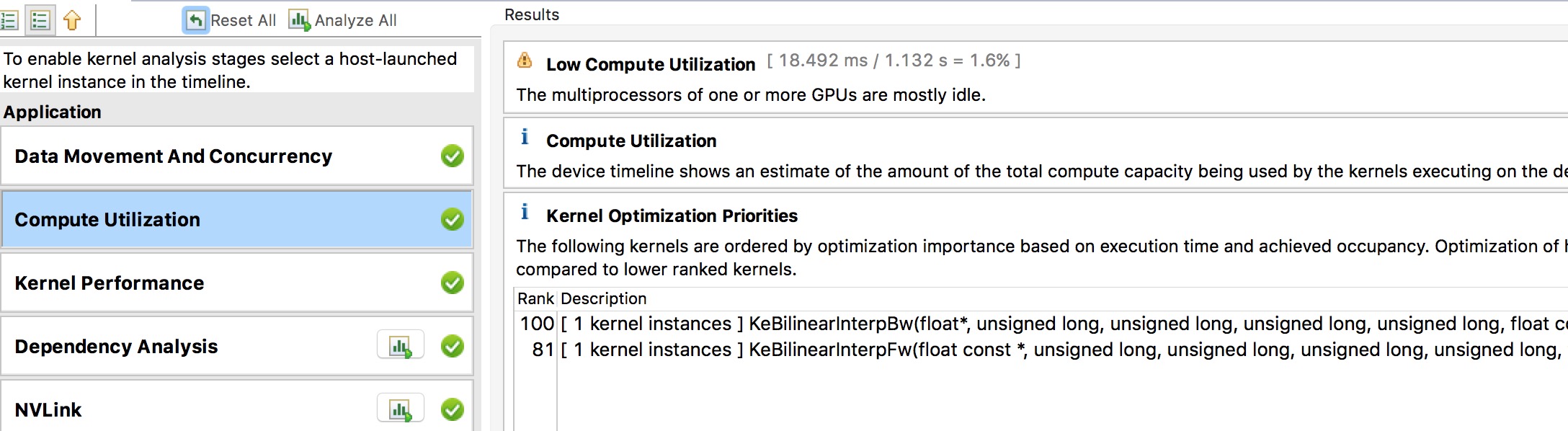

dev/doc/_images/nvvp1.png

Deleted

100644 → 0

416.1 KB

dev/doc/_images/nvvp2.png

Deleted

100644 → 0

483.5 KB

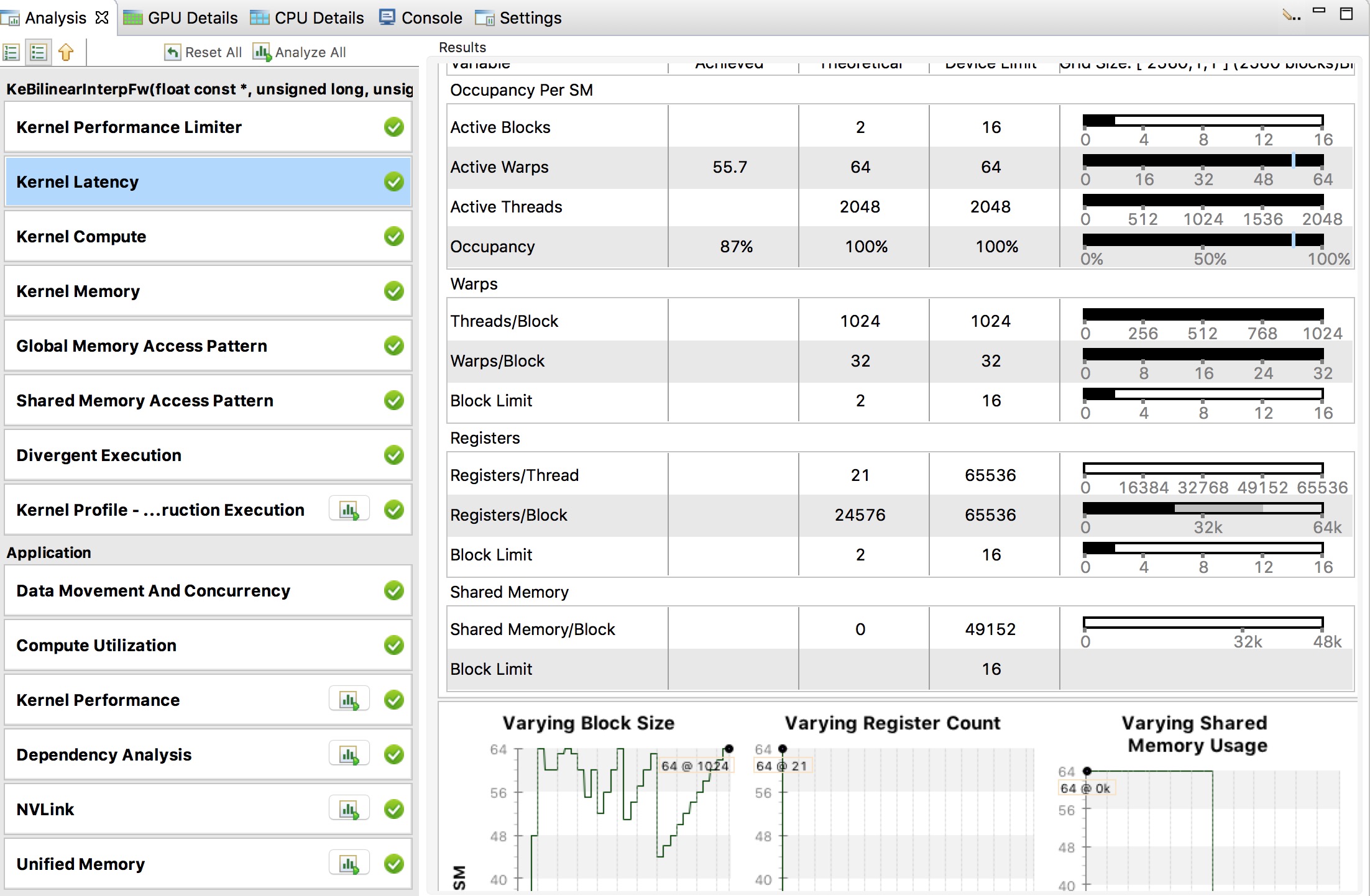

dev/doc/_images/nvvp3.png

Deleted

100644 → 0

247.8 KB

dev/doc/_images/nvvp4.png

Deleted

100644 → 0

276.6 KB

43.4 KB

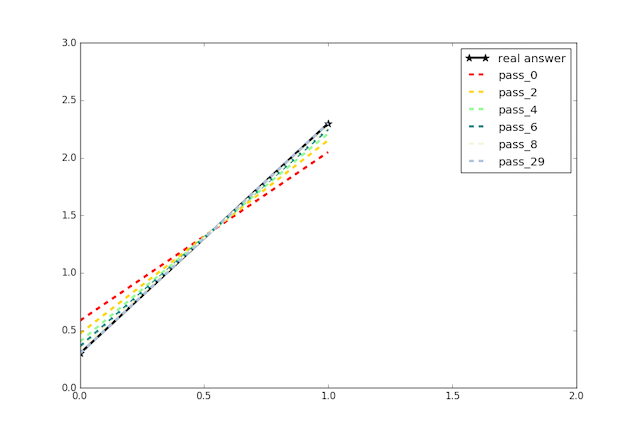

dev/doc/_images/plot.png

Deleted

100644 → 0

30.3 KB

70.0 KB

81.2 KB

21.9 KB

34.9 KB

50.8 KB

30.3 KB

24.3 KB

87.1 KB

673 bytes

dev/doc/_static/basic.css

Deleted

100644 → 0

This diff is collapsed.

756 bytes

829 bytes

dev/doc/_static/comment.png

Deleted

100644 → 0

641 bytes

This diff is collapsed.

dev/doc/_static/css/theme.css

Deleted

100644 → 0

This diff is collapsed.

dev/doc/_static/doctools.js

Deleted

100644 → 0

This diff is collapsed.

222 bytes

dev/doc/_static/down.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc/_static/file.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc/_static/jquery.js

Deleted

100644 → 0

This diff is collapsed.

dev/doc/_static/js/theme.js

Deleted

100644 → 0

This diff is collapsed.

dev/doc/_static/minus.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc/_static/plus.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc/_static/pygments.css

Deleted

100644 → 0

This diff is collapsed.

dev/doc/_static/underscore.js

Deleted

100644 → 0

This diff is collapsed.

dev/doc/_static/up.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc/_static/websupport.js

Deleted

100644 → 0

This diff is collapsed.

dev/doc/about/index_en.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc/api/index_en.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc/api/v1/index_en.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc/design/api.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc/genindex.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc/howto/index_en.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc/index.html

Deleted

120000 → 0

This diff is collapsed.

dev/doc/index_en.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc/objects.inv

Deleted

100644 → 0

This diff is collapsed.

dev/doc/py-modindex.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc/search.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc/searchindex.js

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/.buildinfo

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_images/cifar.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_images/curve.jpg

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_images/lenet.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_images/lstm.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_images/nvvp1.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_images/nvvp2.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_images/nvvp3.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_images/nvvp4.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_images/plot.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_static/basic.css

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_static/down.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_static/file.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_static/jquery.js

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_static/minus.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_static/plus.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/_static/up.png

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/api/index_cn.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/design/api.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/faq/index_cn.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/genindex.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/index.html

Deleted

120000 → 0

This diff is collapsed.

dev/doc_cn/index_cn.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/objects.inv

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/py-modindex.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/search.html

Deleted

100644 → 0

This diff is collapsed.

dev/doc_cn/searchindex.js

Deleted

100644 → 0

This diff is collapsed.
