Merge branch 'develop' of upstream into margin_rank_loss_op_dev

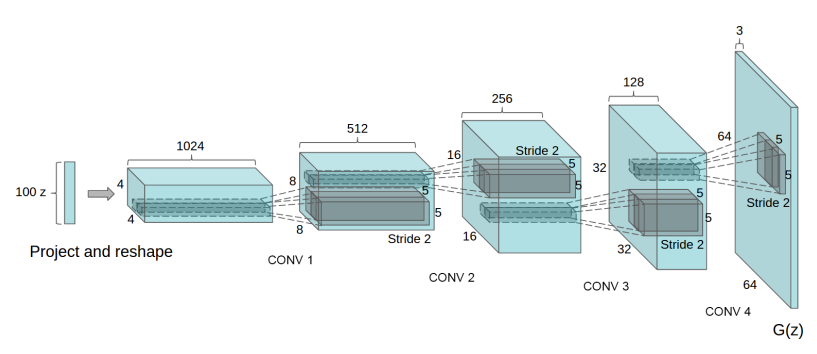

doc/design/dcgan.png

0 → 100644

56.6 KB

doc/design/gan_api.md

0 → 100644

doc/design/optimizer.md

0 → 100644

doc/design/python_api.md

0 → 100644

doc/design/refactor/session.md

0 → 100644

doc/design/register_grad_op.md

0 → 100644

doc/design/selected_rows.md

0 → 100644

doc/design/test.dot

0 → 100644

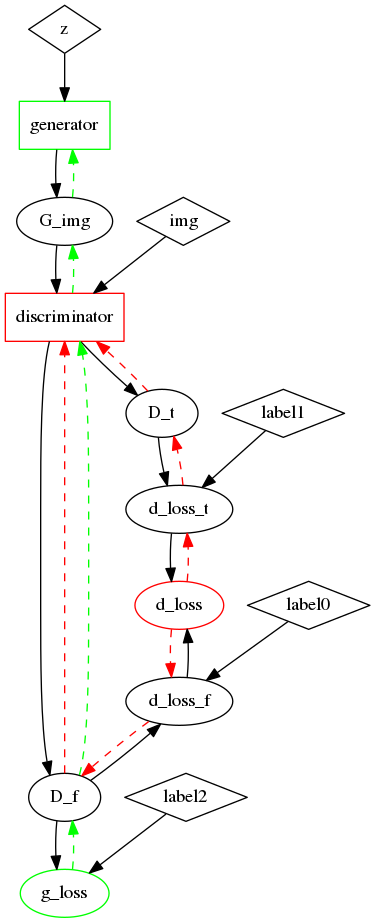

doc/design/test.dot.png

0 → 100644

57.6 KB

paddle/framework/data_type.h

0 → 100644

paddle/framework/executor.cc

0 → 100644

paddle/framework/executor.h

0 → 100644

paddle/framework/executor_test.cc

0 → 100644

paddle/framework/tensor_array.cc

0 → 100644

This diff is collapsed.

paddle/framework/tensor_array.h

0 → 100644

This diff is collapsed.

paddle/framework/type_defs.h

0 → 100644

This diff is collapsed.

paddle/operators/adadelta_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/adadelta_op.cu

0 → 100644

This diff is collapsed.

paddle/operators/adadelta_op.h

0 → 100644

This diff is collapsed.

paddle/operators/adagrad_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/adagrad_op.cu

0 → 100644

This diff is collapsed.

paddle/operators/adagrad_op.h

0 → 100644

This diff is collapsed.

paddle/operators/adamax_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/adamax_op.h

0 → 100644

This diff is collapsed.

paddle/operators/conv_shift_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/conv_shift_op.cu

0 → 100644

This diff is collapsed.

paddle/operators/conv_shift_op.h

0 → 100644

This diff is collapsed.

paddle/operators/feed_op.h

0 → 100644

This diff is collapsed.

paddle/operators/fetch_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/fetch_op.cu

0 → 100644

This diff is collapsed.

paddle/operators/fetch_op.h

0 → 100644

This diff is collapsed.

paddle/operators/gather.cu.h

0 → 100644

This diff is collapsed.

paddle/operators/gather_op.cu

0 → 100644

This diff is collapsed.

paddle/operators/interp_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/math/pooling.cc

0 → 100644

This diff is collapsed.

paddle/operators/math/pooling.cu

0 → 100644

This diff is collapsed.

paddle/operators/math/pooling.h

0 → 100644

This diff is collapsed.

paddle/operators/math/vol2col.cc

0 → 100644

This diff is collapsed.

paddle/operators/math/vol2col.cu

0 → 100644

This diff is collapsed.

paddle/operators/math/vol2col.h

0 → 100644

This diff is collapsed.

paddle/operators/pool_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/pool_op.cu

0 → 100644

This diff is collapsed.

paddle/operators/pool_op.h

0 → 100644

This diff is collapsed.

paddle/operators/reduce_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/reduce_op.cu

0 → 100644

This diff is collapsed.

paddle/operators/reduce_op.h

0 → 100644

This diff is collapsed.

paddle/operators/rmsprop_op.cc

0 → 100644

This diff is collapsed.

paddle/operators/rmsprop_op.cu

0 → 100644

This diff is collapsed.

paddle/operators/scatter.cu.h

0 → 100644

This diff is collapsed.

paddle/operators/scatter_op.cu

0 → 100644

This diff is collapsed.
