updata the decoder model for indefinite width image recognition

Signed-off-by: Nchenjun2hao <chenjun01@ebupt.com>

Showing

src/__init__.py

0 → 100644

src/class_attention.py

0 → 100644

src/dataset.py

0 → 100644

src/utils.py

0 → 100644

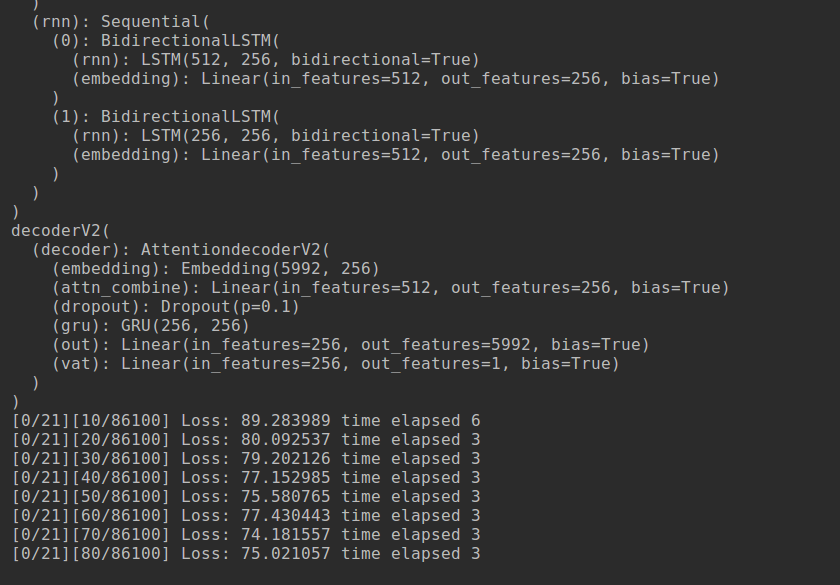

test_img/md_img/attentionV2.png

0 → 100644

93.7 KB