Merge pull request #283 from LDOUBLEV/fixocr

add deploy lite demo

Showing

.clang_format.hook

0 → 100644

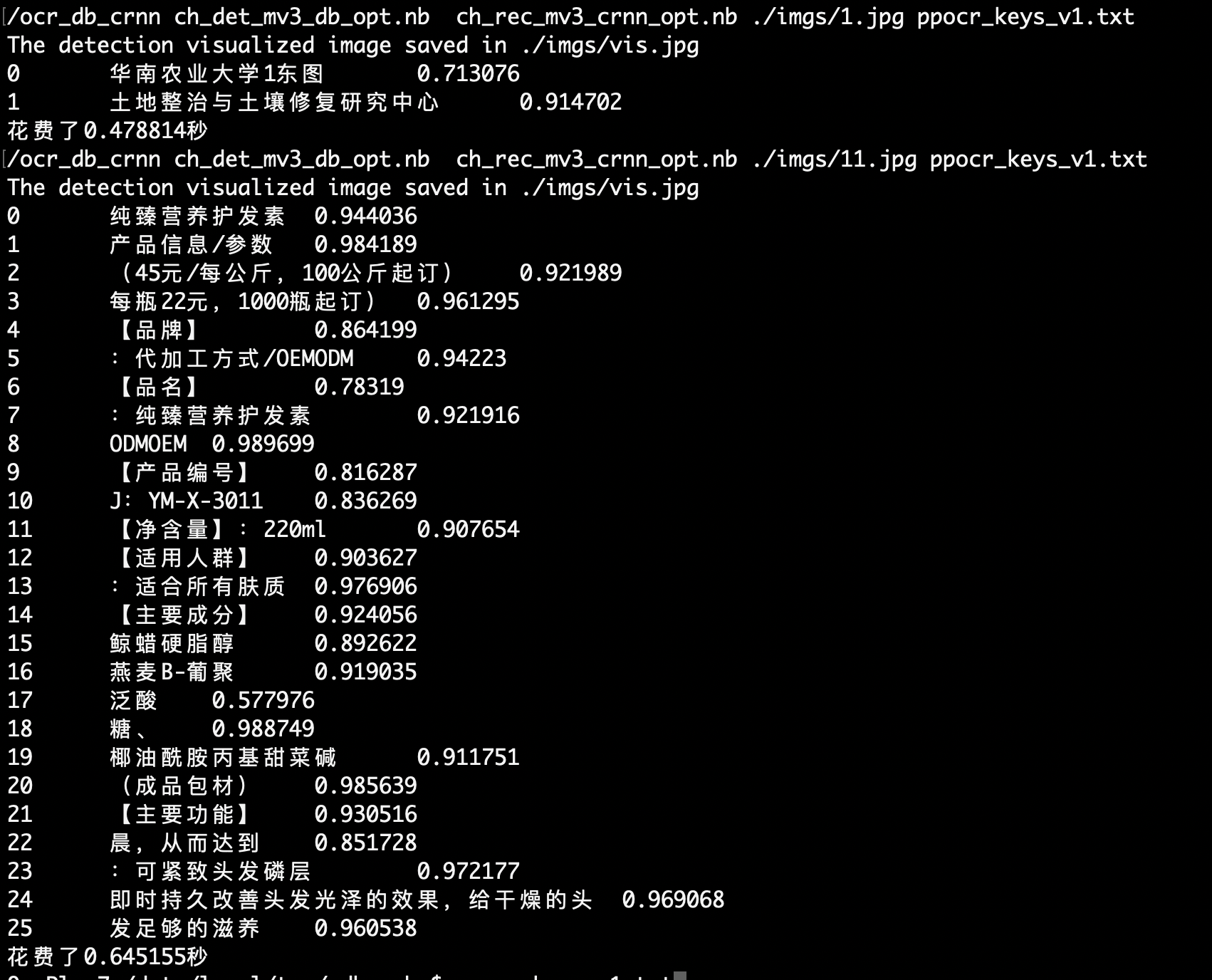

deploy/imgs/demo.png

0 → 100644

997.6 KB

deploy/lite/Makefile

0 → 100644

deploy/lite/config.txt

0 → 100644

deploy/lite/crnn_process.cc

0 → 100644

deploy/lite/crnn_process.h

0 → 100644

deploy/lite/db_post_process.cc

0 → 100644

deploy/lite/db_post_process.h

0 → 100644

deploy/lite/ocr_db_crnn.cc

0 → 100644

deploy/lite/readme.md

0 → 100644