add infer.py for ctc

Showing

ctc/README.md

0 → 100644

此差异已折叠。

ctc/data_provider.py

0 → 100644

ctc/decoder.py

0 → 100644

ctc/images/503.jpg

0 → 100644

10.2 KB

ctc/images/504.jpg

0 → 100644

3.2 KB

ctc/images/505.jpg

0 → 100644

7.6 KB

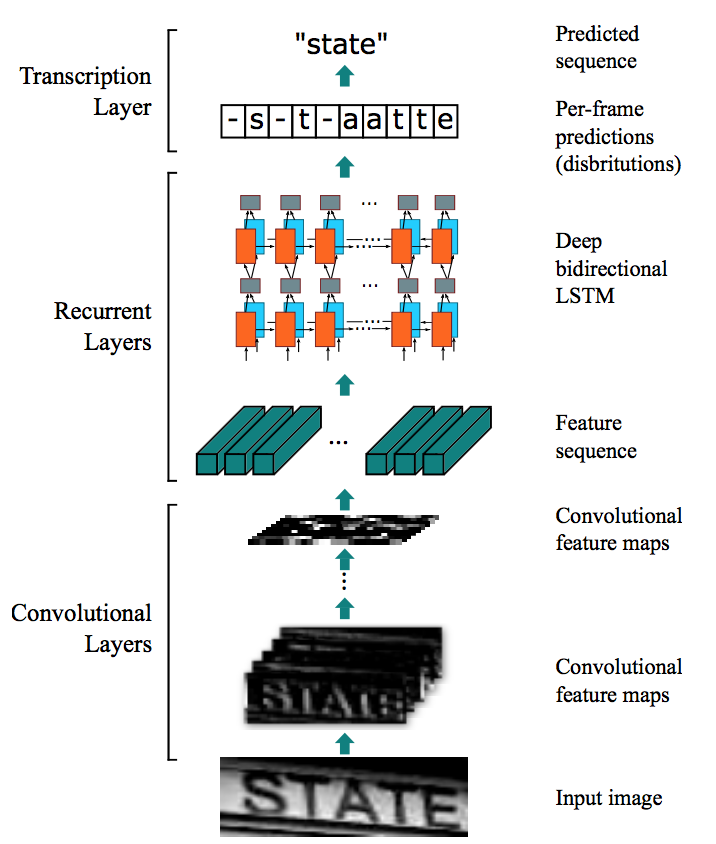

ctc/images/ctc.png

0 → 100644

129.8 KB

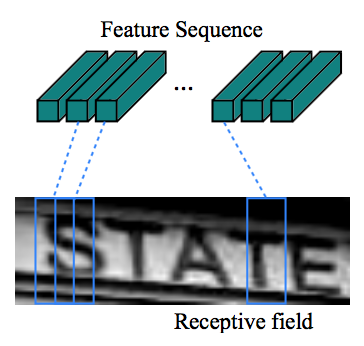

ctc/images/feature_vector.png

0 → 100644

48.1 KB

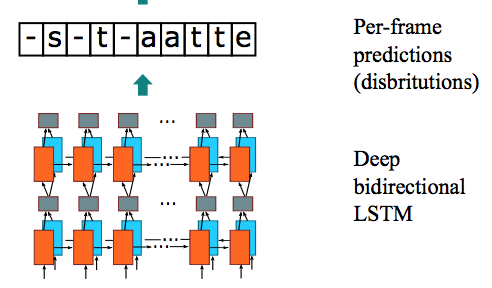

ctc/images/transcription.png

0 → 100644

36.0 KB

ctc/infer.py

0 → 100644

ctc/model.py

0 → 100644

ctc/train.py

0 → 100644