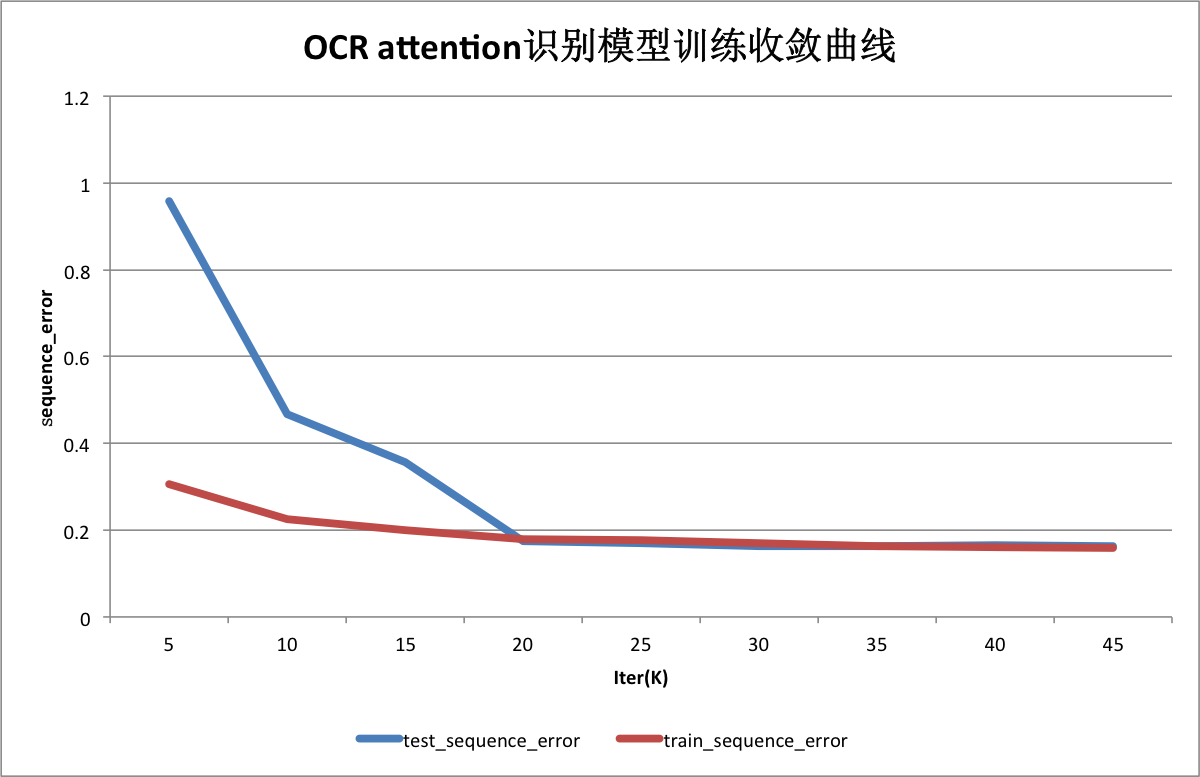

Add attention training model for ocr. (#1034)

* Add attention training model for ocr.
* Add beam search for infer.
* Fix data reader.
* Fix inference.
* Prune result of inference.
* Fix README
* update README
* Resize figure.
* Resize image and fix format.

fluid/ocr_recognition/crnn_ctc_model.py

100644 → 100755

fluid/ocr_recognition/infer.py

100644 → 100755

fluid/ocr_recognition/utility.py

100644 → 100755