【Fluid models】implement DQN model (#889)

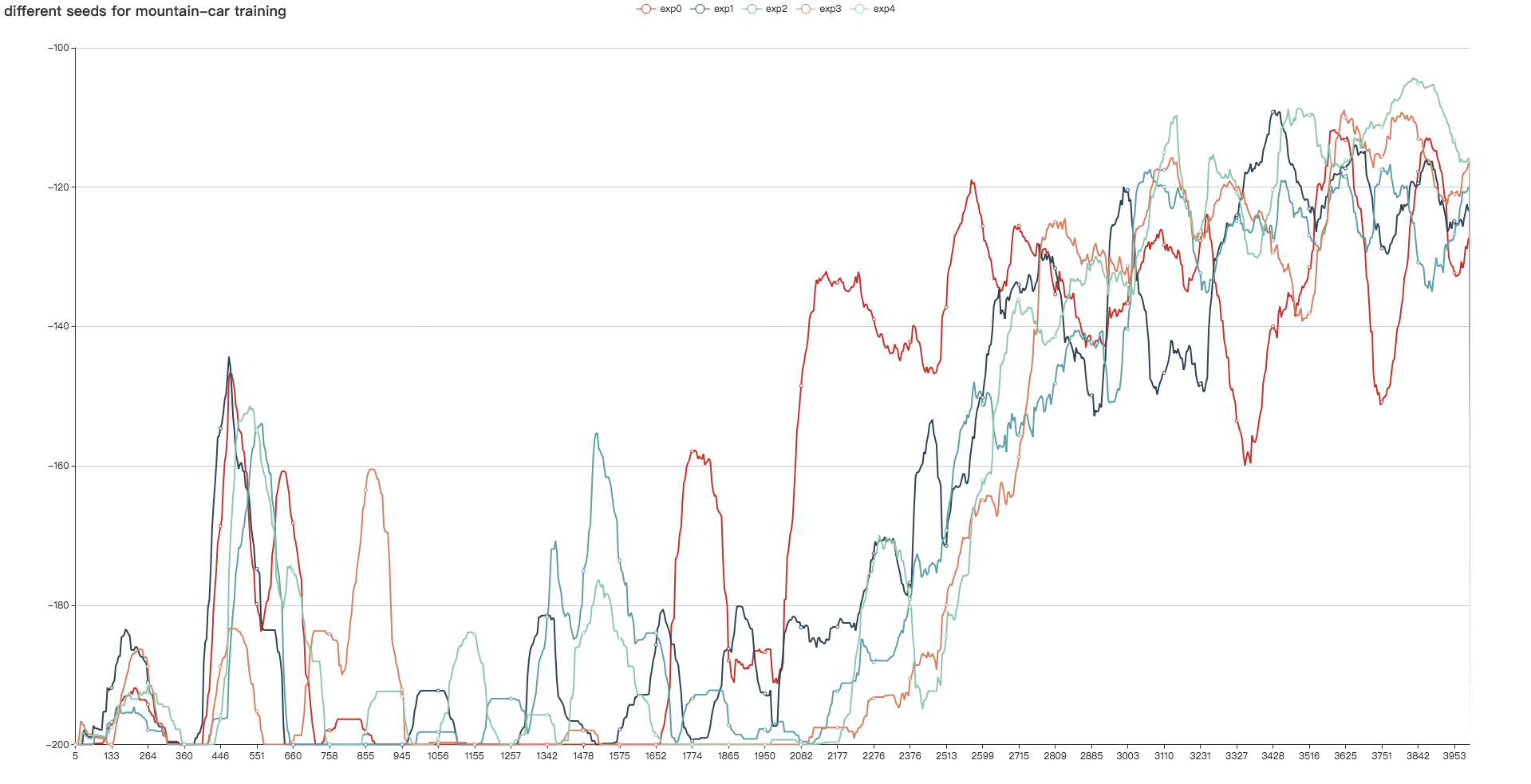

* [DQN]source code commit * Update README.md * Update README.md * add mountain-car curve * Update README.md * Update README.md * clean code * fix code style * [fix code style]/2 * remove some tensorflow package * a better way to sample from replay memory * code style

Showing

fluid/DeepQNetwork/DQN.py

0 → 100644

fluid/DeepQNetwork/README.md

0 → 100644

fluid/DeepQNetwork/agent.py

0 → 100644

fluid/DeepQNetwork/curve.png

0 → 100644

442.3 KB

fluid/DeepQNetwork/expreplay.py

0 → 100644

98.6 KB