add cnn_text

Showing

cnn_text/README.md

0 → 100644

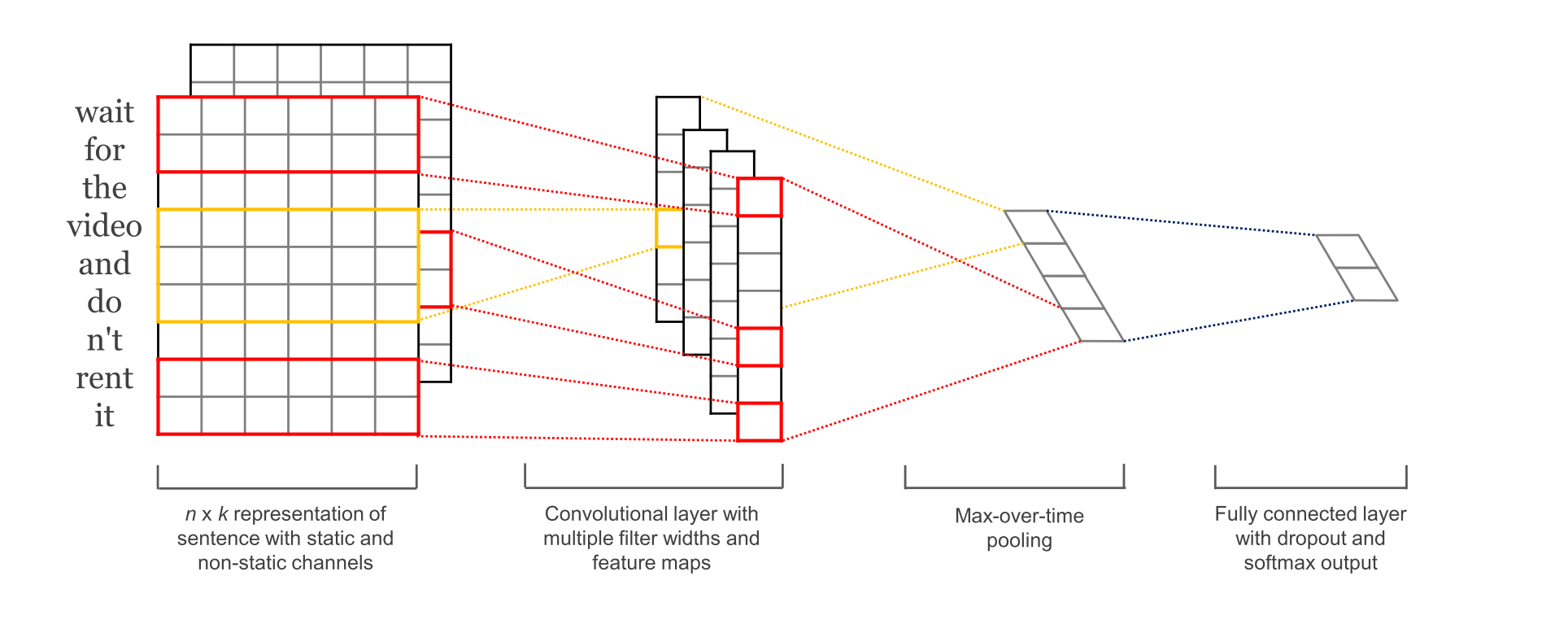

cnn_text/images/intro.png

0 → 100644

158.5 KB

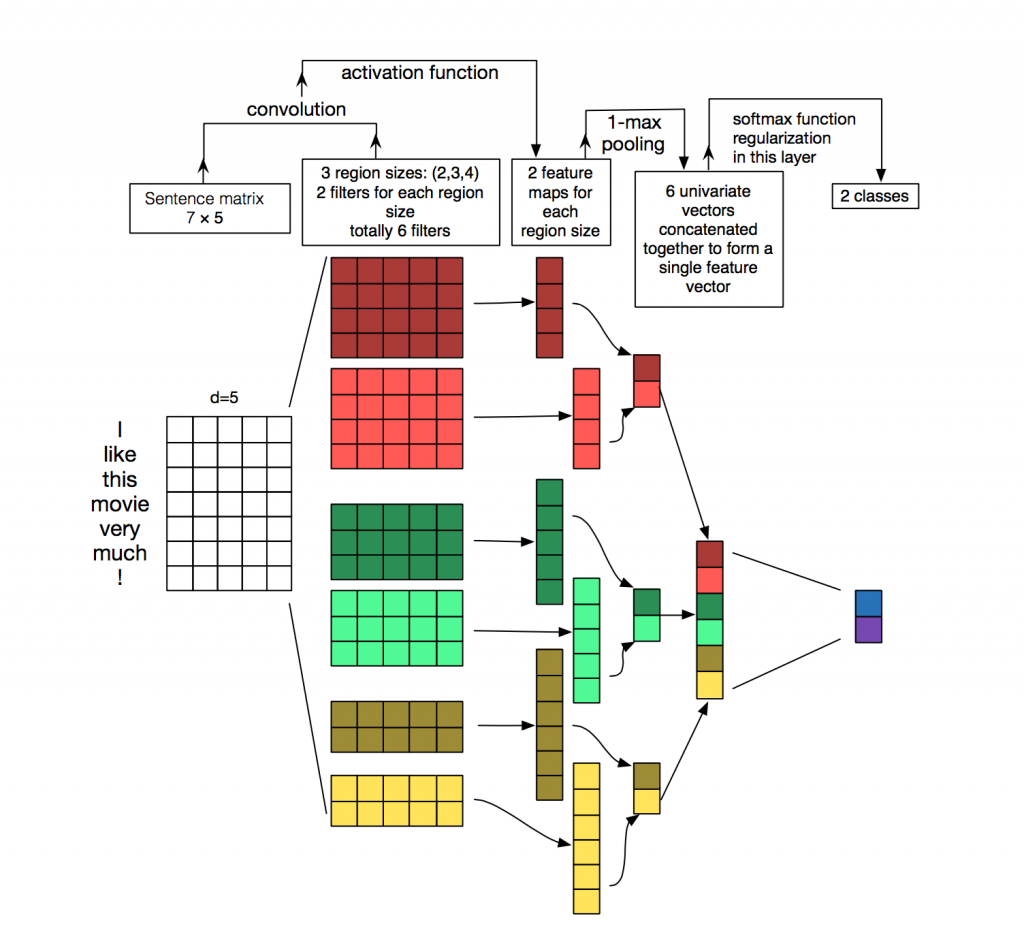

cnn_text/images/structure.png

0 → 100644

200.3 KB

cnn_text/infer.py

0 → 100644

cnn_text/network_conf.py

0 → 100644

cnn_text/reader.py

0 → 100644

cnn_text/train.py

0 → 100644

cnn_text/utils.py

0 → 100644