Support finetune by custom dataset (#3195)

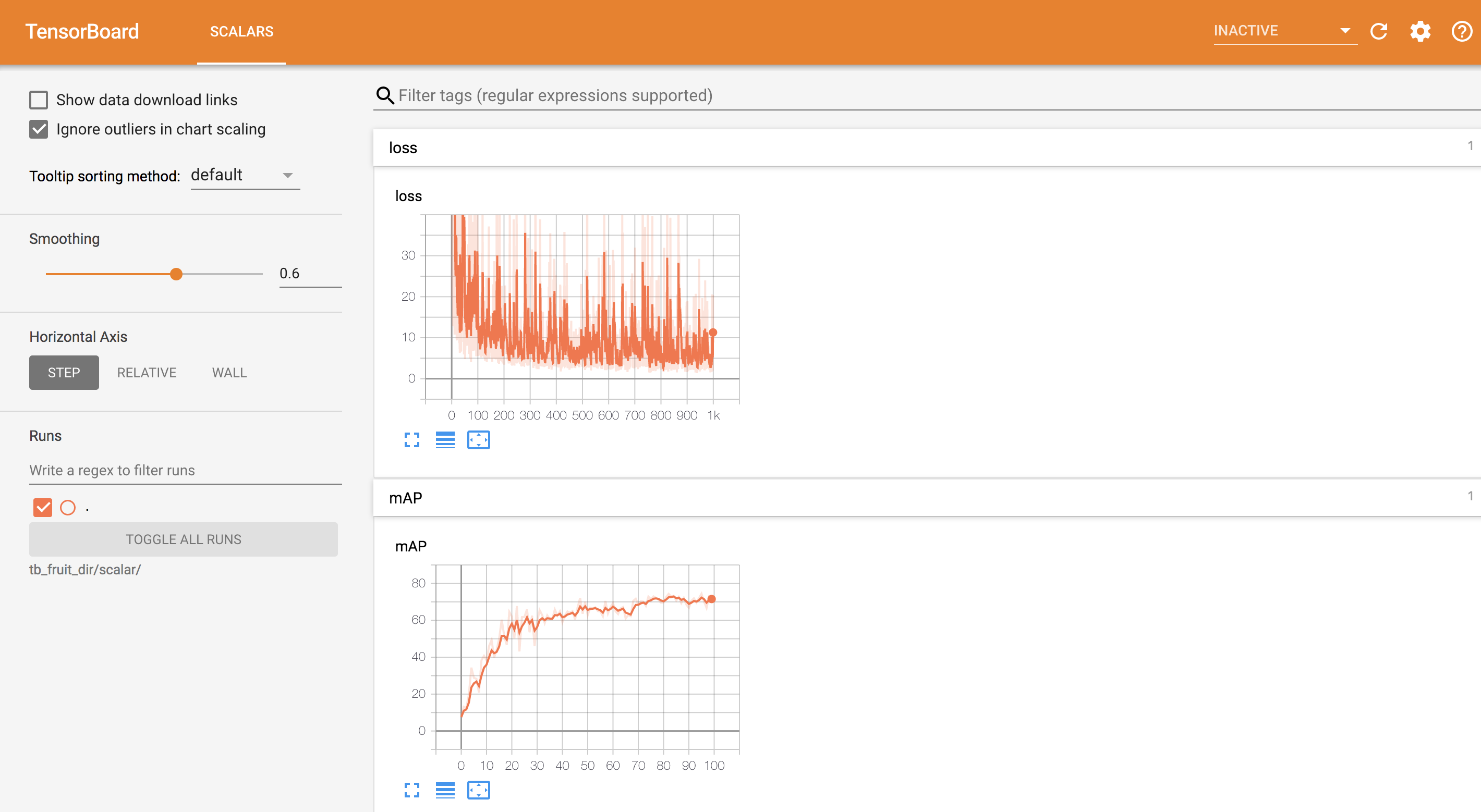

* Support finetune by custom dataset
* add finetune args
* add load finetune
* reconstruct load
* add transfer learning doc
* add fruit demo
* add quick start
* add data preprocessing FAQ
